[Android Studio] Using the OpenCV DNN API

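Preface

I. Background

- Face detection with the NDK

OpenCV4Android series:

1. OpenCV4Android Development Notes (1): Porting the OpenCV 3.3.0 library to Android Studio

2. OpenCV4Android Development Notes (2): Face detection with the OpenCV 3.3.0 library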

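- Lightweight convolutional models

An overview of lightweight CNNs: SqueezeNet, MobileNet, ShuffleNet, Xception

- Integrating OpenCV DNN on mobile devices

The official tutorial, which looks good: https://docs.opencv.org/3.4.1/d0/d6c/tutorial_dnn_android.html

- Monitoring training with TensorBoard

Deep Learning Primer: Training a Model with TensorFlow, Step by Step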

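II. From Training to Deployment

- Goal

Train a model that OpenCV DNN can load, so that it can be integrated into and deployed on a mobile device.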

- Training

Ref: Deep Learning Primer: Training a Model with TensorFlow, Step by Step

Ref: [Tensorflow] Object Detection API - build your training environment

Here I mainly add some notes on TensorBoard.

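Likely location of the evaluation code that feeds TensorBoard: ./object_detection/eval_util.py

Visualization (note: no space after `--logdir=`, otherwise the path is not passed as the log directory):

```shell
tensorboard --logdir=D:/training-sets/data-translate/training
```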

- Deployment

From: https://github.com/opencv/opencv/tree/master/samples/android

Ref: https://github.com/floe/opencv-tutorial-1-camerapreview

Ref: https://github.com/floe/opencv-tutorial-2-mixedprocessing

III. Deploying to the Phone

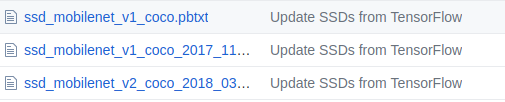

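- Model versions provided by TensorFlow

- Model versions supported by OpenCV

See: opencv_extra/testdata/dnn/

Jeff: The corresponding v2 model is fairly large, presumably for performance reasons. An SSDLite version should follow, so stay tuned.

- Test code for the phone

Combining tutorial-3 with the objdetect sample is enough. Example code: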

```java
package org.opencv.samples.tutorial1;

import android.app.Activity;
import android.content.Context;
import android.content.res.AssetManager;
import android.os.Bundle;
import android.util.Log;
import android.view.SurfaceView;
import android.view.WindowManager;

import org.opencv.android.BaseLoaderCallback;
import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;
import org.opencv.android.LoaderCallbackInterface;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.Point;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.dnn.Dnn; // Jeffrey
import org.opencv.dnn.Net;
import org.opencv.imgproc.Imgproc;

import java.io.BufferedInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

public class Tutorial1Activity extends Activity implements CvCameraViewListener2 {
    private static final String TAG = "OCVSample::Activity";

    // Labels of the custom-trained model; index 0 is the background class.
    private static final String[] classNames = {"background", "laava"};

    private CameraBridgeViewBase mOpenCvCameraView;
    private Net net;

    private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
        @Override
        public void onManagerConnected(int status) {
            switch (status) {
                case LoaderCallbackInterface.SUCCESS:
                    Log.i(TAG, "OpenCV loaded successfully");
                    mOpenCvCameraView.enableView();
                    break;
                default:
                    super.onManagerConnected(status);
                    break;
            }
        }
    };

    public Tutorial1Activity() {
        Log.i(TAG, "Instantiated new " + this.getClass());
    }

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        Log.i(TAG, "called onCreate");
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);
        setContentView(R.layout.tutorial1_surface_view);
        mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.tutorial1_activity_java_surface_view);
        mOpenCvCameraView.setVisibility(SurfaceView.VISIBLE);
        mOpenCvCameraView.setCvCameraViewListener(this);
    }

    @Override
    public void onPause() {
        super.onPause();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onResume() {
        super.onResume();
        if (!OpenCVLoader.initDebug()) {
            Log.d(TAG, "Internal OpenCV library not found. Using OpenCV Manager for initialization");
            OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_0_0, this, mLoaderCallback);
        } else {
            Log.d(TAG, "OpenCV library found inside package. Using it!");
            mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);
        }
    }

    @Override
    public void onDestroy() {
        super.onDestroy();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    // Copy a file from assets to internal storage and return its path:
    // Dnn.readNetFrom*() needs a real file path, not an asset stream.
    private static String getPath(String file, Context context) {
        AssetManager assetManager = context.getAssets();
        try {
            InputStream inputStream = new BufferedInputStream(assetManager.open(file));
            File outFile = new File(context.getFilesDir(), file);
            FileOutputStream os = new FileOutputStream(outFile);
            // Copy in chunks: available() is not guaranteed to report the whole file size.
            byte[] buffer = new byte[8192];
            int n;
            while ((n = inputStream.read(buffer)) != -1) {
                os.write(buffer, 0, n);
            }
            os.close();
            inputStream.close();
            return outFile.getAbsolutePath();
        } catch (IOException ex) {
            Log.i(TAG, "Failed to upload a file: " + file);
        }
        return "";
    }

    // Load the network once the camera view has started.
    public void onCameraViewStarted(int width, int height) {
        // TensorFlow SSD: frozen graph plus its text graph description.
        String model = getPath("frozen_inference_graph.pb", this);
        String config = getPath("ssd_mobilenet_v1_coco.pbtxt", this);
        net = Dnn.readNetFromTensorflow(model, config);
        // Caffe alternative:
        // net = Dnn.readNetFromCaffe(getPath("MobileNetSSD_deploy.prototxt", this),
        //                            getPath("MobileNetSSD_deploy.caffemodel", this));
        Log.i(TAG, "Network loaded successfully");
    }

    public Mat onCameraFrame(CvCameraViewFrame inputFrame) {
        final int IN_WIDTH = 300;
        final int IN_HEIGHT = 300;
        final double IN_SCALE_FACTOR = 0.007843; // ~1/127.5, maps [0, 255] to about [-1, 1]
        final double MEAN_VAL = 127.5;
        final double THRESHOLD = 0.2;

        // Get a new frame and drop the alpha channel.
        Mat frame = inputFrame.rgba();
        Imgproc.cvtColor(frame, frame, Imgproc.COLOR_RGBA2RGB);

        // Forward image through network. With crop=false the whole frame is resized
        // to 300x300, so detections are relative to the full frame (not a center crop).
        Mat blob = Dnn.blobFromImage(frame, IN_SCALE_FACTOR,
                new Size(IN_WIDTH, IN_HEIGHT),
                new Scalar(MEAN_VAL, MEAN_VAL, MEAN_VAL), /*swapRB*/ false, /*crop*/ false);
        net.setInput(blob);
        Mat detections = net.forward();

        int cols = frame.cols();
        int rows = frame.rows();

        // Each row: [imageId, classId, confidence, left, top, right, bottom], coords in [0, 1].
        detections = detections.reshape(1, (int) detections.total() / 7);
        for (int i = 0; i < detections.rows(); ++i) {
            double confidence = detections.get(i, 2)[0];
            if (confidence > THRESHOLD) {
                int classId = (int) detections.get(i, 1)[0];
                int left = (int) (detections.get(i, 3)[0] * cols);
                int top = (int) (detections.get(i, 4)[0] * rows);
                int right = (int) (detections.get(i, 5)[0] * cols);
                int bottom = (int) (detections.get(i, 6)[0] * rows);

                // Draw rectangle around detected object.
                Imgproc.rectangle(frame, new Point(left, top), new Point(right, bottom),
                        new Scalar(0, 255, 0));
                // Guard against class ids outside the label table (e.g. a COCO-trained graph).
                String name = classId < classNames.length ? classNames[classId] : "class " + classId;
                String label = name + ": " + confidence;
                int[] baseLine = new int[1];
                Size labelSize = Imgproc.getTextSize(label, Core.FONT_HERSHEY_SIMPLEX, 0.5, 1, baseLine);
                // Draw background for label.
                Imgproc.rectangle(frame, new Point(left, top - labelSize.height),
                        new Point(left + labelSize.width, top + baseLine[0]),
                        new Scalar(255, 255, 255), Core.FILLED);
                // Write class name and confidence.
                Imgproc.putText(frame, label, new Point(left, top),
                        Core.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar(0, 0, 0));
            }
        }
        return frame;
    }

    public void onCameraViewStopped() {
    }
}
```
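The two numeric steps inside `onCameraFrame()` can be checked off-device. The sketch below reproduces them in plain Java: the per-channel normalization that `blobFromImage` applies, `(pixel - mean) * scalefactor`, and the decoding of the 7-value detection rows into frame-pixel boxes. It makes a few assumptions. `SsdPostprocessSketch`, `Box`, `normalize` and `decode` are hypothetical names, and a `float[]` stands in for the reshaped `detections` Mat. Boxes are also assumed to be normalized over the whole frame, which matches `crop=false`.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical, dependency-free sketch of the numeric steps in onCameraFrame().
 * A float[] stands in for the reshaped CV_32F "detections" Mat.
 */
class SsdPostprocessSketch {

    /** Per-channel normalization as done by blobFromImage: (pixel - mean) * scale. */
    static double normalize(double pixel, double mean, double scale) {
        return (pixel - mean) * scale;
    }

    /** A detection mapped back to frame pixels (hypothetical helper type). */
    static final class Box {
        final String label;
        final double confidence;
        final int left, top, right, bottom;

        Box(String label, double confidence, int left, int top, int right, int bottom) {
            this.label = label;
            this.confidence = confidence;
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }
    }

    /**
     * Decodes rows of [imageId, classId, confidence, left, top, right, bottom] and keeps
     * rows whose confidence exceeds the threshold. Coordinates are assumed to be in [0, 1]
     * over the whole frame (blobFromImage called with crop=false).
     */
    static List<Box> decode(float[] data, int frameWidth, int frameHeight,
                            double threshold, String[] classNames) {
        List<Box> boxes = new ArrayList<>();
        for (int i = 0; i + 7 <= data.length; i += 7) {
            double confidence = data[i + 2];
            if (confidence <= threshold) {
                continue;
            }
            int classId = (int) data[i + 1];
            // Fall back to a numeric name when the id is outside the label table.
            String label = (classId >= 0 && classId < classNames.length)
                    ? classNames[classId] : "class " + classId;
            boxes.add(new Box(label, confidence,
                    (int) (data[i + 3] * frameWidth), (int) (data[i + 4] * frameHeight),
                    (int) (data[i + 5] * frameWidth), (int) (data[i + 6] * frameHeight)));
        }
        return boxes;
    }

    public static void main(String[] args) {
        // 0.007843 is roughly 1/127.5, so pixel values 0 and 255 land near -1 and 1.
        System.out.println(normalize(0, 127.5, 0.007843));
        System.out.println(normalize(255, 127.5, 0.007843));
        float[] detections = {
                0f, 1f, 0.9f, 0.25f, 0.5f, 0.75f, 1.0f, // kept, label "laava"
                0f, 5f, 0.8f, 0f, 0f, 0.5f, 0.5f,       // kept, id outside the label table
                0f, 1f, 0.1f, 0f, 0f, 1f, 1f,           // dropped, below threshold
        };
        for (Box b : decode(detections, 640, 480, 0.2, new String[] {"background", "laava"})) {
            System.out.println(b.label + " " + b.left + "," + b.top + " " + b.right + "," + b.bottom);
        }
    }
}
```

On the device, the reshaped `detections` Mat is CV_32F, so its contents could probably be copied into such an array with `Mat.get(0, 0, float[])` before decoding. Treat that bridging step as an assumption. It is not part of the activity above.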

End.