[Model] GoogLeNet

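The key is understanding the Inception module.

Network architecture analysis

Surprisingly, there is no stack of dense (fully connected) layers at the end. Is this paving the way for running on mobile phones?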

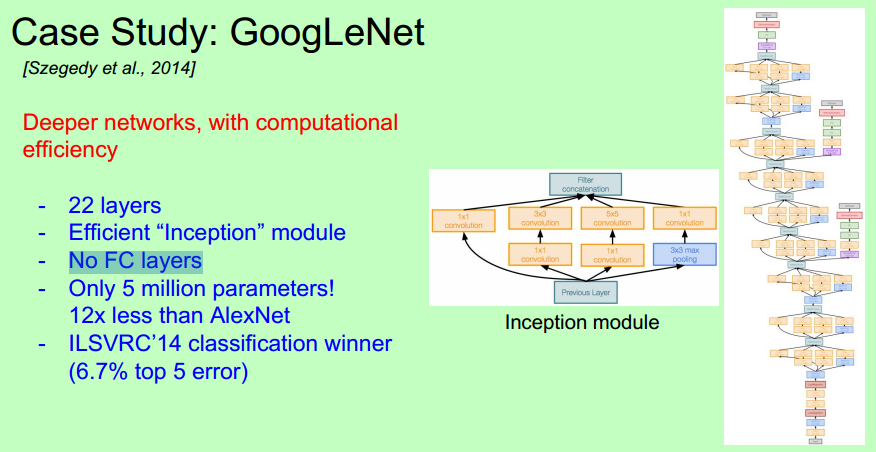

A new kind of module design: [layers of different types placed side by side, in parallel]

The original (naive) design:

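Notes on the figure above:

1. Kernels of different sizes have receptive fields of different sizes, so concatenating their outputs at the end fuses features from different scales;

2. Kernel sizes of 1, 3 and 5 were chosen mainly to make alignment easy: with stride=1, setting pad=0, 1 and 2 respectively gives outputs of the same spatial size, so the features can be concatenated directly;

3. The paper notes that pooling has proven effective in many settings, so a pooling branch is embedded in the Inception module as well;

4. The deeper the layer, the more abstract its features and the larger the receptive field each feature covers, so the proportion of 3x3 and 5x5 convolutions should increase with depth.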
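Point 2 can be checked with the usual output-size formula for a convolution or pooling layer, out = floor((in + 2*pad - k) / stride) + 1. The minimal pure-Python sketch below (the function name `conv_output_size` is only illustrative) confirms that with stride 1, kernels of 1/3/5 with pad 0/1/2, plus a 3x3 pooling with pad 1, all keep the spatial size, so the four branch outputs can be stacked along the channel axis.

```python
def conv_output_size(size, kernel, stride=1, pad=0):
    """Spatial output size of a conv/pool layer (floor division, as in most frameworks)."""
    return (size + 2 * pad - kernel) // stride + 1


# (kernel, pad) for the four branches: 1x1, 3x3, 5x5 convolutions and 3x3 max pooling.
branches = {"conv1x1": (1, 0), "conv3x3": (3, 1), "conv5x5": (5, 2), "pool3x3": (3, 1)}

for size in (28, 14, 7):
    outs = {name: conv_output_size(size, k, stride=1, pad=p)
            for name, (k, p) in branches.items()}
    # Every branch keeps the input size, so the results can be concatenated channel-wise.
    assert all(o == size for o in outs.values())
    print(size, outs)
```

In general, for an odd kernel size k and stride 1, pad = (k - 1) / 2 preserves the size, which is why odd sizes such as 1, 3 and 5 are convenient.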

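However, 5x5 kernels still bring a huge computational cost. To address this, the paper borrows from NIN (Network in Network) [2] and uses 1x1 convolutions for dimensionality reduction.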

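For example, suppose the previous layer outputs 100x100x128. After a 5x5 convolution with 256 output channels (stride=1, pad=2), the output is 100x100x256, and the convolution has 128x5x5x256 = 819,200 weights [input channels x kernel height x kernel width x output channels].

If the previous layer's output first goes through a 1x1 convolution with 32 output channels and then through the 5x5 convolution with 256 output channels, the final output is still 100x100x256, but the number of weights drops to 128x1x1x32 + 32x5x5x256 = 208,896, roughly 4x fewer.

[In essence, this just reduces the number of channels before the expensive convolution.]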
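The arithmetic in the example can be reproduced with a few lines of Python. This is a sketch that counts weights only (biases ignored); `conv_params` is an illustrative helper, not a library function.

```python
def conv_params(c_in, k, c_out):
    """Weights of a k x k convolution, biases ignored: c_in * k * k * c_out."""
    return c_in * k * k * c_out


H, W = 100, 100  # spatial size, unchanged because stride=1 and padding keeps the size

direct = conv_params(128, 5, 256)                               # 5x5 conv only
bottleneck = conv_params(128, 1, 32) + conv_params(32, 5, 256)  # 1x1 reduce, then 5x5

print(direct)                         # 819200
print(bottleneck)                     # 208896
print(round(direct / bottleneck, 2))  # 3.92

# Each weight is applied once per output position, so the number of
# multiply-accumulates shrinks by the same factor.
print(direct * H * W, bottleneck * H * W)
```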

Hence the following design, with 1x1 convolutions inserted.

The improved Inception module is shown below:

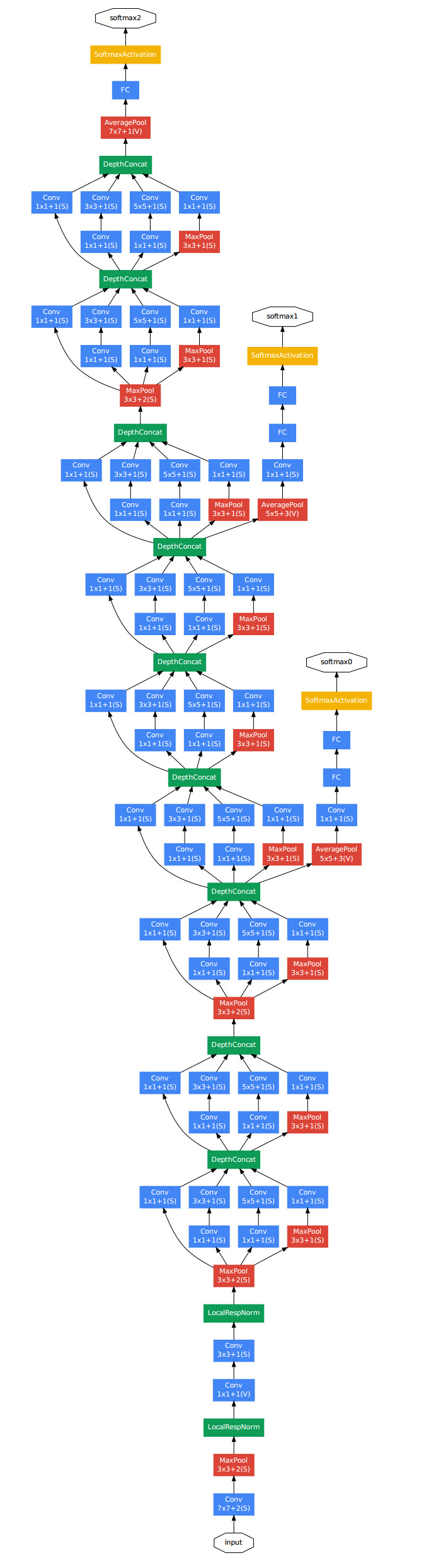
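To compare the naive and the improved module, here is a small weight-counting sketch (biases ignored; the function names are illustrative). The branch widths are those of inception(3a) in the paper, which match Mixed_3b in the TF-Slim code further down, applied to a 192-channel input. Note that in the naive version the pooling branch passes all input channels through unchanged, so the output width keeps growing from module to module; the 1x1 projection after pooling fixes that.

```python
def conv_params(c_in, k, c_out):
    """Weights of a k x k convolution, biases ignored."""
    return c_in * k * k * c_out


def naive_module(c_in, n1x1, n3x3, n5x5):
    """1x1, 3x3, 5x5 convs and 3x3 max pooling, all applied directly to the input."""
    params = (conv_params(c_in, 1, n1x1) + conv_params(c_in, 3, n3x3)
              + conv_params(c_in, 5, n5x5))
    c_out = n1x1 + n3x3 + n5x5 + c_in  # the pooling branch keeps all c_in channels
    return c_out, params


def reduced_module(c_in, n1x1, n3r, n3x3, n5r, n5x5, pool_proj):
    """1x1 reductions before the 3x3/5x5 convs and a 1x1 projection after pooling."""
    params = (conv_params(c_in, 1, n1x1)
              + conv_params(c_in, 1, n3r) + conv_params(n3r, 3, n3x3)
              + conv_params(c_in, 1, n5r) + conv_params(n5r, 5, n5x5)
              + conv_params(c_in, 1, pool_proj))
    c_out = n1x1 + n3x3 + n5x5 + pool_proj
    return c_out, params


print(naive_module(192, 64, 128, 32))                # (416, 387072)
print(reduced_module(192, 64, 96, 128, 16, 32, 32))  # (256, 163328)
```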

Design of the auxiliary classification outputs:

From: http://www.cnblogs.com/hansjorn/p/7522084.html

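Notes on the figure above:

1. GoogLeNet clearly uses a modular structure, which makes it easy to add and modify parts;

2. At the end, the network uses average pooling instead of fully connected layers, an idea taken from NIN; this turned out to improve top-1 accuracy by about 0.6%. Still, one fully connected (linear) layer is added at the very end, mainly to make it easy to fine-tune the network for other label sets;

3. Although the fully connected layers were removed, the network still uses Dropout;

4. To counter vanishing gradients, the network adds two auxiliary softmax classifiers that feed additional gradient signal back into the intermediate layers. The paper says the losses of these two auxiliary classifiers should be multiplied by a discount weight (0.3), yet the Caffe model does not appear to apply any discount. At test time, these two extra softmax branches are removed.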
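Points 2 and 4 can be sketched in pure Python. This is a toy illustration, not the paper's or Caffe's code, and all function names are hypothetical: global average pooling turns an H x W x C feature map into a C-vector, a single linear layer maps it to class scores, and during training the two auxiliary losses are added with a discount weight (0.3, the value given in the GoogLeNet paper).

```python
import math


def global_avg_pool(fmap):
    """fmap is an H x W x C nested list; returns the per-channel mean (length C)."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [sum(fmap[i][j][k] for i in range(h) for j in range(w)) / (h * w)
            for k in range(c)]


def linear(x, weights, bias):
    """Fully connected layer; weights is a C_out x C_in nested list."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    return -math.log(softmax(logits)[label])


def training_loss(main_logits, aux1_logits, aux2_logits, label, aux_weight=0.3):
    """Main loss plus discounted auxiliary losses; the aux heads are dropped at test time."""
    aux = cross_entropy(aux1_logits, label) + cross_entropy(aux2_logits, label)
    return cross_entropy(main_logits, label) + aux_weight * aux


# Toy 7x7 feature map with 2 channels: channel 0 holds i + j, channel 1 is constant 1.
fmap = [[[float(i + j), 1.0] for j in range(7)] for i in range(7)]
pooled = global_avg_pool(fmap)
logits = linear(pooled, [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], [0.0, 0.0, 0.0])
print(pooled, logits)  # [6.0, 1.0] [6.0, 1.0, 3.5]
```

Pooling has no weights, so unlike flattening into a large fully connected layer, the head's parameter count depends only on the number of channels and classes, not on the spatial size.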

That is the original v1 version.

From: http://blog.csdn.net/u010025211/article/details/51206237

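The evolution of Google's Inception v1 - v4 papers

First, the list of papers (error rates are ImageNet top-5, ensemble results as reported in each paper):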

- [v1] Going Deeper with Convolutions, 6.67% top-5 test error, http://arxiv.org/abs/1409.4842

- [v2] Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift, 4.8% top-5 test error, http://arxiv.org/abs/1502.03167

- [v3] Rethinking the Inception Architecture for Computer Vision, 3.5% top-5 validation error (3.6% on the test set), http://arxiv.org/abs/1512.00567

- [v4] Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning, 3.08% top-5 test error, http://arxiv.org/abs/1602.07261

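General ideas:

- Inception v1 stacks 1x1, 3x3 and 5x5 convolutions and 3x3 pooling side by side, which both increases the width of the network and improves its adaptability to different scales;

- v2 improves on v1 in two ways. First, it adds BN layers to reduce Internal Covariate Shift (the change in the distribution of internal activations during training), normalizing each layer's activations to zero mean and unit variance, followed by a learnable scale and shift. Second, following VGG, it replaces the 5x5 convolution in the Inception module with two stacked 3x3 convolutions, which reduces the number of parameters and speeds up computation;

- The most important improvement in v3 is factorization: a 7x7 convolution is split into two 1-D convolutions (1x7 and 7x1), and likewise 3x3 into (1x3, 3x1). This speeds up computation (the saved compute can be spent on a deeper network) and, by turning one conv into two, further increases depth and nonlinearity. Also worth noting: the input size changes from 224x224 to 299x299, and the modules for the 35x35, 17x17 and 8x8 grids are designed more carefully;

- v4 studies whether combining the Inception module with residual connections helps. It finds that residual connections greatly accelerate training and also improve performance, yielding the Inception-ResNet v2 network; it also designs a deeper, more streamlined Inception v4 model that performs on par with Inception-ResNet v2.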
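Two of these ideas can be checked with a few lines of pure Python. The sketch below is illustrative (function names are hypothetical): batch normalization of one channel over a batch (in a real conv layer the statistics are taken per channel over the batch and all spatial positions; here one channel is just a flat list), and the weight savings of the v2/v3 factorizations at an unchanged receptive field, assuming every layer in a stack keeps the same channel count.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel's values over the batch, then scale and shift.

    gamma and beta are the learnable parameters; with gamma=1, beta=0 the output
    has (approximately) zero mean and unit variance.
    """
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]


def weights_per_channel_pair(kernels):
    """Weights per (input, output) channel pair for a stack of (h, w) kernels."""
    return sum(h * w for h, w in kernels)


def receptive_field(kernels):
    """Receptive field (height, width) of stride-1 layers applied in sequence."""
    return (1 + sum(h - 1 for h, _ in kernels), 1 + sum(w - 1 for _, w in kernels))


for single, stack in [([(5, 5)], [(3, 3), (3, 3)]),   # v2: 5x5 -> two 3x3
                      ([(3, 3)], [(1, 3), (3, 1)]),   # v3: 3x3 -> 1x3 + 3x1
                      ([(7, 7)], [(1, 7), (7, 1)])]:  # v3: 7x7 -> 1x7 + 7x1
    # Same receptive field, fewer weights.
    assert receptive_field(single) == receptive_field(stack)
    print(single, weights_per_channel_pair(single), stack, weights_per_channel_pair(stack))
```

The counts come out as 25 vs 18 (28% fewer), 9 vs 6 (33% fewer) and 49 vs 14, while each extra layer adds another nonlinearity.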

Now let's look at how Inception is written in TF-Slim: models/research/slim/nets/inception_v1.py

```python
# Copyright 2016 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
"""Contains the definition for inception v1 classification network."""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf

from nets import inception_utils

# TF 1.x only: tf.contrib (and with it tf.contrib.slim) was removed in TensorFlow 2.x.
slim = tf.contrib.slim
trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)


def inception_v1_base(inputs,
                      final_endpoint='Mixed_5c',
                      scope='InceptionV1'):
  """Defines the Inception V1 base architecture.

  This architecture is defined in:
    Going deeper with convolutions
    Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed,
    Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich.
    http://arxiv.org/pdf/1409.4842v1.pdf.

  Args:
    inputs: a tensor of size [batch_size, height, width, channels].
    final_endpoint: specifies the endpoint to construct the network up to. It
      can be one of ['Conv2d_1a_7x7', 'MaxPool_2a_3x3', 'Conv2d_2b_1x1',
      'Conv2d_2c_3x3', 'MaxPool_3a_3x3', 'Mixed_3b', 'Mixed_3c',
      'MaxPool_4a_3x3', 'Mixed_4b', 'Mixed_4c', 'Mixed_4d', 'Mixed_4e',
      'Mixed_4f', 'MaxPool_5a_2x2', 'Mixed_5b', 'Mixed_5c']
    scope: Optional variable_scope.

  Returns:
    A tuple (net, end_points): the activation at final_endpoint, and a
    dictionary from components of the network to the corresponding activation.

  Raises:
    ValueError: if final_endpoint is not set to one of the predefined values.
  """
  end_points = {}
  with tf.variable_scope(scope, 'InceptionV1', [inputs]):
    with slim.arg_scope(
        [slim.conv2d, slim.fully_connected],
        weights_initializer=trunc_normal(0.01)):
      # Convolutions and max pooling default to stride 1 and SAME padding.
      with slim.arg_scope([slim.conv2d, slim.max_pool2d],
                          stride=1, padding='SAME'):
        end_point = 'Conv2d_1a_7x7'
        net = slim.conv2d(inputs, 64, [7, 7], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
        end_point = 'MaxPool_2a_3x3'
        net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
        end_point = 'Conv2d_2b_1x1'
        net = slim.conv2d(net, 64, [1, 1], scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
        end_point = 'Conv2d_2c_3x3'
        net = slim.conv2d(net, 192, [3, 3], scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
        #--------------------------------------------------------------------------------
        end_point = 'MaxPool_3a_3x3'
        net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        # Each Mixed block is one Inception module: four parallel branches whose
        # outputs are concatenated along the channel axis (axis=3, NHWC).
        # Note: Branch_2 uses a 3x3 kernel here, whereas the module in the paper uses 5x5.
        end_point = 'Mixed_3b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 32, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
        #---------------------------------------------------------------------------------
        end_point = 'Mixed_3c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 192, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'MaxPool_4a_3x3'
        net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 208, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 48, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 224, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4d'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 256, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4e'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 144, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 288, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4f'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'MaxPool_5a_2x2'
        net = slim.max_pool2d(net, [2, 2], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_5b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            # Note: this scope is 'Conv2d_0a_3x3' rather than '0b' as in the other
            # blocks; renaming it would likely break loading pretrained checkpoints.
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0a_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_5c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 384, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
    raise ValueError('Unknown final endpoint %s' % final_endpoint)


def inception_v1(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='InceptionV1'):
  """Defines the Inception V1 architecture.

  This architecture is defined in:
    Going deeper with convolutions
    Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed,
    Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich.
    http://arxiv.org/pdf/1409.4842v1.pdf.

  The default image size used to train this network is 224x224.

  Args:
    inputs: a tensor of size [batch_size, height, width, channels].
    num_classes: number of predicted classes.
    is_training: whether is training or not.
    dropout_keep_prob: the percentage of activation values that are retained.
    prediction_fn: a function to get predictions out of logits.
    spatial_squeeze: if True, logits is of shape [B, C], if false logits is of
      shape [B, 1, 1, C], where B is batch_size and C is number of classes.
    reuse: whether or not the network and its variables should be reused. To be
      able to reuse 'scope' must be given.
    scope: Optional variable_scope.

  Returns:
    logits: the pre-softmax activations, a tensor of size
      [batch_size, num_classes]
    end_points: a dictionary from components of the network to the corresponding
      activation.
  """
  # Final pooling and prediction
  with tf.variable_scope(scope, 'InceptionV1', [inputs, num_classes],
                         reuse=reuse) as scope:
    with slim.arg_scope([slim.batch_norm, slim.dropout],
                        is_training=is_training):
      net, end_points = inception_v1_base(inputs, scope=scope)
      with tf.variable_scope('Logits'):
        # Global average pooling: for a 224x224 input, Mixed_5c is 7x7x1024.
        net = slim.avg_pool2d(net, [7, 7], stride=1, scope='AvgPool_0a_7x7')
        net = slim.dropout(net,
                           dropout_keep_prob, scope='Dropout_0b')
        # A 1x1 convolution on the 1x1 map acts as the final fully connected layer.
        logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                             normalizer_fn=None, scope='Conv2d_0c_1x1')
        if spatial_squeeze:
          logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')

        end_points['Logits'] = logits
        end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
  return logits, end_points
inception_v1.default_image_size = 224

inception_v1_arg_scope = inception_utils.inception_arg_scope
```
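The slim graph can also be traced by hand. The pure-Python sketch below (names are illustrative, not part of TF-Slim) follows the spatial size through the stride-2 layers under padding='SAME', where TensorFlow's output size is ceil(input / stride), and sums the four branch widths of each Mixed block as written in the code. Starting from the default 224x224 input, Mixed_5c comes out 7x7x1024, which is why the logits head uses a 7x7 average pooling; slim.avg_pool2d defaults to padding='VALID', so, assuming no arg_scope overrides it, that pooling yields a 1x1 map.

```python
import math


def same_out(size, stride):
    """Output size with padding='SAME' in TensorFlow: ceil(size / stride)."""
    return math.ceil(size / stride)


# Layers that change the spatial size, in the order of inception_v1_base.
# Stride-1 layers (Conv2d_2b/2c and all Mixed blocks) keep the size under SAME padding.
downsampling = [("Conv2d_1a_7x7", 2), ("MaxPool_2a_3x3", 2), ("MaxPool_3a_3x3", 2),
                ("MaxPool_4a_3x3", 2), ("MaxPool_5a_2x2", 2)]

# Output widths of Branch_0..Branch_3 in each Mixed block, copied from the code above.
mixed = {
    "Mixed_3b": (64, 128, 32, 32),
    "Mixed_3c": (128, 192, 96, 64),
    "Mixed_4b": (192, 208, 48, 64),
    "Mixed_4c": (160, 224, 64, 64),
    "Mixed_4d": (128, 256, 64, 64),
    "Mixed_4e": (112, 288, 64, 64),
    "Mixed_4f": (256, 320, 128, 128),
    "Mixed_5b": (256, 320, 128, 128),
    "Mixed_5c": (384, 384, 128, 128),
}

size = 224  # inception_v1.default_image_size
for name, stride in downsampling:
    size = same_out(size, stride)
    print(name, size)

# tf.concat along axis 3 stacks the branches, so output channels = sum of branch widths.
channels = {name: sum(widths) for name, widths in mixed.items()}
print(size, channels["Mixed_5c"])  # 7 1024
```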
