[ Openstack ] Openstack-Mitaka High Availability: Networking Service (Neutron)

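Contents

Openstack-Mitaka High Availability: Overview

Openstack-Mitaka High Availability: Environment Initialization

Openstack-Mitaka High Availability: Mariadb-Galera Cluster Deployment

Openstack-Mitaka High Availability: Rabbitmq-server Cluster Deployment

Openstack-Mitaka High Availability: memcache

Openstack-Mitaka High Availability: Pacemaker+corosync+pcs HA Cluster

Openstack-Mitaka High Availability: Identity Service (keystone)

OpenStack-Mitaka High Availability: Image Service (glance)

Openstack-Mitaka High Availability: Compute Service (Nova)

Openstack-Mitaka High Availability: Networking Service (Neutron)

Openstack-Mitaka High Availability: Dashboard

Openstack-Mitaka High Availability: Launching an Instance

Openstack-Mitaka High Availability: Testing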

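OpenStack Neutron overview

OpenStack Networking (neutron) lets you create interface devices and attach them to networks; these devices are managed by other OpenStack services.

Neutron consists of the following components:

(1) neutron-server

Accepts API requests and routes them to the appropriate OpenStack Networking plug-in for action.

(2) OpenStack Networking plug-ins and agents

Plug and unplug ports, create networks and subnets, and provide IP addressing. These plug-ins and agents differ depending on the vendor and technology, for example Linux Bridge or Open vSwitch.

(3) Message queue

Used by most OpenStack Networking installations to route information between neutron-server and the various agents. It also acts as a database that stores networking state for particular plug-ins.

OpenStack Networking mainly interacts with OpenStack Compute to provide network connectivity to its instances.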

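Networking modes and concepts

Network virtualization:

[ KVM ] Four simple network models

[ KVM network virtualization ] Openvswitch

These two posts describe in detail how network virtualization works and how it is implemented.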

Install and configure the controller nodes

Perform the following steps on the controller nodes:

First, create the neutron database:

    [root@controller1 ~]# mysql -ugalera -pgalera -h 192.168.0.10
    MariaDB [(none)]> CREATE DATABASE neutron;
    Query OK, 1 row affected (0.09 sec)
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron';
    Query OK, 0 rows affected (0.00 sec)
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron';
    Query OK, 0 rows affected (0.00 sec)
    MariaDB [(none)]> flush privileges;
    Query OK, 0 rows affected (0.01 sec)
    MariaDB [(none)]> Bye
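The password granted here has to match the `[database] connection` URL that neutron.conf uses later (`mysql+pymysql://neutron:neutron@controller/neutron`). As a minimal Python sketch, with the helper name `neutron_db_url` being hypothetical, the URL can be built with the credentials percent-encoded, so characters such as `@` or `/` in a real password do not break URL parsing:

```python
from urllib.parse import quote_plus


def neutron_db_url(user: str, password: str, host: str, database: str) -> str:
    """Build a SQLAlchemy URL of the form used in neutron.conf [database]."""
    # Percent-encode the credentials: '@', ':' or '/' would otherwise be
    # read as URL delimiters.
    return "mysql+pymysql://{}:{}@{}/{}".format(
        quote_plus(user), quote_plus(password), host, database
    )


if __name__ == "__main__":
    # Values used in this guide: user/password "neutron", host "controller".
    print(neutron_db_url("neutron", "neutron", "controller", "neutron"))
```

With the values from this guide the result is exactly the connection string used in neutron.conf; encoding only matters once a stronger password is chosen.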

Create the service credentials:

    [root@controller1 ~]# . admin-openrc
    # password: neutron
    [root@controller1 ~]# openstack user create --domain default --password-prompt neutron
    [root@controller1 ~]# openstack role add --project service --user neutron admin
    [root@controller1 ~]# openstack service create --name neutron --description "OpenStack Networking" network

Create the Networking service API endpoints:

    [root@controller1 ~]# openstack endpoint create --region RegionOne network public http://controller:9696
    [root@controller1 ~]# openstack endpoint create --region RegionOne network internal http://controller:9696
    [root@controller1 ~]# openstack endpoint create --region RegionOne network admin http://controller:9696
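The three endpoint commands differ only in the interface name. A small, hypothetical Python helper that generates them is sketched below; it only builds the command strings and does not call the OpenStack API:

```python
import shlex
from typing import List

INTERFACES = ("public", "internal", "admin")


def endpoint_commands(service: str, url: str, region: str = "RegionOne") -> List[str]:
    """Return one `openstack endpoint create` command line per interface."""
    commands = []
    for interface in INTERFACES:
        argv = ["openstack", "endpoint", "create", "--region", region,
                service, interface, url]
        # shlex.join quotes any argument that would need quoting in a shell.
        commands.append(shlex.join(argv))
    return commands


if __name__ == "__main__":
    for line in endpoint_commands("network", "http://controller:9696"):
        print(line)
```

Generating the lines from one list keeps the URL identical across the three interfaces, which is what the haproxy VIP setup relies on.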

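Configure the networking option:

Two networking options are available:

(1) Provider networks: instances can only attach to public (external) networks. There are no private (self-service) networks, routers, or floating IP addresses. Only admin or other privileged users can manage provider networks.

(2) Self-service networks: option 2 adds layer-3 services on top of option 1 and lets instances attach to private networks. The demo user or other unprivileged users can manage their own private networks, including routers that connect private and public networks. In addition, floating IP addresses make instances on private networks reachable from external networks, where "external" means the physical network, such as the Internet.

This guide uses option 2 to build OpenStack networking; once option 2 is configured, option 1 is straightforward.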

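OpenStack self-service (private) network configuration

Install the components:

Install them on all three controller nodes:

    # yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Configure the server components:

The Networking server component configuration covers the database, authentication mechanism, message queue, topology change notifications, and plug-in.

The main files to configure are: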

    [root@controller1 ~]# cd /etc/neutron/
    [root@controller1 neutron]# ll neutron.conf plugins/ml2/ml2_conf.ini plugins/ml2/linuxbridge_agent.ini l3_agent.ini dhcp_agent.ini metadata_agent.ini
    -rw-r----- 1 root neutron  8741 Dec  3 19:26 dhcp_agent.ini
    -rw-r----- 1 root neutron 64171 Dec  3 19:23 neutron.conf
    -rw-r----- 1 root neutron  8490 Dec  3 19:25 plugins/ml2/linuxbridge_agent.ini
    -rw-r----- 1 root neutron  8886 Dec  3 19:25 plugins/ml2/ml2_conf.ini
    -rw-r----- 1 root neutron 11215 Dec  3 19:42 l3_agent.ini
    -rw-r----- 1 root neutron  7089 Dec  3 19:42 metadata_agent.ini

neutron.conf is configured as follows:

    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = True
    transport_url = rabbit://openstack:openstack@controller1,openstack:openstack@controller2,openstack:openstack@controller3
    auth_strategy = keystone
    notify_nova_on_port_status_changes = True
    notify_nova_on_port_data_changes = True
    bind_host = 192.168.0.11
    # High-availability settings for DHCP agents and routers
    dhcp_agents_per_network = 3
    l3_ha = true
    max_l3_agents_per_router = 3
    min_l3_agents_per_router = 2
    l3_ha_net_cidr = 169.254.192.0/18
    …
    [database]
    connection = mysql+pymysql://neutron:neutron@controller/neutron

    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = neutron
    …
    [nova]
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = nova

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

plugins/ml2/ml2_conf.ini is configured as follows:

    …
    [ml2]
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vxlan
    mechanism_drivers = linuxbridge,l2population
    extension_drivers = port_security

    [ml2_type_flat]
    flat_networks = provider
    …
    [ml2_type_vxlan]
    vni_ranges = 1:1000

    [securitygroup]
    enable_ipset = True

plugins/ml2/linuxbridge_agent.ini is configured as follows:

    [DEFAULT]
    [agent]
    [linux_bridge]
    # Name of the NIC used for external access
    physical_interface_mappings = provider:eno33554992
    [securitygroup]
    enable_security_group = True
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    [vxlan]
    enable_vxlan = True
    # The management address is used for the VXLAN tunnel; set each node's own management IP
    local_ip = 192.168.0.11
    l2_population = True

l3_agent.ini is configured as follows:

    [DEFAULT]
    interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
    external_network_bridge =
    # Router high-availability (VRRP failover) settings
    ha_confs_path = $state_path/ha_confs
    ha_vrrp_auth_type = PASS
    ha_vrrp_auth_password =
    ha_vrrp_advert_int = 2
    [AGENT]

dhcp_agent.ini is configured as follows:

    [DEFAULT]
    interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
    external_network_bridge =
    [AGENT]

metadata_agent.ini is configured as follows:

    [DEFAULT]
    nova_metadata_ip = controller
    metadata_proxy_shared_secret = METADATA_SECRET
    [AGENT]

In the configuration above, pay attention to the two annotated settings in plugins/ml2/linuxbridge_agent.ini:

physical_interface_mappings = provider:eno33554992 names the NIC used for external access; the interface name may differ on each controller node.

local_ip = 192.168.0.11 is the management address; each controller node has a different management IP.
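The HA values in the `[DEFAULT]` section of neutron.conf only make sense relative to the number of nodes that actually run the L3 and DHCP agents (three controllers here). Below is a minimal Python sanity-check sketch covering only the keys shown above. The function name `check_ha` and the rule that no count should exceed the node count are illustrative assumptions, not checks Neutron itself performs at startup; the fallbacks mirror the usual defaults for these options.

```python
import configparser
import ipaddress
from typing import List

SAMPLE = """
[DEFAULT]
dhcp_agents_per_network = 3
l3_ha = true
max_l3_agents_per_router = 3
min_l3_agents_per_router = 2
l3_ha_net_cidr = 169.254.192.0/18
"""


def check_ha(conf_text: str, agent_nodes: int) -> List[str]:
    """Return human-readable problems found in the HA settings."""
    # interpolation=None: neutron.conf values may contain '%' or '$'.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    d = parser["DEFAULT"]
    problems = []

    if not d.getboolean("l3_ha", fallback=False):
        problems.append("l3_ha is not enabled")

    max_l3 = d.getint("max_l3_agents_per_router", fallback=3)
    min_l3 = d.getint("min_l3_agents_per_router", fallback=2)
    dhcp = d.getint("dhcp_agents_per_network", fallback=1)
    if min_l3 > max_l3:
        problems.append("min_l3_agents_per_router > max_l3_agents_per_router")
    if max_l3 > agent_nodes:
        problems.append(f"max_l3_agents_per_router={max_l3} > {agent_nodes} nodes")
    if min_l3 > agent_nodes:
        problems.append(f"min_l3_agents_per_router={min_l3} > {agent_nodes} nodes")
    if dhcp > agent_nodes:
        problems.append(f"dhcp_agents_per_network={dhcp} > {agent_nodes} nodes")

    # The HA network carries VRRP traffic and should stay link-local.
    cidr = ipaddress.ip_network(d.get("l3_ha_net_cidr", "169.254.192.0/18"))
    if not cidr.subnet_of(ipaddress.ip_network("169.254.0.0/16")):
        problems.append(f"l3_ha_net_cidr {cidr} is not link-local")
    return problems


if __name__ == "__main__":
    print(check_ha(SAMPLE, agent_nodes=3) or "HA settings look consistent")
```

On a controller, the contents of /etc/neutron/neutron.conf can be passed instead of `SAMPLE`; `strict=False` tolerates duplicated keys in a long sample config.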

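Copy the six files above to the other controller nodes. Do not leave any file out, and pay attention to the following three settings, which differ per node:

    neutron.conf
        bind_host = 192.168.0.xx
    plugins/ml2/linuxbridge_agent.ini
        physical_interface_mappings = provider:eno33554992
        local_ip = 192.168.0.11

bind_host and local_ip must be set to each node's own management IP, and physical_interface_mappings must name that node's external NIC.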
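Since only a few keys change between controllers, the per-node edits can be expressed as data. The following Python sketch is hypothetical (`node_overrides` and `apply_overrides` are illustrative names); it takes the node IPs from the haproxy backends used in this guide and leaves out physical_interface_mappings because each node's NIC name is not known here. Note that `configparser` drops comments when it writes a file, so this is suited to generating files rather than editing the commented originals in place.

```python
import configparser
import io
from typing import Dict

Overrides = Dict[str, Dict[str, str]]

# Controller management IPs, as used in the haproxy backends of this guide.
NODES = {
    "controller1": "192.168.0.11",
    "controller2": "192.168.0.12",
    "controller3": "192.168.0.13",
}


def node_overrides(ip: str) -> Dict[str, Overrides]:
    """Per-file settings that must differ on each controller."""
    return {
        "neutron.conf": {"DEFAULT": {"bind_host": ip}},
        "plugins/ml2/linuxbridge_agent.ini": {"vxlan": {"local_ip": ip}},
    }


def apply_overrides(conf_text: str, overrides: Overrides) -> str:
    """Return conf_text with the given section/key values replaced."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)
    for section, options in overrides.items():
        if section != parser.default_section and not parser.has_section(section):
            parser.add_section(section)
        for key, value in options.items():
            parser.set(section, key, value)
    out = io.StringIO()
    parser.write(out)  # comments in conf_text are not preserved
    return out.getvalue()


if __name__ == "__main__":
    base = "[vxlan]\nenable_vxlan = True\nlocal_ip = 192.168.0.11\n"
    for host, ip in NODES.items():
        ini = node_overrides(ip)["plugins/ml2/linuxbridge_agent.ini"]
        print(host, apply_overrides(base, ini), sep="\n")
```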

The following changes are best made by hand on each node, one at a time, to avoid mixing things up:

    [root@controller1 neutron]# vim /etc/neutron/metadata_agent.ini
    [DEFAULT]
    nova_metadata_ip = controller1
    metadata_proxy_shared_secret = METADATA_SECRET
    [AGENT]
    [cache]

Make the same change on controller2 and controller3, setting nova_metadata_ip to that node's own hostname.

Configure the Compute service to use the Networking service:

Again, edit each node individually to avoid mixing up the configuration files:

    [root@controller1 ~]# vim /etc/nova/nova.conf
    [neutron]
    url = http://controller:9696
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = neutron

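The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file.

Run on every controller node:

    # ln -vs /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    ‘/etc/neutron/plugin.ini’ -> ‘/etc/neutron/plugins/ml2/ml2_conf.ini’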
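Running `ln -s` a second time fails because the link already exists. If this step is scripted across the three controllers, an idempotent version helps. A minimal Python sketch (the helper name `ensure_symlink` is hypothetical), demonstrated in a temporary directory rather than /etc/neutron:

```python
import os
import tempfile


def ensure_symlink(target: str, link: str) -> bool:
    """Create `link` -> `target` unless it already exists; True if created."""
    if os.path.islink(link):
        current = os.readlink(link)
        if current == target:
            return False  # already correct, nothing to do
        raise FileExistsError(f"{link} points to {current}, expected {target}")
    if os.path.exists(link):
        raise FileExistsError(f"{link} exists and is not a symlink")
    os.symlink(target, link)
    return True


if __name__ == "__main__":
    # Demo in a temporary directory instead of touching /etc/neutron.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "ml2_conf.ini")
        open(target, "w").close()
        link = os.path.join(tmp, "plugin.ini")
        print(ensure_symlink(target, link))  # True: link created
        print(ensure_symlink(target, link))  # False: already in place
```

On a controller the call would be `ensure_symlink("/etc/neutron/plugins/ml2/ml2_conf.ini", "/etc/neutron/plugin.ini")`; a link pointing elsewhere raises instead of being silently overwritten.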

Populate the database; this only needs to be done on one controller node:

    [root@controller1 ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
      --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

The output consists only of INFO messages.

Restart the Compute API service on each controller node:

    # systemctl restart openstack-nova-api.service

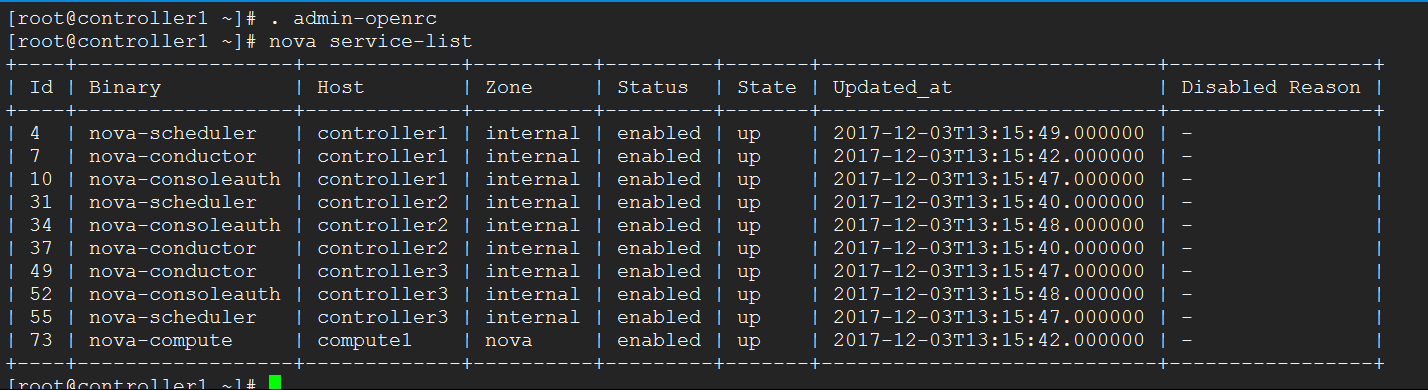

Once it finishes, confirm again that all nova services are running normally.

All services are running normally.

Start the neutron services one at a time, checking the logs for errors after each:

    [root@controller1 ~]# systemctl start neutron-server.service
    [root@controller1 ~]# systemctl start neutron-linuxbridge-agent.service
    [root@controller1 ~]# systemctl start neutron-dhcp-agent.service
    [root@controller1 ~]# systemctl start neutron-metadata-agent.service
    [root@controller1 ~]# systemctl start neutron-l3-agent.service

All services started successfully and the logs contain only INFO messages. Enable them at boot (all services set up earlier were already enabled at boot):

    [root@controller1 ~]# systemctl enable neutron-server.service \
      neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service neutron-l3-agent.service
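Checking the logs after each start can be partly automated. The Python sketch below assumes the common oslo.log line layout (`<date> <time>.<ms> <pid> <LEVEL> ...`) and the RDO default log directory /var/log/neutron/ (for example server.log); both are assumptions to verify against your own nodes. It reports only lines at ERROR, CRITICAL or TRACE level.

```python
import re
import sys
from pathlib import Path
from typing import Iterable, List

# Assumed oslo.log layout, e.g.
# "2017-12-03 19:30:02.456 1234 ERROR neutron.agent.l3.agent [-] ..."
PROBLEM_RE = re.compile(r"^\S+ \S+ \d+ (ERROR|CRITICAL|TRACE)\b")


def find_problems(lines: Iterable[str]) -> List[str]:
    """Return the log lines whose level is ERROR, CRITICAL or TRACE."""
    return [line.rstrip("\n") for line in lines if PROBLEM_RE.match(line)]


def scan_log(path: Path) -> List[str]:
    with path.open(encoding="utf-8", errors="replace") as fh:
        return find_problems(fh)


if __name__ == "__main__":
    # Pass log files as arguments; defaults to the assumed server log path.
    for name in sys.argv[1:] or ["/var/log/neutron/server.log"]:
        path = Path(name)
        if not path.is_file():
            print(f"{name}: not found, skipped")
            continue
        for line in scan_log(path):
            print(f"{name}: {line}")
```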

Of the neutron services, only neutron-server listens on a port (9696), so it is the only one that needs to be added to haproxy.

The current haproxy listen configuration is:

    listen galera_cluster
        mode tcp
        bind 192.168.0.10:3306
        balance source
        option mysql-check user haproxy
        server controller1 192.168.0.11:3306 check inter 2000 rise 3 fall 3 backup
        server controller2 192.168.0.12:3306 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:3306 check inter 2000 rise 3 fall 3 backup

    listen memcache_cluster
        mode tcp
        bind 192.168.0.10:11211
        balance source
        server controller1 192.168.0.11:11211 check inter 2000 rise 3 fall 3 backup
        server controller2 192.168.0.12:11211 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:11211 check inter 2000 rise 3 fall 3 backup

    listen dashboard_cluster
        mode tcp
        bind 192.168.0.10:80
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:80 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:80 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:80 check inter 2000 rise 3 fall 3

    listen keystone_admin_cluster
        mode tcp
        bind 192.168.0.10:35357
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:35357 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:35357 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:35357 check inter 2000 rise 3 fall 3

    listen keystone_public_internal_cluster
        mode tcp
        bind 192.168.0.10:5000
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:5000 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:5000 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:5000 check inter 2000 rise 3 fall 3

    listen glance_api_cluster
        mode tcp
        bind 192.168.0.10:9292
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:9292 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:9292 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:9292 check inter 2000 rise 3 fall 3

    listen glance_registry_cluster
        mode tcp
        bind 192.168.0.10:9191
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:9191 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:9191 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:9191 check inter 2000 rise 3 fall 3

    listen nova_compute_api_cluster
        mode tcp
        bind 192.168.0.10:8774
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:8774 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:8774 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:8774 check inter 2000 rise 3 fall 3

    listen nova_metadata_api_cluster
        mode tcp
        bind 192.168.0.10:8775
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:8775 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:8775 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:8775 check inter 2000 rise 3 fall 3

    listen nova_vncproxy_cluster
        mode tcp
        bind 192.168.0.10:6080
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:6080 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:6080 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:6080 check inter 2000 rise 3 fall 3

    listen neutron_api_cluster
        mode tcp
        bind 192.168.0.10:9696
        balance source
        option tcplog
        option httplog
        server controller1 192.168.0.11:9696 check inter 2000 rise 3 fall 3
        server controller2 192.168.0.12:9696 check inter 2000 rise 3 fall 3
        server controller3 192.168.0.13:9696 check inter 2000 rise 3 fall 3
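Every `listen` section above follows the same pattern: the VIP 192.168.0.10, the three controllers and identical health-check parameters. A hypothetical Python generator for one section is sketched below. It emits only `option tcplog`, because `option httplog` needs `mode http`, and with `mode tcp` HAProxy warns and falls back to tcplog anyway. Nodes listed in `backup` get the `backup` flag, as in the galera and memcache sections.

```python
from typing import Iterable, Sequence, Tuple

VIP = "192.168.0.10"
NODES: Sequence[Tuple[str, str]] = (
    ("controller1", "192.168.0.11"),
    ("controller2", "192.168.0.12"),
    ("controller3", "192.168.0.13"),
)


def listen_block(name: str, port: int, vip: str = VIP,
                 nodes: Sequence[Tuple[str, str]] = NODES,
                 backup: Iterable[str] = ()) -> str:
    """Render one haproxy `listen` section in the style used above."""
    backup_hosts = set(backup)
    lines = [
        f"listen {name}",
        "    mode tcp",
        f"    bind {vip}:{port}",
        "    balance source",
        "    option tcplog",
    ]
    for host, ip in nodes:
        suffix = " backup" if host in backup_hosts else ""
        lines.append(
            f"    server {host} {ip}:{port} check inter 2000 rise 3 fall 3{suffix}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(listen_block("neutron_api_cluster", 9696))
```

After adding a section to /etc/haproxy/haproxy.cfg, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before haproxy is restarted.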

After haproxy restarts successfully, copy the configuration to the other controller nodes:

    [root@controller1 ~]# scp /etc/haproxy/haproxy.cfg controller2:/etc/haproxy/
    haproxy.cfg                100% 6607     6.5KB/s   00:00
    [root@controller1 ~]# scp /etc/haproxy/haproxy.cfg controller3:/etc/haproxy/
    haproxy.cfg                100% 6607     6.5KB/s   00:00

Install and configure the compute node

All of the following steps are performed on the compute node:

    [root@compute1 ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

Configure the common components:

The Networking common component configuration covers the authentication mechanism, message queue, and plug-in.

    [root@compute1 ~]# vim /etc/neutron/neutron.conf
    [DEFAULT]
    transport_url = rabbit://openstack:openstack@controller1,openstack:openstack@controller2,openstack:openstack@controller3
    auth_strategy = keystone
    …
    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller1:11211,controller2:11211,controller3:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = neutron
    …
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the self-service network:

    [root@compute1 ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [DEFAULT]
    [agent]
    [linux_bridge]
    physical_interface_mappings = provider:eno33554992
    [securitygroup]
    enable_security_group = True
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    [vxlan]
    enable_vxlan = True
    local_ip = 192.168.0.31
    l2_population = True

Configure the Compute service to use the Networking service:

    [root@compute1 ~]# vim /etc/nova/nova.conf
    …
    [neutron]
    url = http://controller:9696
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = neutron
    …

When the configuration is complete, restart the Compute service:

    [root@compute1 ~]# systemctl restart openstack-nova-compute.service

Start the Linux bridge agent and enable it at boot:

    [root@compute1 ~]# systemctl start neutron-linuxbridge-agent.service
    [root@compute1 ~]# systemctl enable neutron-linuxbridge-agent.service
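VXLAN tunnels only form correctly if every node's `local_ip` is unique and reachable on the management network. A minimal Python check is sketched below; the management network 192.168.0.0/24 is an assumption inferred from the addresses in this guide, and the function name `check_vxlan_endpoints` is hypothetical.

```python
import ipaddress
from typing import Dict, List

# Assumed management network; addresses are the ones used in this guide.
MGMT_CIDR = "192.168.0.0/24"
LOCAL_IPS = {
    "controller1": "192.168.0.11",
    "controller2": "192.168.0.12",
    "controller3": "192.168.0.13",
    "compute1": "192.168.0.31",
}


def check_vxlan_endpoints(local_ips: Dict[str, str], mgmt_cidr: str) -> List[str]:
    """Each node's local_ip must be unique and inside the management network."""
    network = ipaddress.ip_network(mgmt_cidr)
    owner: Dict[str, str] = {}
    problems = []
    for host, ip in local_ips.items():
        if ipaddress.ip_address(ip) not in network:
            problems.append(f"{host}: {ip} is outside {network}")
        if ip in owner:
            problems.append(f"{host}: {ip} already used by {owner[ip]}")
        else:
            owner[ip] = host
    return problems


if __name__ == "__main__":
    print(check_vxlan_endpoints(LOCAL_IPS, MGMT_CIDR) or "all local_ip values look consistent")
```

A duplicated `local_ip` is the typical result of copying linuxbridge_agent.ini from controller1 without editing it.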

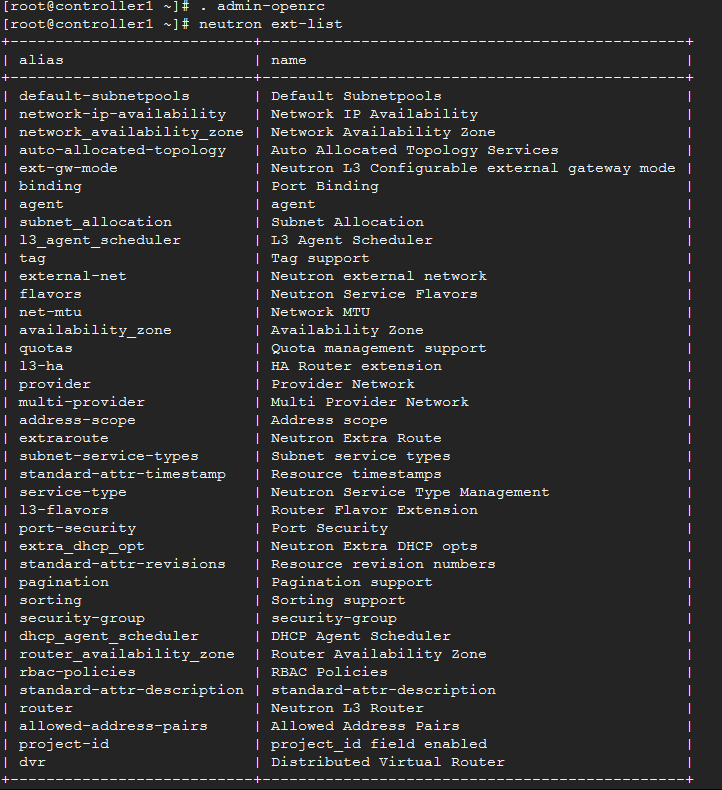

Verify the Networking service

Run on any controller node:

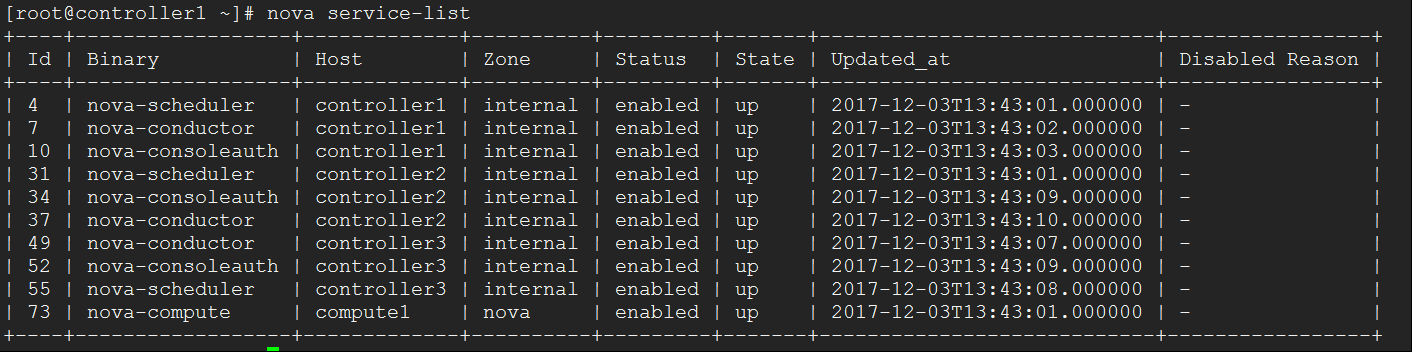

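The Networking service is working. Check the Compute service again.

The Compute service is working as well; neutron is configured successfully.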
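With this topology, every controller should report a Linux bridge, DHCP, L3 and Metadata agent, and compute1 a Linux bridge agent. The Python sketch below compares an agent list against that expectation. It assumes the list was exported as JSON, for example with `neutron agent-list -f json`, and that each entry has `agent_type`, `host` and `alive` keys, where `alive` is either a boolean or the `:-)` marker. Those field names and formats are assumptions to verify against your client version.

```python
import json
import sys
from collections import defaultdict
from typing import Dict, List, Set

# Agents expected in this guide: four agent types on every controller,
# plus the Linux bridge agent on the compute node.
CONTROLLERS = {"controller1", "controller2", "controller3"}
EXPECTED: Dict[str, Set[str]] = {
    "Linux bridge agent": CONTROLLERS | {"compute1"},
    "DHCP agent": set(CONTROLLERS),
    "L3 agent": set(CONTROLLERS),
    "Metadata agent": set(CONTROLLERS),
}


def missing_agents(agents: List[dict], expected: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Map agent type -> hosts where that agent is absent or not alive."""
    alive: Dict[str, Set[str]] = defaultdict(set)
    for agent in agents:
        # Assumption: "alive" is a boolean or the ":-)" marker.
        if agent.get("alive") in (True, ":-)"):
            alive[agent["agent_type"]].add(agent["host"])
    return {
        agent_type: sorted(hosts - alive[agent_type])
        for agent_type, hosts in expected.items()
        if hosts - alive[agent_type]
    }


if __name__ == "__main__":
    # Usage: python check_agents.py agents.json
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as fh:
            print(missing_agents(json.load(fh), EXPECTED) or "all expected agents alive")
```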

Author: hukey

Original link: https://www.cnblogs.com/hukey/p/8047446.html

Copyright: this work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland License.
