Lars George, blog on Hadoop and HBase

http://www.oreillynet.com/pub/au/4685

Author of HBase: The Definitive Guide

HBase Architecture 101 - Storage

http://www.larsgeorge.com/2009/10/hbase-architecture-101-storage.html

One of the more hidden aspects of HBase is how its data is actually stored.

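I first studied the individual classes in HBase that handle the various files, and then tried to build a picture of the HBase architecture in my head from my understanding of the whole storage system. But I found it hard to keep a coherent picture of the architecture in my head, so I drew it instead.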

So what does my sketch of the HBase innards really say?

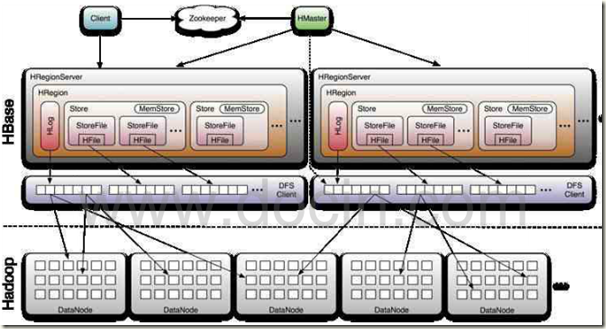

You can see that HBase handles basically two kinds of files: one is used for the write-ahead log and the other for the actual data storage.

The files are primarily handled by the HRegionServers, but in certain scenarios even the HMaster has to perform low-level file operations.

You may also notice that the actual files are in fact divided up into smaller blocks when stored within the Hadoop Distributed Filesystem (HDFS). This is also one of the areas where you can configure the system to handle larger or smaller data better. More on that later.

The general flow is that a new client first contacts the Zookeeper quorum (a separate cluster of Zookeeper nodes) to find a particular row key.

1. It retrieves the server name (i.e. host name) of the server hosting the -ROOT- region from Zookeeper.

2. It queries that server to find the server hosting the .META. table. Both of these details are cached and only looked up once.

3. Lastly, it queries the .META. server to retrieve the server that holds the row the client is looking for. Once it knows where the row resides, i.e. in which region, it caches this information as well and contacts the HRegionServer hosting that region directly. Over time the client thus builds a fairly complete picture of where to get rows from, without needing to query the .META. server again.

Note: The HMaster is responsible for assigning the regions to the HRegionServers when you start HBase. This also includes the "special" -ROOT- and .META. tables.

Next, when the HRegionServer opens a region, it creates a corresponding HRegion object.

When the HRegion is "opened", it sets up a Store instance for each HColumnFamily that the user defined for the table beforehand.

Each Store instance can in turn have one or more StoreFile instances, which are lightweight wrappers around the actual storage file, called HFile.

An HRegion also has a MemStore and uses an HLog instance (a single HLog is shared by all regions on the same HRegionServer). We will now look at how they work together, and also at the exceptions to the rule.

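HBase has two kinds of files, log and data. The post then explains what happens when you read a particular row from HBase; together with the figure, this is very clear.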
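The three-step lookup with client-side caching can be sketched as follows. This is a minimal in-memory model under stated assumptions, not the HBase client API: FakeCluster, Client, contains and rpc_count are hypothetical names, ZooKeeper, -ROOT- and .META. are plain Python structures, and a region is a (start_key, end_key, server) tuple where an empty end key means "no upper bound".

```python
def contains(region, row):
    """True if row falls into the region's [start, end) key range."""
    start, end, _server = region
    return start <= row and (end == "" or row < end)


class FakeCluster:
    """Hypothetical in-memory stand-in for ZooKeeper, -ROOT- and .META."""

    def __init__(self, root_server, meta_server, regions):
        self.zookeeper = {"root_region_server": root_server}
        self.root_region = {root_server: meta_server}
        self.meta = regions  # list of (start_key, end_key, server)
        self.rpc_count = 0   # every remote lookup the client makes

    def meta_lookup(self, row):
        self.rpc_count += 1
        return next(r for r in self.meta if contains(r, row))


class Client:
    def __init__(self, cluster):
        self.cluster = cluster
        self.root_server = None  # cached after the first lookup
        self.meta_server = None  # cached after the first lookup
        self.region_cache = []   # regions already learned from .META.

    def locate(self, row):
        for region in self.region_cache:
            if contains(region, row):
                return region[2]  # cache hit: no remote call at all
        if self.root_server is None:  # step 1: ask ZooKeeper where -ROOT- is
            self.cluster.rpc_count += 1
            self.root_server = self.cluster.zookeeper["root_region_server"]
        if self.meta_server is None:  # step 2: ask -ROOT- where .META. is
            self.cluster.rpc_count += 1
            self.meta_server = self.cluster.root_region[self.root_server]
        region = self.cluster.meta_lookup(row)  # step 3: ask .META. for the region
        self.region_cache.append(region)
        return region[2]
```

The first lookup costs three remote calls, a second row in the same region costs none, and a row in a new region costs only the .META. query, because the -ROOT- and .META. locations are already cached.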

Stay Put

So how is data written to the actual storage?

The client issues a HTable.put(Put) request to the HRegionServer which hands the details to the matching HRegion instance.

The first step is to decide whether the data should first be written to the "Write-Ahead-Log" (WAL), represented by the HLog class. The decision is based on the flag the client sets with the Put.writeToWAL(boolean) method. The WAL is a standard Hadoop SequenceFile (although there is ongoing discussion about replacing it with a file format better suited to HBase), and it stores HLogKey instances. These keys contain a sequence number as well as the actual data, and are used to replay data that was not yet persisted when a server crashed.

Once the data has been written to the WAL (or not), it is placed in the MemStore. At the same time, the MemStore is checked to see whether it is full; if so, a flush to disk is requested. The request is served by a separate thread in the HRegionServer, which writes the data to an HFile located in HDFS. It also saves the last written sequence number, so the system knows what has been persisted so far. Let's have a look at the files now.
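The Put path above can be sketched with a toy SketchRegion class (hypothetical, not HRegion). Each Put gets a sequence number. It is appended to a WAL list only when write_to_wal is true, mirroring Put.writeToWAL(boolean), and then lands in a dict standing in for the MemStore. A flush is triggered once flush_threshold entries accumulate. For brevity the flush runs synchronously and the WAL is modeled per region; in HBase the flush is served by a separate thread and the HLog is shared by the whole HRegionServer.

```python
from dataclasses import dataclass, field


@dataclass
class SketchRegion:
    flush_threshold: int                              # MemStore entries before a flush
    wal: list = field(default_factory=list)           # (sequence number, row, value)
    memstore: dict = field(default_factory=dict)      # row -> value, in memory only
    store_files: list = field(default_factory=list)   # each flush: one sorted, immutable file
    next_seq: int = 1
    last_flushed_seq: int = 0                         # highest sequence number persisted

    def put(self, row, value, write_to_wal=True):
        seq = self.next_seq
        self.next_seq += 1
        if write_to_wal:                              # mirrors Put.writeToWAL(boolean)
            self.wal.append((seq, row, value))
        self.memstore[row] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush(seq)

    def flush(self, seq):
        # Persist the MemStore as a sorted file and remember what is now safe.
        self.store_files.append(sorted(self.memstore.items()))
        self.memstore = {}
        self.last_flushed_seq = seq

    def replay_after_crash(self):
        # Only WAL entries newer than the last flush still need to be replayed.
        return {row: value for seq, row, value in self.wal
                if seq > self.last_flushed_seq}
```

Running it shows why skipping the WAL is risky: after a simulated crash, an edit that was never logged cannot be replayed.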

The steps of a Put operation; this is a straight copy of the SSTable design.

Files: Storage Structure

HBase has a configurable root directory in HDFS; the default is /hbase. You can simply use the dfs command of the Hadoop command-line tool to look at the various files HBase stores.

$ hadoop dfs -lsr /hbase/docs

...

drwxr-xr-x - hadoop supergroup 0 2009-09-28 14:22 /hbase/.logs

drwxr-xr-x - hadoop supergroup 0 2009-10-15 14:33 /hbase/.logs/srv1.foo.bar,60020,1254172960891

-rw-r--r-- 3 hadoop supergroup 14980 2009-10-14 01:32 /hbase/.logs/srv1.foo.bar,60020,1254172960891/hlog.dat.1255509179458

-rw-r--r-- 3 hadoop supergroup 1773 2009-10-14 02:33 /hbase/.logs/srv1.foo.bar,60020,1254172960891/hlog.dat.1255512781014

-rw-r--r-- 3 hadoop supergroup 37902 2009-10-14 03:33 /hbase/.logs/srv1.foo.bar,60020,1254172960891/hlog.dat.1255516382506

...

-rw-r--r-- 3 hadoop supergroup 137648437 2009-09-28 14:20 /hbase/docs/1905740638/oldlogfile.log

...

drwxr-xr-x - hadoop supergroup 0 2009-09-27 18:03 /hbase/docs/999041123

-rw-r--r-- 3 hadoop supergroup 2323 2009-09-01 23:16 /hbase/docs/999041123/.regioninfo

drwxr-xr-x - hadoop supergroup 0 2009-10-13 01:36 /hbase/docs/999041123/cache

-rw-r--r-- 3 hadoop supergroup 91540404 2009-10-13 01:36 /hbase/docs/999041123/cache/5151973105100598304

drwxr-xr-x - hadoop supergroup 0 2009-09-27 18:03 /hbase/docs/999041123/contents

-rw-r--r-- 3 hadoop supergroup 333470401 2009-09-27 18:02 /hbase/docs/999041123/contents/4397485149704042145

drwxr-xr-x - hadoop supergroup 0 2009-09-04 01:16 /hbase/docs/999041123/language

-rw-r--r-- 3 hadoop supergroup 39499 2009-09-04 01:16 /hbase/docs/999041123/language/8466543386566168248

drwxr-xr-x - hadoop supergroup 0 2009-09-04 01:16 /hbase/docs/999041123/mimetype

-rw-r--r-- 3 hadoop supergroup 134729 2009-09-04 01:16 /hbase/docs/999041123/mimetype/786163868456226374

drwxr-xr-x - hadoop supergroup 0 2009-10-08 22:45 /hbase/docs/999882558

-rw-r--r-- 3 hadoop supergroup 2867 2009-10-08 22:45 /hbase/docs/999882558/.regioninfo

drwxr-xr-x - hadoop supergroup 0 2009-10-09 23:01 /hbase/docs/999882558/cache

-rw-r--r-- 3 hadoop supergroup 45473255 2009-10-09 23:01 /hbase/docs/999882558/cache/974303626218211126

drwxr-xr-x - hadoop supergroup 0 2009-10-12 00:37 /hbase/docs/999882558/contents

-rw-r--r-- 3 hadoop supergroup 467410053 2009-10-12 00:36 /hbase/docs/999882558/contents/2507607731379043001

drwxr-xr-x - hadoop supergroup 0 2009-10-09 23:02 /hbase/docs/999882558/language

-rw-r--r-- 3 hadoop supergroup 541 2009-10-09 23:02 /hbase/docs/999882558/language/5662037059920609304

drwxr-xr-x - hadoop supergroup 0 2009-10-09 23:02 /hbase/docs/999882558/mimetype

-rw-r--r-- 3 hadoop supergroup 84447 2009-10-09 23:02 /hbase/docs/999882558/mimetype/2642281535820134018

drwxr-xr-x - hadoop supergroup 0 2009-10-14 10:58 /hbase/docs/compaction.dir

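In the root directory you can see a .logs folder, which holds all the WAL log files managed by HLog. Under .logs there is one subdirectory per HRegionServer; it contains that server's log files (hlog.dat.<timestamp>), which are rolled over time and hold the edits of all regions served by that server.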

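Sometimes you will see oldlogfile.log files (most of the time you will not see them at all). They are created in an exceptional situation that triggers a so-called "log split": the HMaster finds a log file that no HRegionServer is handling any more (for example, because the HRegionServer that owned it has died). The HMaster then splits this log file by region, writes the HLogKey entries into a file called oldlogfile.log, and places that file directly in the directory of the region the entries belong to. When each HRegion is opened, it reads this file, inserts the data into its MemStore and starts a flush to disk, after which the oldlogfile.log file can be deleted. Note: sometimes you may also find a file called oldlogfile.log.old. It is left behind when the HMaster tried to split the log repeatedly and found that an oldlogfile.log already existed. At that point you have to consult the HRegionServer and HMaster logs to find out what happened and whether the .old file can be removed. In my experience so far, these .old files have been empty and could be deleted safely.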
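A log split boils down to grouping one server's log entries by region. In this minimal sketch each WAL entry is assumed to be a (region, sequence number, row, value) tuple, and each per-region list stands in for the oldlogfile.log placed in that region's directory; split_log and replay are hypothetical helper names.

```python
from collections import defaultdict


def split_log(entries):
    """Group an orphaned server's log entries by the region they belong to."""
    per_region = defaultdict(list)
    for region, seq, row, value in entries:
        per_region[region].append((seq, row, value))
    # Keep each region's edits in sequence order so the replay is deterministic.
    return {region: sorted(edits) for region, edits in per_region.items()}


def replay(edits):
    """What the reopened HRegion does: apply the edits in order to a fresh MemStore."""
    memstore = {}
    for _seq, row, value in edits:
        memstore[row] = value
    return memstore
```

Sorting by sequence number matters: a later edit of the same row must win during replay.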

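Each HBase table is stored in its own directory under the root directory, named after the table. Inside the table directory each region also gets its own directory, but that directory is not named after the region: its name is a string computed from the region name with a Jenkins hash. The reason is that region names may contain characters that are not allowed in HDFS path names. Inside each region directory every column family has its own directory, and inside each column family directory are the individual HFiles. So the overall directory structure looks like this: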

/hbase/<tablename>/<encoded-regionname>/<column-family>/<filename>
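The path layout can be expressed as a small helper. HBase encodes the region name with a Jenkins hash; this sketch substitutes zlib.crc32 purely as a stand-in, so the directory names it produces will not match a real cluster. The function names are hypothetical.

```python
import zlib


def encoded_region_name(region_name: str) -> str:
    # Stand-in for the Jenkins hash: turns any region name into an HDFS-safe number.
    return str(zlib.crc32(region_name.encode("utf-8")))


def store_file_path(root, table, region_name, family, file_name):
    # /hbase/<tablename>/<encoded-regionname>/<column-family>/<filename>
    return "/".join([root, table, encoded_region_name(region_name), family, file_name])


# A region name embeds a row key, which may contain a "/" that would break a raw path.
print(store_file_path("/hbase", "docs", "docs,a/b,1254172960891",
                      "contents", "4397485149704042145"))
```

The point of hashing is visible in the example: the raw region name contains a slash, but the encoded directory name is purely numeric.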

In the root of each region directory there is also a .regioninfo file holding metadata about the region. In the future it will be used by an HBase fsck utility (see HBASE-7) to rebuild a broken .META. table. A first use of the region info can be seen in HBASE-1867.

Region Splits

One thing not mentioned so far is region splitting.

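When a region's data files keep growing and exceed a maximum size (configurable with hbase.hregion.max.filesize), the region is split in two. This happens very quickly because the original data files are not modified: the system simply creates two reference files pointing to the original data file, each covering one half of its data. A reference file is named with an ID that has the hash of the referenced region's name as its suffix, for example 1278437856009925445.3323223323. Reference files hold very little information: the key at which the original region was split, and whether the file covers the top or the bottom half. HBase uses the HalfHFileReader class to read the original data file through a reference file. The architecture diagram above does not show this class because it is only used temporarily. Only when the system runs a compaction is the original data file rewritten into two independent files placed in the respective region directories; at the same time the original data file and the reference files are cleaned up.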
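The reference-file trick can be sketched like this. A store file is assumed to be a sorted list of (key, value) pairs, and a reference is modeled as a split key plus "top" or "bottom". HalfReader is a hypothetical class that only imitates the idea behind HalfHFileReader. The naming helper follows the ID-dot-region-hash pattern of the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reference:
    split_key: str
    half: str  # "bottom": keys < split_key, "top": keys >= split_key


class HalfReader:
    """Reads one half of an unchanged parent file through a reference."""

    def __init__(self, store_file, reference):
        self.store_file = store_file
        self.reference = reference

    def scan(self):
        for key, value in self.store_file:
            below = key < self.reference.split_key
            if below == (self.reference.half == "bottom"):
                yield key, value


def split(split_key):
    # Splitting only creates two tiny references; no data is rewritten.
    return Reference(split_key, "bottom"), Reference(split_key, "top")


def reference_file_name(file_id, referenced_region_hash):
    return f"{file_id}.{referenced_region_hash}"
```

Because both daughters read through the same parent file, the split itself is cheap; the actual rewrite is deferred to the next compaction.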

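This shows the philosophy of simple design: for efficiency, a region split only really takes effect at compaction time, just as deletions and the merging of multiple versions do.

The file listing above confirms this: in the table's directory you can see a directory named compaction.dir. It is a staging area for the temporary data created while splitting and compacting regions.

Through a concrete example, this section describes very clearly how HBase lays out its files in HDFS.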

Compactions

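For compactions, first note the confusing mapping between HBase and Bigtable terminology. HBase goes a bit too far here by using "minor compaction" to mean something different from what it means in Bigtable.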

| HBase | Bigtable |
|---|---|
| Flush | Minor Compaction |
| Minor Compaction | Merging Compaction |
| Major Compaction | Major Compaction |

The store files are carefully monitored so that the background housekeeping process can keep them under control.

The flushes of memstores slowly build up an increasing number of on-disk files.

If there are enough of them, the compaction process combines them into a few larger ones. This goes on until the largest of these files exceeds the configured maximum store file size and triggers a region split (see the section called “Region Splits”).

The main purpose of compactions is to improve read efficiency and reduce the overhead of maintaining many HFiles, so compactions are triggered by the number of files. In my view this is unrelated to region splits, which depend on data size.

Compactions come in two varieties: minor, and major.

The minor compactions are responsible for rewriting the last few files into one larger one. The number of files is set with the hbase.hstore.compaction.min property (previously called hbase.hstore.compactionThreshold, which is deprecated but still supported). It is set to 3 by default and must be at least 2. A number that is too large delays minor compactions, and also makes them require more resources and take longer once they start.

The other kind of compaction supported by HBase is the major compaction: it compacts all files into a single one.

In short: a minor compaction merges several small files into a larger one, while a major compaction merges all files into one. Which one runs is decided by the compaction check.

Which one is run is determined automatically when the compaction check is executed. The check is triggered after a memstore was flushed to disk, when the compact or major_compact commands or API calls were invoked, or by an asynchronous background thread.

The region servers run this thread, implemented by the CompactionChecker class. It triggers a check on a regular basis, controlled by hbase.server.thread.wakefrequency (multiplied by hbase.server.thread.wakefrequency.multiplier, set to 1000, so that it runs less often than the other thread-based tasks).
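A quick calculation shows what the multiplier means in practice. The default of 10,000 ms for hbase.server.thread.wakefrequency is an assumption taken from hbase-default.xml as commonly shipped, so check your own configuration; the multiplier of 1000 is the value quoted above.

```python
def compaction_check_interval_ms(wake_frequency_ms=10_000, multiplier=1000):
    # Assumed default wake frequency (10 s) times the multiplier quoted in the text.
    return wake_frequency_ms * multiplier


interval_ms = compaction_check_interval_ms()
print(interval_ms, "ms =", round(interval_ms / 3_600_000, 2), "hours")  # 10000000 ms = 2.78 hours
```

So under these assumptions the background check runs roughly every 2.8 hours; flushes and explicit compact calls trigger checks in between.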

If you call the major_compact command or the majorCompact() API call, you force a major compaction to run. Otherwise the server first checks whether a major compaction is due, based on hbase.hregion.majorcompaction (set to 24 hours) since it last ran. The hbase.hregion.majorcompaction.jitter (set to 0.2, in other words 20%) causes this time to be spread out across the stores.

Without the jitter, all stores would run a major compaction at the same time, every 24 hours.

If no major compaction is due, a minor one is assumed. Based on the configuration properties above, the server determines whether enough files are available for a minor compaction and continues if that is the case. A minor compaction may be promoted to a major compaction when it would include all store files and there are fewer than the configured maximum files per compaction.
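The decision logic above can be sketched as follows, with loud assumptions. Store files are modeled only by their sizes, oldest first. The size filter max_minor_size is hypothetical and much simpler than HBase's real file selection. max_files stands in for the configured maximum files per compaction, with an assumed value of 10. The jitter helper spreads the 24-hour period by up to plus or minus 20%.

```python
import random

DAY_MS = 24 * 60 * 60 * 1000
MB = 1024 * 1024


def major_compaction_period_ms(rng, period_ms=DAY_MS, jitter=0.2):
    """hbase.hregion.majorcompaction spread by +/- hbase.hregion.majorcompaction.jitter."""
    return period_ms + period_ms * jitter * (2 * rng.random() - 1)


def choose_compaction(file_sizes, ms_since_last_major, period_ms, forced=False,
                      min_files=3, max_files=10, max_minor_size=256 * MB):
    """Return "major", "minor" or None for one store (file_sizes: oldest first)."""
    if forced or ms_since_last_major >= period_ms:
        return "major"                   # major_compact / majorCompact(), or due
    # Hypothetical size filter: very large files are left out of a minor.
    candidates = [s for s in file_sizes if s <= max_minor_size]
    if len(candidates) < min_files:      # hbase.hstore.compaction.min
        return None
    selected = candidates[-max_files:]   # newest files, at most max_files
    if len(selected) == len(file_sizes):
        return "major"                   # minor would cover all files: promote
    return "minor"


rng = random.Random(42)
print(major_compaction_period_ms(rng) / 3_600_000, "hours until the next major")
```

Because each store draws its own jittered period, stores on the same server drift apart instead of all compacting at the same moment.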

HFile

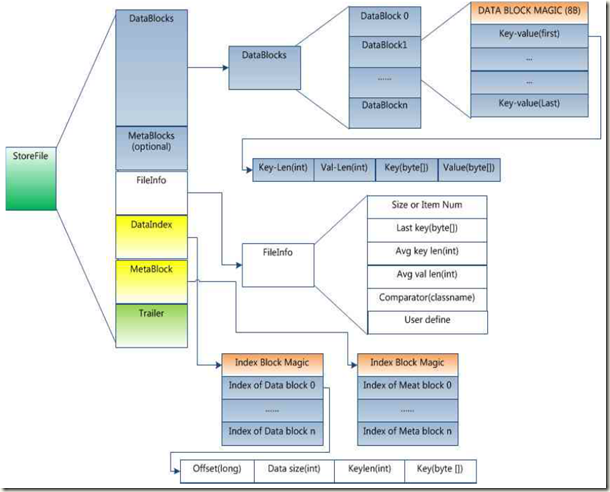

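We now get to the core of the HBase storage architecture: the structure of the actual data store files. HFile is that structure (it is what made Ryan Rawson's name).

HFile was created for one purpose only: to store HBase data quickly and efficiently. It is based on Hadoop's TFile (see HADOOP-3315) and mimics the SSTable format used in Google's Bigtable. HBase previously used Hadoop's MapFile, but it proved to perform poorly. Let's now look at what this file structure actually looks like.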

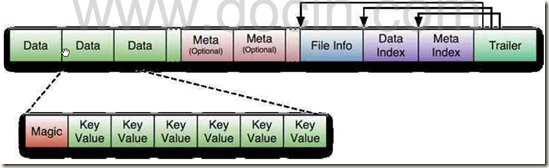

The files have a variable length; the only fixed-size blocks are the FileInfo and Trailer blocks.

As the picture shows, the Trailer holds the pointers to the other blocks. It is written at the end of persisting the data to the file, finalizing the now immutable data store.

The Index blocks record the offsets of the Data and Meta blocks. Both the Data and the Meta blocks are actually optional, but you would most likely always find data in a data store file.

Because HFile mimics the SSTable (sorted string table), it stores a set of key-value pairs sorted by key. For easier management the data is divided into blocks, and an index at the end of the file allows a block to be located quickly. The Trailer is used to locate the index and the other management data. This management data can be placed at the end of the file because an SSTable is immutable once it has been written.
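The sorted-blocks-plus-index idea can be made concrete with a toy model, which is not the real HFile byte format. Items are kept sorted and cut into blocks of roughly block_size. An index stores the first key of each block, so a lookup binary-searches the index and scans just one block. Measuring block size in characters of key plus value is an assumption made for simplicity.

```python
import bisect


def write_blocks(sorted_items, block_size):
    """Cut sorted (key, value) pairs into blocks and build a first-key index."""
    blocks, current, size = [], [], 0
    for key, value in sorted_items:
        current.append((key, value))
        size += len(key) + len(value)
        if size >= block_size:       # checked after the write, like HFile
            blocks.append(current)
            current, size = [], 0
    if current:
        blocks.append(current)
    index = [block[0][0] for block in blocks]  # first key of every block
    return blocks, index


def get(blocks, index, key):
    i = bisect.bisect_right(index, key) - 1    # last block whose first key <= key
    if i < 0:
        return None
    for k, v in blocks[i]:                     # only this one block is scanned
        if k == key:
            return v
    return None


items = [(f"row{i:03d}", "v" * 10) for i in range(20)]
blocks, index = write_blocks(items, block_size=40)
print(len(blocks), index[:3], get(blocks, index, "row007"))
```

This is also why the index can live at the end of the file: it is only complete once every block has been written, which is fine for an immutable file.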

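So how is the size of each block determined?

It can be set per column family when creating a table, through the HColumnDescriptor (which should really be called a FamilyDescriptor). Here is an example: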

{NAME => 'docs', FAMILIES => [{NAME => 'cache', COMPRESSION => 'NONE', VERSIONS => '3', TTL => '2147483647', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'false'}, {NAME => 'contents', COMPRESSION => 'NONE', VERSIONS => '3', TTL => '2147483647', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'false'}, …

This shows a table called docs with two families, cache and contents; the HFile block size for both families is 64KB.

The default is 64KB (65536 bytes). Here is what the HFile JavaDoc explains:

"Minimum block size. We recommend a setting of minimum block size between 8KB to 1MB for general usage.

Larger block size is preferred if files are primarily for sequential access. However, it would lead to inefficient random access (because there are more data to decompress). Smaller blocks are good for random access, but require more memory to hold the block index, and may be slower to create (because we must flush the compressor stream at the conclusion of each data block, which leads to an FS I/O flush). Further, due to the internal caching in Compression codec, the smallest possible block size would be around 20KB-30KB."

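Choosing the block size: a larger size suits sequential access but not random access, because even to read a single byte you have to decompress the whole block. (I had originally assumed the cost was searching within a large block, but since the data is sorted, binary search makes little difference.)

A smaller size brings more overhead, for example a larger block index, so it is a trade-off.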

Each block contains a magic header, and a number of serialized KeyValue instances (see the section called “KeyValue Format” for their format).

If you are not using a compression algorithm, each block is about as large as the configured block size. This is not an exact science, as the writer has to fit whatever you give it: if you store a KeyValue that is larger than the block size, the writer has to accept that. But even with smaller values, the block size check is done after the last value was written, so in practice the majority of blocks will be slightly larger.
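The "check after write" rule explains why blocks overshoot. In this minimal sketch, each entry is reduced to its serialized size in bytes (an assumption), and cut_blocks is a hypothetical helper returning the total size of each block.

```python
def cut_blocks(entry_sizes, block_size):
    """Return the total size of each block when the size check follows each write."""
    blocks, current = [], 0
    for size in entry_sizes:
        current += size            # the entry is written first...
        if current >= block_size:  # ...and only then is the block size checked
            blocks.append(current)
            current = 0
    if current:
        blocks.append(current)
    return blocks


print(cut_blocks([1_000] * 100, 65_536))      # [66000, 34000]
print(cut_blocks([100_000, 1_000], 65_536))   # [100000, 1000]
```

With 1,000-byte entries the first block ends at 66,000 bytes, slightly above 64KB, and a single oversized entry simply becomes a block of its own.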

Note that the 64KB block size is not a strict limit; in practice blocks end up slightly larger. This differs from Bigtable's design, where a record is split across several blocks.

If you have compression enabled, less data is saved. The final store file consists of the same number of blocks, but its total size is smaller because each block is smaller.

With compression enabled, the number of blocks stays the same, but each block becomes smaller.

So far so good, but how can you see if an HFile is OK or what data it contains? There is an App for that!
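Per-block compression can be illustrated with zlib (the DEFLATE codec behind gzip) standing in for whatever codec is configured on the family. The sample blocks are made-up repetitive data, which compresses far better than typical real data.

```python
import zlib


def compress_blocks(blocks):
    # Each block is compressed on its own, so a single block can later be
    # decompressed without touching its neighbours.
    return [zlib.compress(block) for block in blocks]


blocks = [(b"row%05d=value;" % i) * 4000 for i in range(4)]
compressed = compress_blocks(blocks)
print([len(b) for b in blocks], [len(c) for c in compressed])
```

The block count stays at four while every block shrinks, and reading one value still means decompressing its whole block, which is the random-access cost mentioned above.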

The HFile.main() method provides the tools to dump a data file:

$ hbase org.apache.hadoop.hbase.io.hfile.HFile

usage: HFile [-f <arg>] [-v] [-r <arg>] [-a] [-p] [-m] [-k]

-a,--checkfamily Enable family check

-f,--file <arg> File to scan. Pass full-path; e.g. hdfs://a:9000/hbase/.META./12/34

-k,--checkrow Enable row order check; looks for out-of-order keys

-m,--printmeta Print meta data of file

-p,--printkv Print key/value pairs

-r,--region <arg> Region to scan. Pass region name; e.g. '.META.,,1'

-v,--verbose Verbose output; emits file and meta data delimiters

KeyValue Format

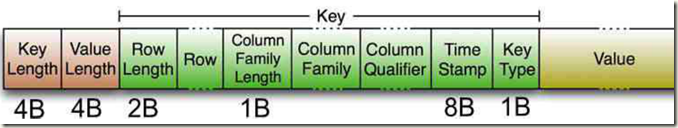

In essence each KeyValue in the HFile is simply a low-level byte array that allows for "zero-copy" access to the data, even with lazy or custom parsing if necessary. How are the instances arranged?

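The structure starts with two fixed-length numbers indicating the sizes of the key and the value parts. Next comes the key. It begins with a fixed-length number giving the length of the row key, followed by the row key itself, then a fixed-length number giving the length of the column family, followed by the family, then the qualifier, and finally two fixed-length fields holding the timestamp and the key type. The value part has no such internal structure; it is just the raw data.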
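The layout can be explored with Python's struct module. This sketch assumes big-endian integers (Java's DataOutput convention), field widths of 4/4/2/1/8/1 bytes for key length, value length, row length, family length, timestamp and key type, and type code 4 for a Put. Treat it as a way to explore the layout, not as a byte-exact HBase encoder. Note that the qualifier has no length prefix: it is whatever remains of the key.

```python
import struct

PUT = 4  # assumed key type code for a Put


def serialize(row, family, qualifier, timestamp, value, key_type=PUT):
    key = (struct.pack(">H", len(row)) + row
           + struct.pack(">B", len(family)) + family
           + qualifier                                # length implied by key length
           + struct.pack(">qB", timestamp, key_type))
    return struct.pack(">ii", len(key), len(value)) + key + value


def parse(buf):
    view = memoryview(buf)  # slicing a memoryview does not copy the bytes
    key_len, value_len = struct.unpack_from(">ii", view, 0)
    key = view[8:8 + key_len]
    value = view[8 + key_len:8 + key_len + value_len]  # direct offset to the value
    row_len = struct.unpack_from(">H", key, 0)[0]
    row = key[2:2 + row_len]
    fam_len = key[2 + row_len]
    fam_start = 3 + row_len
    family = key[fam_start:fam_start + fam_len]
    qualifier = key[fam_start + fam_len:key_len - 9]   # last 9 bytes: timestamp + type
    timestamp, key_type = struct.unpack_from(">qB", key, key_len - 9)
    return bytes(row), bytes(family), bytes(qualifier), timestamp, key_type, bytes(value)


kv = serialize(b"row1", b"cf", b"qual", 1255509179458, b"v")
print(len(kv), parse(kv))
```

The value offset is known from the two leading lengths alone, which is what makes it possible to skip the key entirely.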

With that information you can offset into the array to, for example, access the value directly and ignore the key, if you know what you are doing. Otherwise you can get the required information from the key part. Once the bytes are parsed into a KeyValue object, you have getters to access the details.

The reason why, in the example above, the average key is larger than the value can be attributed to the fields contained in the key: it includes the fully specified dimensions of the cell. The key holds the row key, the column family name, the column qualifier, and so on.

For a small payload this results in considerable overhead. If you deal with small values, try to keep the key small as well: choose a short row key and column key (for example a single-byte family name and an equally short qualifier) to keep the ratio in check.
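A quick size calculation makes the overhead visible. It assumes the same field widths as the layout above (2-byte row length, 1-byte family length, 8-byte timestamp, 1-byte key type, plus two 4-byte length fields per cell); the row key and names below are made-up examples.

```python
def key_length(row, family, qualifier):
    # 2 (row length) + row + 1 (family length) + family + qualifier
    # + 8 (timestamp) + 1 (key type)
    return 2 + len(row) + 1 + len(family) + len(qualifier) + 8 + 1


def cell_size(row, family, qualifier, value):
    # 4-byte key length + 4-byte value length + key + value
    return 8 + key_length(row, family, qualifier) + len(value)


row, value = b"user-0000001234", b"1234"
print(cell_size(row, b"contents", b"mimetype", value))  # 55 bytes for 4 bytes of data
print(cell_size(row, b"c", b"m", value))                # 41 bytes for 4 bytes of data
```

Since every cell repeats its full key, shortening the family and qualifier names saves those bytes on every single cell stored.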

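This figure is the most valuable part of the post. While reading about Bigtable I kept wondering: an SSTable can only store key-value pairs, so how are the row key, column key and timestamp all stored? This makes it completely clear. Good.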

HBase Architecture 101 - Write-ahead-Log

http://www.larsgeorge.com/2010/01/hbase-architecture-101-write-ahead-log.html

What is the Write-ahead-Log you ask? In my previous post we had a look at the general storage architecture of HBase. One thing that was mentioned is the Write-ahead-Log, or WAL. This post explains how the log works in detail, but bear in mind that it describes the current version, which is 0.20.3. I will address the various plans to improve the log for 0.21 at the end of this article. For the term itself please read here.

HBase vs. BigTable Comparison

http://www.larsgeorge.com/2009/11/hbase-vs-bigtable-comparison.html

HBase is an open-source implementation of the Google BigTable architecture. That part is fairly easy to understand and grasp. What I personally feel is a bit more difficult is to understand how much HBase covers and where there are differences (still) compared to the BigTable specification. This post is an attempt to compare the two systems.

An extremely thorough comparison. Excellent.

HBase MapReduce 101 - Part I

http://www.larsgeorge.com/2009/05/hbase-mapreduce-101-part-i.html

In this and the following posts I would like to take the opportunity to go into detail about the MapReduce process as provided by Hadoop but more importantly how it applies to HBase.

HBase File Locality in HDFS

http://www.larsgeorge.com/2010/05/hbase-file-locality-in-hdfs.html

One of the more ambiguous things in Hadoop is block replication: it happens automatically and you should not have to worry about it. HBase relies on it 100% to provide data safety, as it stores its files in the distributed file system. While that works completely transparently, one of the more advanced questions asked is how this affects performance. This usually arises when the user starts writing MapReduce jobs against either HBase or Hadoop directly. Especially with larger data being stored in HBase, how does the system take care of placing the data close to where it is needed? This is referred to as data locality, and in the case of HBase using the Hadoop file system (HDFS) there may be doubts about how that works.

Hadoop on EC2 - A Primer

http://www.larsgeorge.com/2010/10/hadoop-on-ec2-primer.html

As more and more companies discover the power of Hadoop and how it solves complex analytical problems it seems that there is a growing interest to quickly prototype new solutions - possibly on short lived or "throw away" cluster setups. Amazon's EC2 provides an ideal platform for such prototyping and there are a lot of great resources on how this can be done. I would like to mention "Tracking Trends with Hadoop and Hive on EC2" on the Cloudera Blog by Pete Skomoroch and "Running Hadoop MapReduce on Amazon EC2 and Amazon S3" by Tom White. They give you full examples of how to process data stored on S3 using EC2 servers. Overall there seems to be a common need to quickly get insight into what a Hadoop and Hive based cluster can add in terms of business value. In this post I would like to take a step back though from the above full featured examples and show how you can use Amazon's services to set up a Hadoop cluster with the focus on the more "nitty gritty" details that are more difficult to find answers for.

Hive vs. Pig

http://www.larsgeorge.com/2009/10/hive-vs-pig.html

A systematic comparison of Hive and Pig.