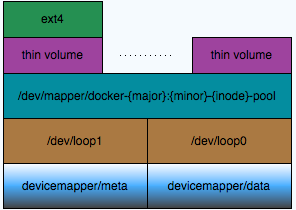

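Because aufs has not been merged into the mainline kernel, Ubuntu is currently the only system on which Docker can use aufs as its storage engine; other systems use thin provisioning from the kernel's device-mapper (the same mechanism LVM thin provisioning is built on). overlayfs is a union filesystem similar to aufs that may be merged into the kernel in the future, but has not been yet. Classic LVM snapshots are not a substitute: they are useful for things like taking a backup from a snapshot, but their performance regresses badly once you have many snapshots of the same device. To implement thin provisioning, Docker creates a 100G sparse file at startup (/var/lib/docker/devicemapper/devicemapper/data, with its metadata in /var/lib/docker/devicemapper/devicemapper/metadata) and uses it as the devicemapper storage pool. Every container is then given its own thin device from this pool, 10G by default, as shown in the figure: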

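For example, when an apache container is created (with the apache image built on top of a fedora image), devicemapper proceeds as follows: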

- Create a snapshot of the base device.

- Mount it and apply the changes in the fedora image.

- Create a snapshot based on the fedora device.

- Mount it and apply the changes in the apache image.

- Create a snapshot based on the apache device.

- Mount it and use as the root in the new container.
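To make the layering concrete, here is a dry-run sketch in POSIX sh that only prints the thin-pool message each snapshot step would send; nothing is executed. The helper name, the pool path and the device IDs are hypothetical (the base device is assumed to have ID 0), the mount-and-apply steps are left out, and the suspend/resume of an active origin that a real snapshot needs is not shown here.

```sh
# Hypothetical dry-run helper: print one create_snap message per layer.
# $1 = pool device path; remaining arguments = layer names, bottom to top.
# The base device is assumed to be ID 0; each layer gets the next free ID.
print_layer_chain() {
    local pool=$1
    shift
    local parent_id=0 next_id=1 name
    for name in "$@"; do
        # Each layer is a thin snapshot of the layer directly below it.
        echo "$name: dmsetup message $pool 0 \"create_snap $next_id $parent_id\""
        parent_id=$next_id
        next_id=$((next_id + 1))
    done
}
```

For example, print_layer_chain /dev/mapper/docker-pool fedora apache container prints three lines, and each one names the previous layer's ID as its origin.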

Managing thin provisioning

Create a thin pool with the LVM tools:

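```sh
# 100M backing file for the test volume group
dd if=/dev/zero of=lvm.img bs=1M count=100
# attach it to a loop device (assumes /dev/loop7 is free) and list loop devices
losetup /dev/loop7 lvm.img
losetup -a
pvcreate /dev/loop7
vgcreate lvm_pool /dev/loop7
# create thin pool
lvcreate -L 80M -T lvm_pool/thin_pool
# create volume in thin pool (500M virtual size on an 80M pool)
lvcreate -T lvm_pool/thin_pool -V 500M -n first_lv
```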
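The point of the example is that the 500M thin volume is larger than the 80M pool backing it; pool blocks are only consumed as data is actually written. The hypothetical helper below just computes that over-commit ratio; on a real system, lvs lvm_pool reports actual pool usage in its Data% column.

```sh
# Hypothetical helper: total virtual size as a percentage of the pool's
# physical size (integer arithmetic, rounded down). Values above 100 mean
# the pool can fill up before the volumes do.
overcommit_pct() {
    # $1 = total virtual size of the thin volumes (MB), $2 = pool size (MB)
    echo $(( $1 * 100 / $2 ))
}
```

With the sizes above, overcommit_pct 500 80 prints 625.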

The default storage pool created when Docker starts:

```
# dmsetup table docker-253:1-138011042-pool
0 209715200 thin-pool 7:2 7:1 128 32768 1 skip_block_zeroing # 209715200*512/1024/1024/1024=100GB
```
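The fields of the pool line follow the dm-thin target layout: metadata device, data device, data block size in sectors, low-water mark in blocks, then optional feature arguments. A small sketch (describe_thin_pool is a hypothetical name) can decode them: the line above describes a 100GiB pool whose metadata and data are on 7:2 and 7:1 (major number 7 is the Linux loop driver, i.e. the loop-attached sparse files), with 128-sector (64KiB) data blocks, a low-water mark of 32768 blocks, and one feature argument, skip_block_zeroing.

```sh
# Decode a thin-pool line from `dmsetup table`. Field layout (dm-thin target):
#   <start> <length> thin-pool <metadata dev> <data dev>
#   <data block size (sectors)> <low water mark (blocks)> [<#features> <feature>...]
describe_thin_pool() {
    # Split the line on whitespace into $1, $2, ...
    set -- $1
    if [ "$3" != "thin-pool" ]; then
        echo "not a thin-pool line" >&2
        return 1
    fi
    # Lengths are counted in 512-byte sectors.
    echo "size=$(( $2 * 512 / 1073741824 ))GiB"
    echo "metadata=$4 data=$5"
    echo "block=$(( $6 * 512 / 1024 ))KiB low_water=$7"
}
```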

Once a container is started, a 10G thin device is allocated from this pool:

```
# dmsetup table docker-253:1-138011042-641cdebd22b55f2656a560cd250e661ab181dcf2f5c5b78dc306df7ce62231f2
0 20971520 thin 253:2 166 # 20971520*512/1024/1024/1024=10GB
```
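A container's device uses the simpler thin target, whose arguments are the pool device and the thin device ID inside that pool. A similar sketch (describe_thin is again a hypothetical name) decodes the line above: the device lives in pool 253:2, has ID 166 and presents 10GiB, although pool blocks are only consumed as data is written.

```sh
# Decode a thin line from `dmsetup table`:
#   <start> <length> thin <pool dev> <dev id> [<external origin dev>]
describe_thin() {
    # Split the line on whitespace into $1, $2, ...
    set -- $1
    if [ "$3" != "thin" ]; then
        echo "not a thin line" >&2
        return 1
    fi
    # Length is in 512-byte sectors; 1 GiB = 1073741824 bytes.
    echo "pool=$4 dev_id=$5 size=$(( $2 * 512 / 1073741824 ))GiB"
}
```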

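The 10G device is allocated in two steps: a create_thin message tells the pool to create thin device ID 166, and dmsetup create activates that ID as a device with a 20971520-sector (10G) table: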

```sh
dmsetup message /dev/mapper/docker-253:1-138011042-pool 0 "create_thin 166"
dmsetup create docker-253:1-138011042-641cdebd22b55f2656a560cd250e661ab181dcf2f5c5b78dc306df7ce62231f3 --table "0 20971520 thin /dev/mapper/docker-253:1-138011042-pool 166"
```
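The two commands generalize to any size and device ID. The dry-run sketch below only prints them (thin_provision_cmds and its arguments are hypothetical). The conversion it relies on is that device-mapper tables count 512-byte sectors, so 1GiB is 2097152 sectors and 10GiB is 20971520 sectors.

```sh
# Hypothetical dry-run helper: print (not run) the commands that create and
# activate a thin device.
# $1 = pool device path, $2 = new thin device ID, $3 = size in GiB,
# $4 = name for the activated dm device
thin_provision_cmds() {
    local pool=$1 dev_id=$2 size_gib=$3 name=$4
    # dm tables are expressed in 512-byte sectors: 1 GiB = 2097152 sectors.
    local sectors=$(( size_gib * 2097152 ))
    echo "dmsetup message $pool 0 \"create_thin $dev_id\""
    echo "dmsetup create $name --table \"0 $sectors thin $pool $dev_id\""
}
```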

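Creating a snapshot. This example uses a separate test pool, /dev/mapper/yy_thin_pool, whose existing thin device /dev/mapper/thin has device ID 0. The origin is suspended, create_snap 1 0 creates snapshot ID 1 from origin ID 0, the origin is resumed, and the snapshot is activated as a 40960-sector (20 MiB) device: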

```sh
dmsetup suspend /dev/mapper/thin
dmsetup message /dev/mapper/yy_thin_pool 0 "create_snap 1 0"
dmsetup resume /dev/mapper/thin
dmsetup create snap --table "0 40960 thin /dev/mapper/yy_thin_pool 1"
```
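The same sequence can be wrapped in a function so that the origin is always resumed, even if create_snap fails. This is a minimal sketch: run and thin_snapshot are hypothetical names, the arguments mirror the commands above, and setting DRY_RUN to any non-empty value makes it print the commands instead of executing them (executing them requires root).

```sh
# Print commands instead of running them when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Snapshot an active thin device and activate the snapshot.
thin_snapshot() {
    local pool=$1 origin=$2 origin_id=$3 snap_id=$4 sectors=$5 name=$6
    # Suspend the origin so no I/O is in flight while the snapshot is taken.
    run dmsetup suspend "$origin" || return 1
    if ! run dmsetup message "$pool" 0 "create_snap $snap_id $origin_id"; then
        # Never leave the origin suspended, even on failure.
        run dmsetup resume "$origin"
        return 1
    fi
    run dmsetup resume "$origin" || return 1
    run dmsetup create "$name" --table "0 $sectors thin $pool $snap_id"
}
```

With the values above, DRY_RUN=1 thin_snapshot /dev/mapper/yy_thin_pool /dev/mapper/thin 0 1 40960 snap prints the four commands in order.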

The devicemapper options can be set when the Docker daemon starts, with docker -d --storage-opt dm.foo=bar. The available options are:

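- dm.basesize: size of the base device, default 10G; this limits the size of containers and images

- dm.loopdatasize: size of the storage pool (the loopback data file), default 100G

- dm.datadev: block device to use for pool data; by default the file /var/lib/docker/devicemapper/devicemapper/data is created

- dm.loopmetadatasize: size of the metadata (the loopback metadata file), default 2G

- dm.metadatadev: block device to use for metadata; by default the file /var/lib/docker/devicemapper/devicemapper/metadata is created

- dm.fs: filesystem for the base device, default ext4

- dm.blocksize: block size of the thin pool, default 64K

- dm.blkdiscard: whether to discard blocks when devices are removed, default true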
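Several options are combined by repeating --storage-opt. The hypothetical helper below only assembles such a command line; the sizes in the test are illustrative, and the docker -d invocation matches the Docker version described here (newer releases start the daemon with dockerd instead).

```sh
# Hypothetical helper: emit one --storage-opt flag per dm.* key=value argument.
storage_opt_flags() {
    local opt
    for opt in "$@"; do
        printf ' --storage-opt %s' "$opt"
    done
}
```

For example, echo "docker -d$(storage_opt_flags dm.basesize=20G dm.loopdatasize=200G)" prints the full daemon command line.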

Finally, let's look at how a container's configuration is organized once it has started.

When a container is created, its basic configuration is written to a directory named after the container ID under /var/lib/docker/containers/:

```
# ls /var/lib/docker/containers/49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e -l
total 20
-rw------- 1 root root    0 Nov 18 16:31 49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e-json.log
-rw-r--r-- 1 root root 1741 Nov 18 16:31 config.json
-rw-r--r-- 1 root root  368 Nov 18 16:31 hostconfig.json
-rw-r--r-- 1 root root   13 Nov 18 16:31 hostname
-rw-r--r-- 1 root root  175 Nov 18 16:31 hosts
-rw-r--r-- 1 root root  325 Nov 18 16:31 resolv.conf
```

Once the 10G device has been allocated, the container's storage configuration is written to the following two files:

```
# cd /var/lib/docker
# cat ./devicemapper/metadata/49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e-init
{"device_id":174,"size":10737418240,"transaction_id":731,"initialized":false}
# cat ./devicemapper/metadata/49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e
{"device_id":175,"size":10737418240,"transaction_id":732,"initialized":false}
```
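Both files describe 10737418240-byte (10GiB) devices, and the container's own device (ID 175) was created right after its -init device (ID 174), as the consecutive transaction IDs show. A minimal sketch for pulling numeric fields out of these files without jq follows; json_field is a hypothetical name, and it assumes the flat single-line layout shown above (a real JSON parser is the safer choice for anything else).

```sh
# Extract a numeric field from a flat, single-line JSON object.
# $1 = field name, $2 = JSON text. Prints nothing if the field is absent.
json_field() {
    printf '%s\n' "$2" | sed -n "s/.*\"$1\":\([0-9]*\).*/\1/p"
}
```

For example, $(( $(json_field size "$meta") / 1073741824 )) turns the size field into GiB.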

The container's rootfs is mounted at /var/lib/docker/devicemapper/mnt/container_id:

```
# mount | grep 49f1
/dev/mapper/docker-253:1-138011042-49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e on /var/lib/docker/devicemapper/mnt/49f19ee979f6bf125c62779dcabf3bdce310b13d22e5c826752db202e509154e type ext4 (rw,relatime,discard,stripe=16,data=ordered)
```
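To tie the pieces together, the hypothetical helper below lists, for one container ID, the on-disk locations discussed in this section. It assumes the default /var/lib/docker root and the devicemapper layout shown above; a different root can be passed as the second argument.

```sh
# Hypothetical helper: print the per-container paths described above.
# $1 = full container ID, $2 = Docker root (defaults to /var/lib/docker)
container_paths() {
    local id=$1 root=${2:-/var/lib/docker}
    echo "$root/containers/$id"                  # config.json, hostconfig.json, logs
    echo "$root/devicemapper/metadata/$id-init"  # metadata of the -init device
    echo "$root/devicemapper/metadata/$id"       # metadata of the container device
    echo "$root/devicemapper/mnt/$id"            # rootfs mount point
}
```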


Zhejiang Public Security Registration No. 33010602011771
