TensorFlow Notes 6: AlexNet Recognition on the CIFAR-10 Dataset

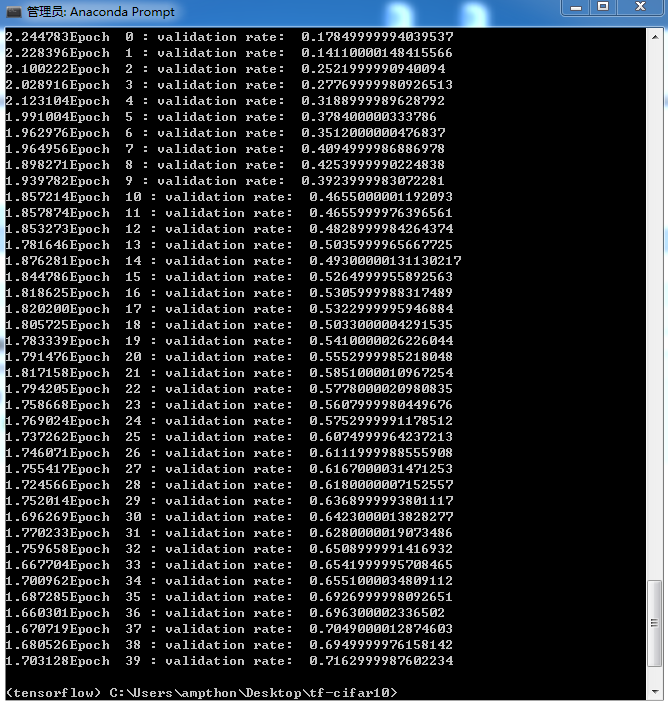

The accuracy is only 70%, and the CPU build of TF took two full days to finish the run. I will keep trying other approaches.

Generating the data index (image path to label):

    import numpy as np
    import os

    # Training set: images are expected under ./data/train/<class id>/.
    # Map every image path to its class id (0-9).
    train_label = {}
    for i in range(10):
        search_path = './data/train/{}'.format(i)
        file_list = os.listdir(search_path)
        for file in file_list:
            train_label[os.path.join(search_path, file)] = i
    np.save('label.npy', train_label)

    # Test set: same layout under ./data/test/<class id>/.
    test_label = {}
    for i in range(10):
        search_path = './data/test/{}'.format(i)
        file_list = os.listdir(search_path)
        for file in file_list:
            test_label[os.path.join(search_path, file)] = i
    np.save('test-label.npy', test_label)
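The label files above are written by passing a Python dict straight to `np.save`, which is why the later scripts index the result of `np.load` with `[()]`. The following is a minimal sketch of that round trip. The two-entry dict and the temporary directory are made up for illustration and are not the real data.

```python
import os
import tempfile

import numpy as np

# Hypothetical two-entry mapping standing in for the real path -> label dict.
labels = {"./data/train/0/a.png": 0, "./data/train/3/b.png": 3}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "label.npy")
    # np.save wraps the dict in a 0-dimensional object array and pickles it.
    np.save(path, labels)

    # Recent NumPy versions refuse to unpickle unless allow_pickle=True.
    loaded = np.load(path, allow_pickle=True)
    # Indexing a 0-d array with an empty tuple returns the stored object.
    restored = loaded[()]

print(loaded.shape, loaded.dtype)
print(restored == labels)
```

`loaded.item()` should give the same dict and may read more clearly than `[()]`.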

Training:

    import os

    import cv2
    import numpy as np
    import tensorflow as tf  # TensorFlow 1.x API (placeholders, sessions)

    # Convert the given labels to one-hot form.
    def getOneHotLabel(label, depth):
        m = np.zeros([len(label), depth])
        for i in range(len(label)):
            m[i][label[i]] = 1
        return m

    # Build the neural network.
    def alexnet(image, keepprob=0.5):
        # Conv layer 1. Kernel size, bias and the other parameters are given in the code; same below.
        with tf.name_scope("conv1") as scope:
            kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(image, kernel, [1, 4, 4, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[64]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv1 = tf.nn.relu(bias, name=scope)

        # LRN layer
        lrn1 = tf.nn.lrn(conv1, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name="lrn1")

        # Max pooling layer
        pool1 = tf.nn.max_pool(lrn1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool1")

        # Conv layer 2
        with tf.name_scope("conv2") as scope:
            kernel = tf.Variable(tf.truncated_normal([5, 5, 64, 192], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[192]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv2 = tf.nn.relu(bias, name=scope)

        # LRN layer
        lrn2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name="lrn2")

        # Max pooling layer
        pool2 = tf.nn.max_pool(lrn2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool2")

        # Conv layer 3
        with tf.name_scope("conv3") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 192, 384], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[384]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv3 = tf.nn.relu(bias, name=scope)

        # Conv layer 4
        with tf.name_scope("conv4") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[256]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv4 = tf.nn.relu(bias, name=scope)

        # Conv layer 5
        with tf.name_scope("conv5") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[256]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv5 = tf.nn.relu(bias, name=scope)

        # Max pooling layer
        pool5 = tf.nn.max_pool(conv5, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool5")

        # Fully connected layers
        flatten = tf.reshape(pool5, [-1, 6 * 6 * 256])

        weight1 = tf.Variable(tf.truncated_normal([6 * 6 * 256, 4096], mean=0, stddev=0.01))
        fc1 = tf.nn.sigmoid(tf.matmul(flatten, weight1))
        dropout1 = tf.nn.dropout(fc1, keepprob)

        weight2 = tf.Variable(tf.truncated_normal([4096, 4096], mean=0, stddev=0.01))
        fc2 = tf.nn.sigmoid(tf.matmul(dropout1, weight2))
        dropout2 = tf.nn.dropout(fc2, keepprob)

        weight3 = tf.Variable(tf.truncated_normal([4096, 10], mean=0, stddev=0.01))
        fc3 = tf.nn.sigmoid(tf.matmul(dropout2, weight3))

        return fc3

    def alexnet_main():
        # Load the training-set file names and labels.
        files = np.load("label.npy", allow_pickle=True, encoding='bytes')[()]

        # Extract the file names.
        keys = [i for i in files]
        print(len(keys))

        myinput = tf.placeholder(dtype=tf.float32, shape=[None, 224, 224, 3], name='input')
        mylabel = tf.placeholder(dtype=tf.float32, shape=[None, 10], name='label')

        # Build the network with keepprob = 0.6.
        # NOTE: the same graph is used for validation below, so dropout is active there too.
        myoutput = alexnet(myinput, 0.6)

        # Training loss.
        # NOTE: softmax_cross_entropy_with_logits expects unscaled logits, but alexnet()
        # ends in a sigmoid. This is kept as in the original run.
        loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(logits=myoutput, labels=mylabel))

        # Optimizer. The learning rate is set to 0.09; other values can be used.
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.09).minimize(loss)

        # Accuracy.
        valaccuracy = tf.reduce_mean(
            tf.cast(
                tf.equal(tf.argmax(myoutput, 1), tf.argmax(mylabel, 1)),
                tf.float32))

        # TensorFlow saver, used to save the model.
        saver = tf.train.Saver()
        init = tf.global_variables_initializer()

        with tf.Session() as sess:
            sess.run(init)

            # 40 epochs
            for loop in range(40):
                # Generate and shuffle the order of the training set.
                # NOTE: reshuffling all 50000 indices every epoch also changes which
                # images land in the validation part, so validation images have usually
                # been trained on in earlier epochs.
                indices = np.arange(50000)
                np.random.shuffle(indices)

                # The batch size is 200.
                # Of the 50000 training images, the first 40000 are used for training
                # and the last 10000 for validation.
                for i in range(0, 0 + 40000, 200):
                    photo = []
                    label = []
                    for j in range(0, 200):
                        # print(keys[indices[i + j]])
                        # NOTE: divides by 225 as in the original; 255 would map pixels
                        # to [0, 1]. Training and testing must use the same value.
                        photo.append(cv2.resize(cv2.imread(keys[indices[i + j]]), (224, 224)) / 225)
                        label.append(files[keys[indices[i + j]]])
                    m = getOneHotLabel(label, depth=10)
                    a, b = sess.run([optimizer, loss], feed_dict={myinput: photo, mylabel: m})
                    print("\r%f" % b, end='')

                acc = 0
                # Validate on 200 validation images at a time; each run returns the
                # accuracy on those 200 images.
                for i in range(40000, 40000 + 10000, 200):
                    photo = []
                    label = []
                    for j in range(i, i + 200):
                        photo.append(cv2.resize(cv2.imread(keys[indices[j]]), (224, 224)) / 225)
                        label.append(files[keys[indices[j]]])
                    m = getOneHotLabel(label, depth=10)
                    acc += sess.run(valaccuracy, feed_dict={myinput: photo, mylabel: m})

                # 50 validation batches were summed, so divide by 50.
                print("Epoch ", loop, ': validation rate: ', acc / 50)

            # Save the model. The target directory is created first if it is missing.
            os.makedirs("model", exist_ok=True)
            saver.save(sess, "model/model.ckpt")

    if __name__ == '__main__':
        alexnet_main()
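The flatten step in the network hardcodes `6*6*256`, which only holds for 224x224 inputs. As a rough check, the sketch below traces the spatial size through the layers using TensorFlow's padding rules: `SAME` gives `ceil(input / stride)` and `VALID` gives `floor((input - ksize) / stride) + 1`. The helper names are made up for this illustration and do not appear in the scripts.

```python
import math


def conv_same(size, stride):
    # "SAME" padding: output size is ceil(input / stride).
    return math.ceil(size / stride)


def pool_valid(size, ksize, stride):
    # "VALID" padding: output size is floor((input - ksize) / stride) + 1.
    return (size - ksize) // stride + 1


def alexnet_spatial_size(size=224):
    size = conv_same(size, 4)       # conv1, stride 4
    size = pool_valid(size, 3, 2)   # pool1, 3x3 window, stride 2
    size = conv_same(size, 1)       # conv2, stride 1
    size = pool_valid(size, 3, 2)   # pool2
    for _ in range(3):              # conv3, conv4, conv5, all stride 1
        size = conv_same(size, 1)
    size = pool_valid(size, 3, 2)   # pool5
    return size


side = alexnet_spatial_size(224)
print(side, side * side * 256)
```

Feeding the raw 32x32 CIFAR-10 images through the same layers would shrink the feature map to nothing before `pool5`, which is one reason the scripts resize every image to 224x224 first.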

Testing:

    import cv2
    import numpy as np
    import tensorflow as tf  # TensorFlow 1.x API (placeholders, sessions)

    # Convert the given labels to one-hot form.
    def getOneHotLabel(label, depth):
        m = np.zeros([len(label), depth])
        for i in range(len(label)):
            m[i][label[i]] = 1
        return m

    # Build the neural network. This must match the training script exactly so that
    # the checkpoint variables line up.
    def alexnet(image, keepprob=0.5):
        # Conv layer 1. Kernel size, bias and the other parameters are given in the code; same below.
        with tf.name_scope("conv1") as scope:
            kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(image, kernel, [1, 4, 4, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[64]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv1 = tf.nn.relu(bias, name=scope)

        # LRN layer
        lrn1 = tf.nn.lrn(conv1, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name="lrn1")

        # Max pooling layer
        pool1 = tf.nn.max_pool(lrn1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool1")

        # Conv layer 2
        with tf.name_scope("conv2") as scope:
            kernel = tf.Variable(tf.truncated_normal([5, 5, 64, 192], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[192]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv2 = tf.nn.relu(bias, name=scope)

        # LRN layer
        lrn2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name="lrn2")

        # Max pooling layer
        pool2 = tf.nn.max_pool(lrn2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool2")

        # Conv layer 3
        with tf.name_scope("conv3") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 192, 384], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[384]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv3 = tf.nn.relu(bias, name=scope)

        # Conv layer 4
        with tf.name_scope("conv4") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[256]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv4 = tf.nn.relu(bias, name=scope)

        # Conv layer 5
        with tf.name_scope("conv5") as scope:
            kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32,
                                                     stddev=1e-1, name="weights"))
            conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding="SAME")
            biases = tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[256]),
                                 trainable=True, name="biases")
            bias = tf.nn.bias_add(conv, biases)
            conv5 = tf.nn.relu(bias, name=scope)

        # Max pooling layer
        pool5 = tf.nn.max_pool(conv5, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding="VALID", name="pool5")

        # Fully connected layers
        flatten = tf.reshape(pool5, [-1, 6 * 6 * 256])

        weight1 = tf.Variable(tf.truncated_normal([6 * 6 * 256, 4096], mean=0, stddev=0.01))
        fc1 = tf.nn.sigmoid(tf.matmul(flatten, weight1))
        dropout1 = tf.nn.dropout(fc1, keepprob)

        weight2 = tf.Variable(tf.truncated_normal([4096, 4096], mean=0, stddev=0.01))
        fc2 = tf.nn.sigmoid(tf.matmul(dropout1, weight2))
        dropout2 = tf.nn.dropout(fc2, keepprob)

        weight3 = tf.Variable(tf.truncated_normal([4096, 10], mean=0, stddev=0.01))
        fc3 = tf.nn.sigmoid(tf.matmul(dropout2, weight3))

        return fc3

    def alexnet_main():
        # Load the test-set file names and labels.
        # allow_pickle=True is required by recent NumPy versions to load the saved dict.
        files = np.load("test-label.npy", allow_pickle=True, encoding='bytes')[()]
        keys = [i for i in files]
        print(len(keys))

        myinput = tf.placeholder(dtype=tf.float32, shape=[None, 224, 224, 3], name='input')
        mylabel = tf.placeholder(dtype=tf.float32, shape=[None, 10], name='label')

        # The original passed keepprob=0.6 here, which keeps dropout active at test time.
        # A keep probability of 1.0 disables dropout for inference.
        myoutput = alexnet(myinput, 1.0)

        prediction = tf.argmax(myoutput, 1)
        truth = tf.argmax(mylabel, 1)
        valaccuracy = tf.reduce_mean(
            tf.cast(
                tf.equal(prediction, truth),
                tf.float32))

        saver = tf.train.Saver()

        with tf.Session() as sess:
            # Load the trained model. Adjust the path to your own setup.
            saver.restore(sess, r"model/model.ckpt")

            cnt = 0
            # Classify the 10000 test images one at a time.
            for i in range(10000):
                photo = []
                label = []
                # Same scaling (division by 225) as in the training script.
                photo.append(cv2.resize(cv2.imread(keys[i]), (224, 224)) / 225)
                label.append(files[keys[i]])
                m = getOneHotLabel(label, depth=10)
                a, b = sess.run([prediction, truth], feed_dict={myinput: photo, mylabel: m})
                print(a, ' ', b)
                if a[0] == b[0]:
                    cnt += 1

            print("Epoch ", 1, ': prediction rate: ', cnt / 10000)

    if __name__ == '__main__':
        alexnet_main()
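The test script reports a single overall rate. Since it already prints each (prediction, truth) pair, one possible follow-up is to tally accuracy per class to see which of the 10 classes pull the total down. This is only a sketch: `per_class_accuracy` is a hypothetical helper, and the sample pairs are made up rather than taken from a real run.

```python
from collections import Counter


def per_class_accuracy(pairs, num_classes=10):
    """Return {class id: accuracy} for every class that appears as a true label."""
    correct = Counter()
    total = Counter()
    for predicted, actual in pairs:
        total[actual] += 1
        if predicted == actual:
            correct[actual] += 1
    # Skip classes with no samples to avoid dividing by zero.
    return {c: correct[c] / total[c] for c in range(num_classes) if total[c]}


# Made-up (prediction, truth) pairs, for illustration only.
sample = [(0, 0), (1, 0), (2, 2), (2, 2), (3, 2)]
result = per_class_accuracy(sample)
print(result)
```

In the test loop, the pairs could be collected by appending `(int(a[0]), int(b[0]))` to a list after each `sess.run` call.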