Python scraper: Zhaopin (智联招聘) job locations

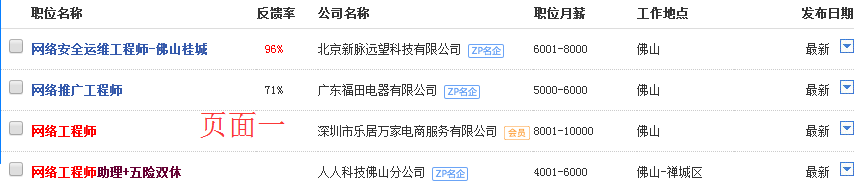

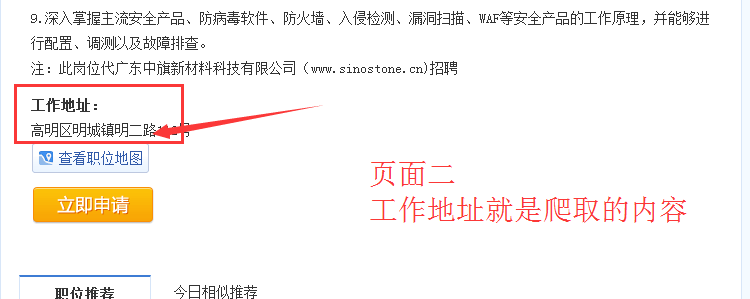

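Requirement: when looking for jobs on Zhaopin, the search results page shows the work location only as city and district, not the full address. (Jobs close to home naturally get priority.)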

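Approach: scrape the search results page (page one), then follow each job link to its detail page and scrape the work location there (page two). Obvious, I know.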
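As a rough sketch of the first step, job links can be picked out of the hrefs on the search page with a regular expression. The helper name `filter_job_links` and the sample URLs are invented for illustration. The pattern assumes detail pages end in `com/<digits>.htm`, as the script's own pattern suggests, with the dot escaped and at least one digit required.

```python
import re

# Assumption: job detail pages look like ".../com/<digits>.htm".
JOB_LINK = re.compile(r"com/[0-9]+\.htm")

def filter_job_links(hrefs):
    """Keep only hrefs that look like job detail pages (hypothetical helper)."""
    return [h for h in hrefs if h and JOB_LINK.search(h)]

# Sample hrefs, invented for illustration; None stands for an <a> without href.
sample = [
    "https://jobs.zhaopin.com/123456789.htm",
    "https://www.zhaopin.com/about.htm",
    None,
]
print(filter_job_links(sample))
```

Skipping missing hrefs explicitly avoids having to convert `None` to a string before matching.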

Libraries used: re, csv, bs4, requests

The basic functionality is working.

Next step: call a map API to calculate the distance from home.
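Once a map API has turned both addresses into coordinates, the distance itself is plain arithmetic. The sketch below uses the haversine great-circle formula and does not call any map API. The function name `haversine_km` and the coordinates are placeholders, and the result is a straight-line distance, not a travel route.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

# Placeholder coordinates; in practice they would come from a geocoding call.
home = (23.13, 113.26)
office = (23.12, 113.32)
print(round(haversine_km(*home, *office), 1), "km")
```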

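For speed, multithreading is worth considering, since most of the time is spent waiting on one HTTP request per job page.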
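One possible shape for this, as a minimal sketch: hand the per-job download to a thread pool from the standard library's `concurrent.futures`. The names `fetch_all` and `fake_fetch` are hypothetical; in real use, `fetch` would be a function that downloads and parses one detail page.

```python
from concurrent.futures import ThreadPoolExecutor

def fetch_all(urls, fetch, max_workers=8):
    """Call fetch(url) for every URL on a thread pool; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))

# Stand-in for the real download-and-parse step (no network access here).
def fake_fetch(url):
    return url.upper()

print(fetch_all(["a", "b", "c"], fake_fetch))
```

It is simplest to keep the CSV writing in the main thread, after the results come back, so that rows are never written concurrently.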

The full script below is ready to use as is!

    import csv
    import re

    import requests
    from bs4 import BeautifulSoup

    location = "广州"        # city to search in (Guangzhou)
    search = "网络工程师"    # job keyword (network engineer)
    fileName = location + "-" + search + ".csv"

    # Example of an encoded search URL:
    # https://sou.zhaopin.com/jobs/searchresult.ashx?jl=%E4%BD%9B%E5%B1%B1&kw=%E7%BD%91%E7%BB%9C%E5%B7%A5%E7%A8%8B%E5%B8%88&sm=0&p=1
    Url = "https://sou.zhaopin.com/jobs/searchresult.ashx"
    params = {"jl": location, "kw": search, "sm": 0, "p": 1}

    with open(fileName, "w", newline="") as datacsv:
        csvwriter = csv.writer(datacsv, dialect="excel")

        # Page one: the search results. requests URL-encodes the query parameters.
        res = requests.get(Url, params=params)
        res.encoding = 'utf-8'
        soup = BeautifulSoup(res.text, 'html.parser')

        # Collect the links that point to job detail pages (.../<digits>.htm).
        all_work = []
        for link in soup.find_all('a'):
            href = link.get('href')
            if href and re.search(r"com/[0-9]+\.htm", href):
                all_work.append(href)

        # Page two: visit each job's detail page.
        for i in all_work:
            work = []
            res2 = requests.get(i)
            res2.encoding = 'utf-8'
            soup2 = BeautifulSoup(res2.text, 'html.parser')

            # Keep the first word of every <h2> heading.
            for z in soup2.find_all("h2"):
                words = z.get_text().split()
                if words:
                    work.append(words[0])

            # Find the first token containing "职位月薪" (monthly salary) and
            # keep it together with the six tokens that follow it.
            work_list = soup2.get_text().split()
            for idx, y in enumerate(work_list):
                if "职位月薪" in y:
                    work.extend(work_list[idx:idx + 7])
                    csvwriter.writerow(work)
                    break
    # No explicit close() is needed: the with block closes the file.
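The least obvious part of the script is the slice that follows the "职位月薪" (monthly salary) marker. The sketch below isolates that step as a pure function so it can be tried without any network access. The name `fields_after` and the placeholder tokens are made up for illustration and say nothing about the real page layout.

```python
def fields_after(tokens, marker="职位月薪", width=7):
    """Return the first token containing marker plus the following tokens, width in total."""
    for idx, tok in enumerate(tokens):
        if marker in tok:
            return tokens[idx:idx + width]
    return []  # marker not found, so nothing would be written for this job

# Placeholder tokens standing in for the whitespace-split page text.
tokens = ["nav", "职位月薪:", "f1", "f2", "f3", "f4", "f5", "f6", "f7"]
print(fields_after(tokens))
```

Because the window is a fixed number of tokens, any change in the page text around the marker shifts what ends up in each CSV column.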