1. Download the source code

# Hadoop home

hibench.hadoop.home /opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hadoop

# The path of hadoop executable

hibench.hadoop.executable /opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/bin/hadoop

# Hadoop configuration directory

hibench.hadoop.configure.dir /etc/hadoop/conf

# The root HDFS path to store HiBench data

hibench.hdfs.master hdfs://spark-4:8020

# Hadoop release provider. Supported values: apache, cdh5, hdp

hibench.hadoop.release cdh5

4. Edit the Spark configuration file conf/spark.conf

My reference configuration:

# Spark home

hibench.spark.home /opt/cloudera/parcels/SPARK2/lib/spark2

# Spark master

# standalone mode: spark://xxx:7077

# YARN mode: yarn-client

hibench.spark.master yarn-client

# executor number and cores when running on Yarn

hibench.yarn.executor.num 1

hibench.yarn.executor.cores 2

# executor and driver memory in standalone & YARN mode

spark.executor.memory 512m

spark.driver.memory 512m

5. Edit conf/benchmarks.lst to select the modules and workloads you want to test, for example wordcount

6. Edit conf/frameworks.lst to select the framework you want to test, such as hadoop or spark
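After editing the two .lst files, it can help to confirm which entries are actually enabled. The helper below is a minimal sketch, not part of HiBench: `list_enabled` is a hypothetical name, and it assumes the files hold one entry per line with `#` marking commented-out lines (for example, an entry such as `micro.wordcount` in conf/benchmarks.lst).

```shell
# List the enabled entries of a HiBench .lst file.
# Assumption: one entry per line; lines starting with '#' are disabled.
list_enabled() {
    # Drop comment lines and blank lines, print everything else.
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$1"
}
```

Usage: `list_enabled conf/benchmarks.lst` and `list_enabled conf/frameworks.lst`, run from the HiBench directory.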

7. Prepare the data

bin/workloads/micro/wordcount/prepare/prepare.sh

8. Run the workload

bin/workloads/micro/wordcount/spark/run.sh
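The prepare and run steps above can also be chained so that the run only starts when data preparation succeeds. This is a sketch under assumptions: `run_wordcount` is a hypothetical helper, and `HIBENCH_HOME` is an assumed variable pointing at the HiBench directory (it defaults to the current directory).

```shell
# Run the wordcount prepare step, then the Spark run, stopping on the first failure.
# Assumption: the first argument (or HIBENCH_HOME) is the HiBench directory.
run_wordcount() {
    local home="${1:-${HIBENCH_HOME:-.}}"
    local prepare="$home/bin/workloads/micro/wordcount/prepare/prepare.sh"
    local run="$home/bin/workloads/micro/wordcount/spark/run.sh"
    local script

    # Fail early if either script is missing or not executable.
    for script in "$prepare" "$run"; do
        if [ ! -x "$script" ]; then
            echo "missing or not executable: $script" >&2
            return 1
        fi
    done

    # The run script is only started if prepare exits with status 0.
    "$prepare" && "$run"
}
```

Usage: `run_wordcount /path/to/HiBench`, or `cd` into the HiBench directory and call `run_wordcount` with no arguments.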

9. View the results

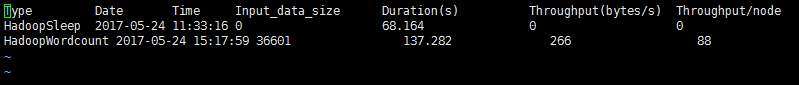

a. In HiBench/report/hibench.report, check the workload name, execution duration, data size, throughput per cluster, throughput per node, and other details.
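To pull just the key numbers out of the report, a short awk filter is enough. The sketch below assumes the report has one header row followed by whitespace-separated rows with the columns Type, Date, Time, Input_data_size, Duration(s), Throughput(bytes/s), Throughput/node; check the header of your own report first, since the layout may differ between HiBench versions. `summarize_report` is a hypothetical helper name.

```shell
# Print workload name, duration and cluster throughput for each report row.
# Assumed column order: Type Date Time Input_data_size Duration(s)
#                       Throughput(bytes/s) Throughput/node
summarize_report() {
    # NR > 1 skips the header row; NF >= 7 skips blank or truncated rows.
    awk 'NR > 1 && NF >= 7 {
        printf "%s duration=%ss throughput=%s bytes/s\n", $1, $5, $6
    }' "$1"
}
```

Usage: `summarize_report report/hibench.report`, run from the HiBench directory.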