学习笔记 | Morvan - Reinforcement Learning, Part 2: Q-learning

Q-learning

Auxiliary Material

-

Simple Reinforcement Learning with Tensorflow Part 0: Q-Learning

-

6.5 Q-Learning: Off-Policy TD Control (Sutton and Barto's Reinforcement Learning ebook)

Note

- tabular

扁平的,表格式的

- Q-learning by Morvan

Q-learning 是一种记录行为值 (Q value) 的方法, 每种在一定状态的行为都会有一个值 Q(s, a), 就是说 行为 a 在 s 状态的值是 Q(s, a).

s 在上面的探索者游戏中, 就是 o 所在的地点了. 而每一个地点探索者都能做出两个行为 left/right, 这就是探索者的所有可行的 a 啦.

- Q-learning by Wikipedia

Q-learning is a model-free reinforcement learning technique. Specifically, Q-learning can be used to find an optimal action-selection policy for any given (finite) Markov decision process (MDP). It works by learning an action-value function that ultimately gives the expected utility of taking a given action in a given state and following the optimal policy thereafter.

When such an action-value function is learned, the optimal policy can be constructed by simply selecting the action with the highest value in each state. One of the strengths of Q-learning is that it is able to compare the expected utility of the available actions without requiring a model of the environment. Additionally, Q-learning can handle problems with stochastic transitions and rewards, without requiring any adaptations.

-

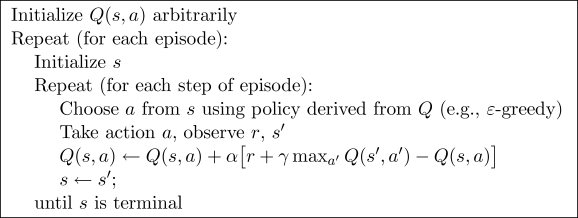

Psudocode

-

The transition rule of Q learning is a very simple formula (source):

Q(state, action) = R(state, action) + Gamma * Max[Q(next state, all actions)]

-

epsilon greedy

EPSILON 就是用来控制贪婪程度的值。EPSILON 可以随着探索时间不断提升(越来越贪婪)。

-

Why is Q-learning considered an off-policy control method? (Exercise 6.9 of Sutton and Barto book's)

If the algorithm estimates the value function of the policy generating the data, the method is called on-policy. Otherwise it is called off-policy.

if the samples used in the TD update is not generated according to your behavior policy (policy that the agent is following) then it is called off-policy learning--you can also say learning from off-policy data. (source)

Q-learning 是一个 off-policy 的算法, 因为里面的 max action 让 Q table 的更新可以不基于正在经历的经验(可以是现在学习着很久以前的经验,甚至是学习他人的经验).