学习笔记 | Morvan - Reinforcement Learning, Part 3: Sarsa

Sarsa

Notes

What is Sarsa?

-

From Wikipedia:

State-Action-Reward-State-Action (SARSA) is an algorithm for learning a Markov decision process policy, used in the reinforcement learning area of machine learning. It was introduced in a technical note [1] where the alternative name SARSA was only mentioned as a footnote.

This name simply reflects the fact that the main function for updating the Q-value depends on the current state of the agent "S1", the action the agent chooses "A1", the reward "R" the agent gets for choosing this action, the state "S2" that the agent will now be in after taking that action, and finally the next action "A2" the agent will choose in its new state. Taking every letter in the quintuple (st, at, rt, st+1, at+1) yields the word SARSA.[2]

-

Sarsa is similar to Q-Learning in updating the Q-table

-

it is an on-policy algorithm

- Sarsa updates both the state and action for the next step which explicitly finds the future reward value,

qnext, that followed, rather than assuming that the optimal action will be taken and that the largest reward,maxqnew, resulted.

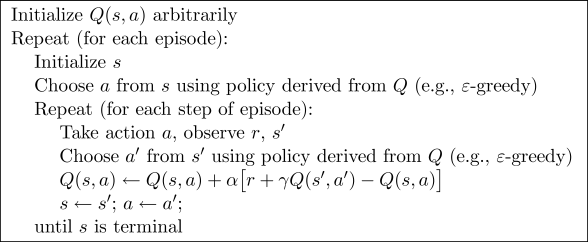

Sarsa Pseudocode

SARSA VS Q-LEARNING

-

Post: REINFORCEMENT LEARNING PART 2: SARSA VS Q-LEARNING (with code link)

- Written out, the Q-learning update policy is

Q(s, a) = reward(s) + alpha * max(Q(s')), and the SARSA update policy isQ(s, a) = reward(s) + alpha * Q(s', a'). This is how SARSA is able to take into account the control policy of the agent during learning. -

The major difference between it and Q-Learning, is that the maximum reward for the next state is not necessarily used for updating the Q-values. Instead, a new action, and therefore reward, is selected using the same policy that determined the original action. (UNSW RL Algorithm webpage)

- Q-Learning 是说到不保证做到(更新Q表但不直接用于选择行为);Sarsa是说到必做到(更新Q表的同时基于此选择下一步行为)