Collecting logs with logstash (Part 3)

I. Collecting logs with logstash and writing them to redis


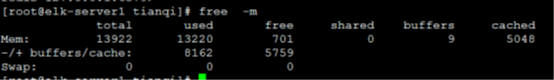

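Install and deploy redis on a dedicated server that serves only as a log cache, for scenarios where the web servers generate large volumes of logs. For example, the server below is about to run out of memory; inspection shows that redis is holding a large amount of data that has not been read yet, and that data is occupying most of the memory.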

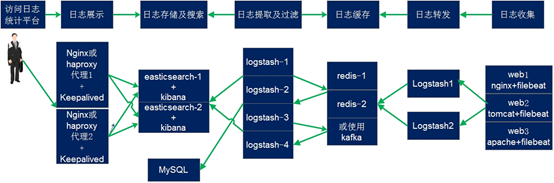

Overall architecture:

1. Deploy redis

    [root@linux-host2 ~]# cd /usr/local/src/
    [root@linux-host2 src]# tar xvf redis-3.2.8.tar.gz
    [root@linux-host2 src]# ln -sv /usr/local/src/redis-3.2.8 /usr/local/redis
    ‘/usr/local/redis’ -> ‘/usr/local/src/redis-3.2.8’
    [root@linux-host2 src]# cd /usr/local/redis/deps
    [root@linux-host2 deps]# yum install gcc
    [root@linux-host2 deps]# make geohash-int hiredis jemalloc linenoise lua
    [root@linux-host2 deps]# cd ..
    [root@linux-host2 redis]# make
    [root@linux-host2 redis]# vim redis.conf
    [root@linux-host2 redis]# grep "^[a-zA-Z]" redis.conf    # main settings changed
    bind 0.0.0.0
    protected-mode yes
    port 6379
    tcp-backlog 511
    timeout 0
    tcp-keepalive 300
    daemonize yes
    supervised no
    pidfile /var/run/redis_6379.pid
    loglevel notice
    logfile ""
    databases 16
    save ""
    rdbcompression no    # whether to compress the RDB file
    rdbchecksum no    # whether to checksum the RDB file
    [root@linux-host2 redis]# ln -sv /usr/local/redis/src/redis-server /usr/bin/
    ‘/usr/bin/redis-server’ -> ‘/usr/local/redis/src/redis-server’
    [root@linux-host2 redis]# ln -sv /usr/local/redis/src/redis-cli /usr/bin/
    ‘/usr/bin/redis-cli’ -> ‘/usr/local/redis/src/redis-cli’

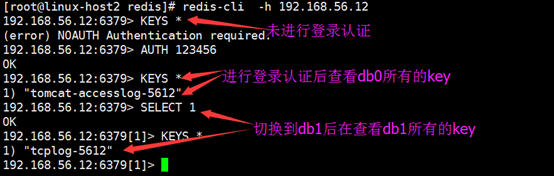

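2. Set a redis access password

For security, a redis connection password must be set in production:

    [root@linux-host2 redis]# redis-cli
    127.0.0.1:6379> config set requirepass 123456    # set dynamically; lost after a restart
    OK

    requirepass 123456    # permanent setting, in the redis.conf configuration file

3. Start and test the redis service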

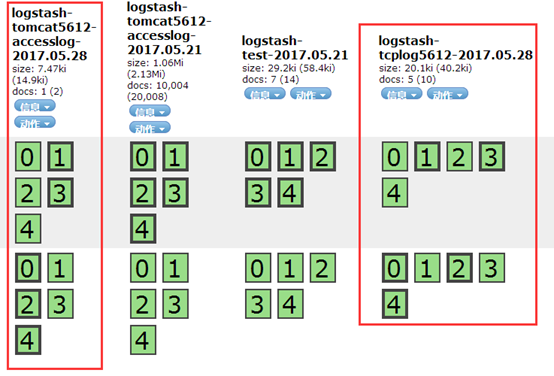

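    [root@linux-host2 redis]# redis-server /usr/local/redis/redis.conf    # start the service
    [root@linux-host2 redis]# redis-cli
    127.0.0.1:6379> ping
    PONG

4. Configure logstash to write logs to redis

The logstash instance on the tomcat server collects the tomcat access log and writes it to the redis server; a separate logstash instance then reads the data out of redis and writes it to the elasticsearch server.

Official documentation: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-redis.html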

    [root@linux-host2 tomcat]# cat /etc/logstash/conf.d/tomcat_tcp.conf
    input {
      file {
        path => "/usr/local/tomcat/logs/tomcat_access_log.*.log"
        type => "tomcat-accesslog-5612"
        start_position => "beginning"
        stat_interval => "2"
      }
      tcp {
        port => 7800
        mode => "server"
        type => "tcplog-5612"
      }
    }
    output {
      if [type] == "tomcat-accesslog-5612" {
        redis {
          data_type => "list"
          key => "tomcat-accesslog-5612"
          host => "192.168.56.12"
          port => "6379"
          db => "0"
          password => "123456"
        }
      }
      if [type] == "tcplog-5612" {
        redis {
          data_type => "list"
          key => "tcplog-5612"
          host => "192.168.56.12"
          port => "6379"
          db => "1"
          password => "123456"
        }
      }
    }
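The buffering role that redis plays here can be sketched in a few lines. With `data_type => "list"`, the logstash redis output appends each event to the tail of the list (RPUSH), and the redis input on the reading side pops from the head (BLPOP), so the list behaves as a first-in, first-out queue. The sketch below models that behaviour with an in-memory `deque` and does not talk to a real server; `FakeRedisList`, `ship`, and `drain` are hypothetical names used only for illustration.

```python
import json
from collections import deque


class FakeRedisList:
    """In-memory stand-in for a single redis list (illustration only)."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        # RPUSH appends to the tail and returns the new length
        self._items.append(value)
        return len(self._items)

    def lpop(self):
        # LPOP removes from the head; None when the list is empty
        return self._items.popleft() if self._items else None

    def llen(self):
        return len(self._items)


def ship(queue, event):
    """Producer side: serialise an event as JSON and append it."""
    return queue.rpush(json.dumps(event))


def drain(queue):
    """Consumer side: pop events in arrival order until the list is empty."""
    events = []
    while True:
        raw = queue.lpop()
        if raw is None:
            break
        events.append(json.loads(raw))
    return events


if __name__ == "__main__":
    q = FakeRedisList()
    ship(q, {"type": "tomcat-accesslog-5612", "message": "first"})
    ship(q, {"type": "tomcat-accesslog-5612", "message": "second"})
    print(q.llen())
    print([e["message"] for e in drain(q)])
    print(q.llen())
```

If the consumer stops draining, `llen` keeps growing, which is exactly the memory problem described at the start of this article.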

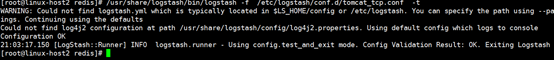

5. Check that the logstash configuration file is valid

    [root@linux-host2 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/tomcat_tcp.conf

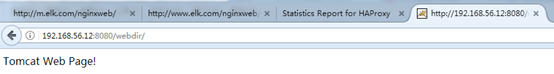

6. Access the tomcat web interface and generate log entries

    [root@linux-host1 ~]# echo "pseudo device 1" > /dev/tcp/192.168.56.12/7800

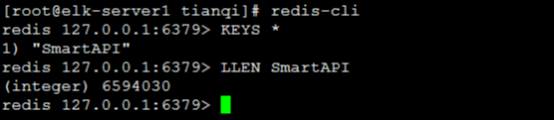

7. Verify that redis contains data
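Besides checking interactively with `redis-cli`, the same verification can be scripted. The following is a minimal sketch, assuming the redis-py package (`redis`) is installed and using the host, password, databases, and keys from the logstash output above; `pending_counts`, `connect_real`, and the `--live` switch are hypothetical names introduced for this example.

```python
def pending_counts(connect, targets):
    """Return {(db, key): length} for each (db, key) pair.

    `connect` is any callable that takes a db number and returns an object
    with an llen(key) method, for example a redis.Redis client.
    """
    counts = {}
    for db, key in targets:
        counts[(db, key)] = connect(db).llen(key)
    return counts


def connect_real(db):
    # Imported lazily so pending_counts can be exercised without redis-py
    import redis
    return redis.Redis(host="192.168.56.12", port=6379, db=db, password="123456")


# The two lists written by the logstash output: (db, key)
TARGETS = [(0, "tomcat-accesslog-5612"), (1, "tcplog-5612")]

if __name__ == "__main__":
    import sys
    if "--live" in sys.argv:  # only contact the real server when asked to
        for (db, key), n in pending_counts(connect_real, TARGETS).items():
            print("db%d %s: %d entries" % (db, key, n))
```

A length greater than zero for both keys indicates that the file input and the tcp input are both reaching redis.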

8. Configure another logstash server to read the data from redis

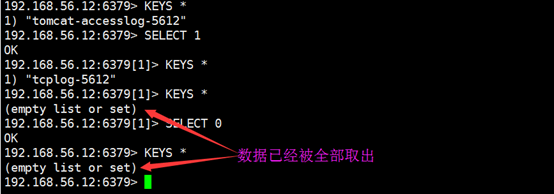

Configure a dedicated logstash server to read the data for the specified keys from redis and write it to elasticsearch.

    [root@linux-host1 conf.d]# cat /etc/logstash/conf.d/redis-to-els.conf
    input {
      redis {
        data_type => "list"
        key => "tomcat-accesslog-5612"
        host => "192.168.56.12"
        port => "6379"
        db => "0"
        password => "123456"
        codec => "json"
      }
      redis {
        data_type => "list"
        key => "tcplog-5612"
        host => "192.168.56.12"
        port => "6379"
        db => "1"
        password => "123456"
      }
    }
    output {
      if [type] == "tomcat-accesslog-5612" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "logstash-tomcat5612-accesslog-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "tcplog-5612" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "logstash-tcplog5612-%{+YYYY.MM.dd}"
        }
      }
    }
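The `%{+YYYY.MM.dd}` suffix in the index names is filled in from each event's `@timestamp`, which logstash formats in UTC, so one index is created per UTC day. The sketch below reproduces that naming rule to show which index an event lands in; `daily_index` is a hypothetical helper, not part of logstash.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix, timestamp):
    """Build the daily index name for an event, e.g. prefix-2017.04.28."""
    # Convert to UTC first, because the date part is taken from the UTC timestamp
    utc = timestamp.astimezone(timezone.utc)
    return "%s-%s" % (prefix, utc.strftime("%Y.%m.%d"))


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))
    # 21:16 at +08:00 is 13:16 UTC on the same day
    print(daily_index("logstash-tomcat5612-accesslog",
                      datetime(2017, 4, 28, 21, 16, 46, tzinfo=cst)))
    # 07:00 at +08:00 is still the previous day in UTC
    print(daily_index("logstash-tcplog5612",
                      datetime(2017, 4, 29, 7, 0, 0, tzinfo=cst)))
```

For a server running at +08:00, events logged before 08:00 local time therefore go into the index of the previous day.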

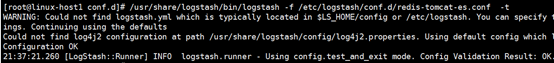

9. Test logstash

[root@linux-host1 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-to-els.conf

10. Verify that the data has been consumed from redis

11. Verify the data in the head plugin

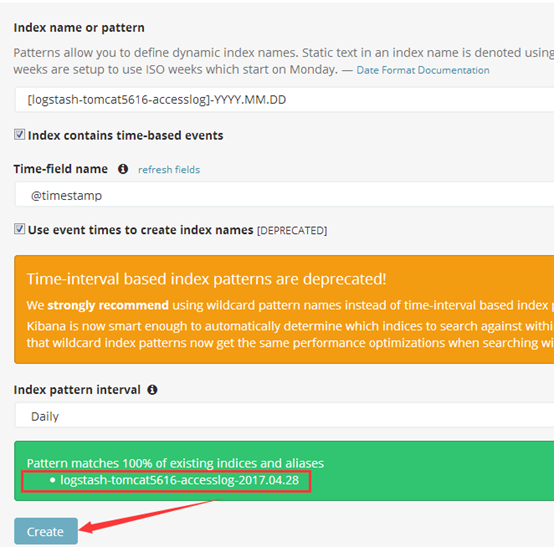

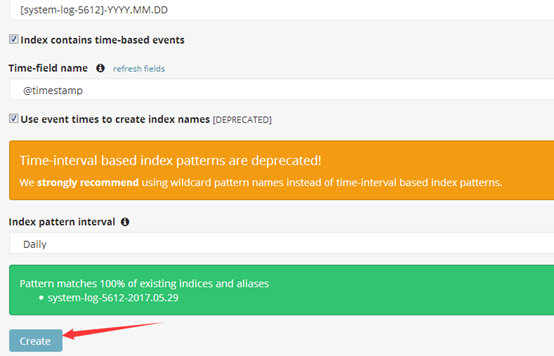

12. Add the tomcat access log index in kibana

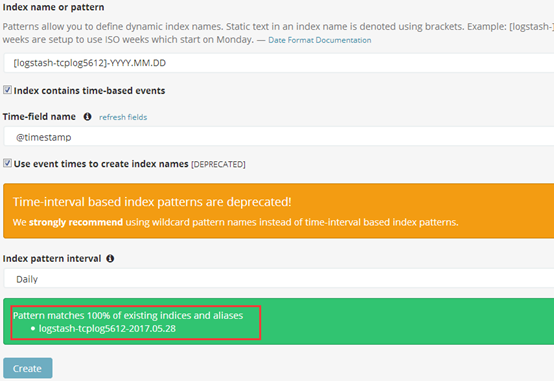

14.kibana添加tcp日志索引

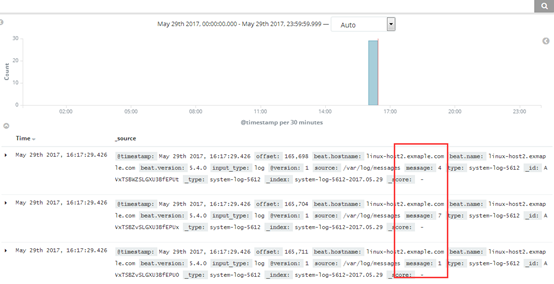

15.kibana验证tomcat访问日志

16.kibana验证tomcat访问日志

17.kibana 验证tcp日志

Note: once testing shows no problems, start logstash normally as a service.

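II. Using filebeat instead of logstash to collect logs

Filebeat is a lightweight, single-purpose log collector intended for servers that do not have java installed. It can forward logs to logstash, elasticsearch, redis, and similar destinations for further processing.

Official download page: https://www.elastic.co/downloads/beats/filebeat

Official documentation: https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-configuration-details.html

1. Confirm that the logs are in JSON format

First access the web server to generate some log entries, then confirm that they are in JSON format, because the following sections rely on it: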

[root@linux-host2 ~]# ab -n100 -c100 http://192.168.56.16:8080/web

2. Check the log format; the logs are used for statistics later

[root@linux-host2 ~]# tail /usr/local/tomcat/logs/localhost_access_log.2017-04-28.txt

{"clientip":"192.168.56.15","ClientUser":"-","authenticated":"-","AccessTime":"[28/Apr/2017:21:16:46 +0800]","method":"GET /webdir/ HTTP/1.0","status":"200","SendBytes":"12","Query?string":"","partner":"-","AgentVersion":"ApacheBench/2.3"}

{"clientip":"192.168.56.15","ClientUser":"-","authenticated":"-","AccessTime":"[28/Apr/2017:21:16:46 +0800]","method":"GET /webdir/ HTTP/1.0","status":"200","SendBytes":"12","Query?string":"","partner":"-","AgentVersion":"ApacheBench/2.3"}
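Since later steps depend on every line being valid JSON, it can help to check this programmatically rather than by eye. The sketch below parses each line, reports the line numbers that fail to parse, and counts requests per `status` field as a first taste of the statistics mentioned above. `status_counts` is a hypothetical helper; the sample line is copied from the log output shown here.

```python
import json
from collections import Counter


def status_counts(lines):
    """Parse access-log lines as JSON and count them by HTTP status.

    Returns (counter, bad_line_numbers); blank lines are skipped.
    """
    counts = Counter()
    bad = []
    for number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except ValueError:
            bad.append(number)  # not valid JSON
            continue
        if not isinstance(record, dict):
            bad.append(number)  # valid JSON, but not an object
            continue
        counts[record.get("status", "unknown")] += 1
    return counts, bad


SAMPLE = ('{"clientip":"192.168.56.15","ClientUser":"-","authenticated":"-",'
          '"AccessTime":"[28/Apr/2017:21:16:46 +0800]","method":"GET /webdir/ HTTP/1.0",'
          '"status":"200","SendBytes":"12","Query?string":"","partner":"-",'
          '"AgentVersion":"ApacheBench/2.3"}')

if __name__ == "__main__":
    counts, bad = status_counts([SAMPLE, SAMPLE, "not json"])
    print(dict(counts), bad)
```

To run it against a real file, pass an open file object as `lines`, for example `status_counts(open(path))`.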

3. Install and configure filebeat

    [root@linux-host2 ~]# systemctl stop logstash    # stop the logstash service (if it is installed)
    [root@linux-host2 src]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.3.2-x86_64.rpm
    [root@linux-host2 src]# yum install filebeat-5.3.2-x86_64.rpm -y

4. Configure filebeat to collect system logs

    [root@linux-host2 ~]# cd /etc/filebeat/
    [root@linux-host2 filebeat]# cp filebeat.yml filebeat.yml.bak    # back up the original configuration file

4.1 Collect multiple system logs with filebeat and write them to a local file

    [root@linux-host2 ~]# grep -v "#" /etc/filebeat/filebeat.yml | grep -v "^$"
    filebeat.prospectors:
    - input_type: log
      paths:
        - /var/log/messages
        - /var/log/*.log
      exclude_lines: ["^DBG","^$"]    # lines matching these patterns are not collected
      #include_lines: ["^ERR", "^WARN"]    # only lines matching these patterns are collected
      document_type: system-log-5612    # type; a marker inserted into every log entry
    output.file:
      path: "/tmp"
      filename: "filebeat.txt"
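How `include_lines` and `exclude_lines` interact is easy to get wrong: when both are set, filebeat applies `include_lines` first and then `exclude_lines`. The sketch below imitates that order with Python's `re` module so the patterns can be tried on sample lines before editing `filebeat.yml`. It is only an approximation, since filebeat uses its own regular expression engine, and `keep_line` is a hypothetical helper.

```python
import re


def keep_line(line, include=None, exclude=None):
    """Return True if a log line would be shipped.

    include and exclude are lists of regular expressions; include is
    applied first, then exclude.
    """
    if include and not any(re.search(p, line) for p in include):
        return False
    if exclude and any(re.search(p, line) for p in exclude):
        return False
    return True


if __name__ == "__main__":
    lines = ["DBG verbose detail", "", "ERR disk full", "INFO service started"]
    exclude = ["^DBG", "^$"]
    # Only exclude_lines set, as in the configuration above
    print([l for l in lines if keep_line(l, exclude=exclude)])
    # With the commented-out include_lines enabled as well
    print([l for l in lines if keep_line(l, include=["^ERR", "^WARN"], exclude=exclude)])
```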

4.2 Start the filebeat service and verify that the local file contains data

[root@linux-host2 filebeat]# systemctl start filebeat

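5. Collect a single log type with filebeat and write it to redis

Filebeat can write data directly to a redis server; this step writes to a single key in redis. Filebeat can also write to elasticsearch, logstash, and other servers.

5.1 filebeat configuration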

    [root@linux-host2 ~]# grep -v "#" /etc/filebeat/filebeat.yml | grep -v "^$"
    filebeat.prospectors:
    - input_type: log
      paths:
        - /var/log/messages
        - /var/log/*.log
      exclude_lines: ["^DBG","^$"]
      document_type: system-log-5612
    output.redis:
      hosts: ["192.168.56.12:6379"]
      key: "system-log-5612"    # a custom key name is recommended to ease later log processing
      db: 1    # which redis database to use
      timeout: 5    # timeout in seconds
      password: "123456"    # redis password

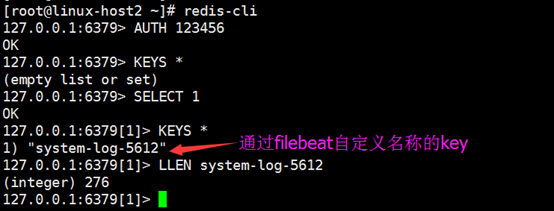

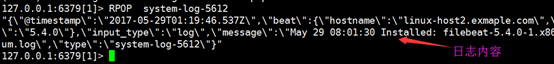

5.2 Verify that redis contains data

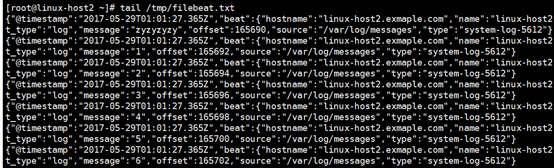

5.3 Inspect the log data in redis

Make sure the db you select is the same one filebeat writes to.

5.4 Configure logstash to read the above logs from redis

    [root@linux-host1 ~]# cat /etc/logstash/conf.d/redis-systemlog-es.conf
    input {
      redis {
        host => "192.168.56.12"
        port => "6379"
        db => "1"
        key => "system-log-5612"
        data_type => "list"
        password => "123456"
      }
    }
    output {
      if [type] == "system-log-5612" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "system-log-5612"
        }
      }
    }

    [root@linux-host1 ~]# systemctl restart logstash    # restart the logstash service

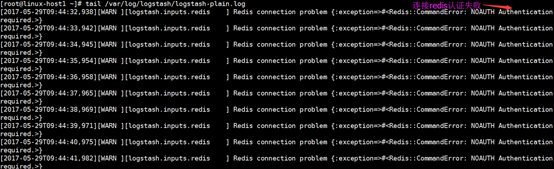

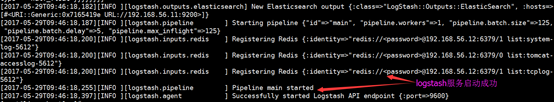

5.5 Check the logstash service log

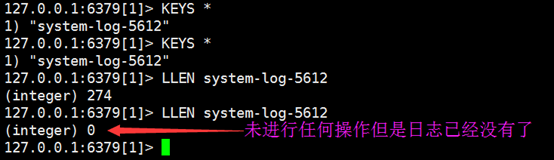

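5.6 Check whether redis still holds data

5.7 Verify in the elasticsearch head plugin that the index was created

5.8 Add the index in the kibana interface

5.9 Verify the system log in kibana

6. Monitor the redis list length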

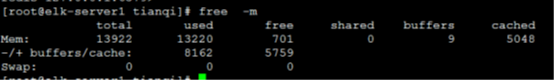

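In a real environment, a large amount of data can pile up in redis when logstash, for whatever reason, fails to pull the logs in time. The redis server's memory is then heavily consumed, and it can even come close to exhaustion, as shown below:

Checking the length of the log queue in redis shows that a large number of log entries have accumulated there:

6.1 Script contents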

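    #!/usr/bin/env python
    # coding: utf-8
    # Author Zhang jie
    import redis

    def redis_conn():
        # Connect to the redis server that buffers the logs (db 0 holds the tomcat access log list)
        pool = redis.ConnectionPool(host="192.168.56.12", port=6379, db=0, password="123456")
        conn = redis.Redis(connection_pool=pool)
        # LLEN returns the number of entries still waiting in the list
        data = conn.llen('tomcat-accesslog-5612')
        print(data)

    redis_conn()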
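The script above only prints the length. For use with a monitoring system, it is common to turn the number into a status and an exit code. The following is a minimal sketch of that idea, assuming the widespread 0/1/2 convention for OK, WARNING, and CRITICAL; the thresholds, the `--live` switch, and the names `check_backlog` and `read_length` are hypothetical and should be adapted to the actual environment.

```python
def check_backlog(length, warn=10000, crit=50000):
    """Classify a list length as OK, WARNING or CRITICAL.

    Returns (exit_code, message). The default thresholds are
    placeholders, not recommendations.
    """
    if length >= crit:
        return 2, "CRITICAL: %d entries queued" % length
    if length >= warn:
        return 1, "WARNING: %d entries queued" % length
    return 0, "OK: %d entries queued" % length


def read_length():
    # Imported lazily so check_backlog can be tested without redis-py
    import redis
    conn = redis.Redis(host="192.168.56.12", port=6379, db=0, password="123456")
    return conn.llen("tomcat-accesslog-5612")


if __name__ == "__main__":
    import sys
    if "--live" in sys.argv:  # hypothetical flag: query the real server
        code, message = check_backlog(read_length())
        print(message)
        sys.exit(code)
    # Without --live, just show how a few sample lengths are classified
    for sample in (120, 10000, 75000):
        print(check_backlog(sample))
```

Keeping the classification separate from the redis call makes the thresholds easy to test and lets the same function serve several keys.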