5. Collecting Logs with Logstash (Part 1)

1. Collecting a single system log and writing it to a file

Prerequisite: the logstash user must be able to read the log file being collected and write to the output file.

Edit the Logstash settings file (/etc/logstash/logstash.yml) so that it loads every pipeline file in conf.d:

    path.config: /etc/logstash/conf.d/*

1.1 Logstash pipeline configuration

    [root@linux-node1 ~]# cat /etc/logstash/conf.d/system-log.conf
    input {
      file {
        type => "messagelog"
        path => "/var/log/messages"
        start_position => "beginning"
      }
    }

    output {
      file {
        path => "/tmp/%{type}.%{+yyyy.MM.dd}"
      }
    }

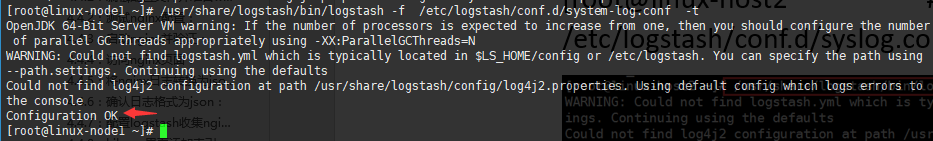
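The output file name is built from the event itself. Below is a simplified Python model of how Logstash expands %{type} and %{+yyyy.MM.dd} in the path. It is a sketch, not Logstash's own implementation. render_path is a hypothetical helper, and only the yyyy, MM and dd date tokens are handled. Like Logstash, it assumes date references are formatted from @timestamp in UTC.

```python
import re
from datetime import datetime, timedelta, timezone

# Matches %{field} and %{+date-format} references in a Logstash-style string.
_REF = re.compile(r"%\{(\+?)([^}]+)\}")


def render_path(template, event):
    """Simplified model of Logstash's sprintf expansion (hypothetical helper).

    Only the yyyy, MM and dd date tokens are supported.
    """
    def expand(match):
        is_date, body = match.group(1), match.group(2)
        if is_date:
            # Date references are formatted from @timestamp, converted to UTC.
            ts = event["@timestamp"].astimezone(timezone.utc)
            return (body.replace("yyyy", f"{ts.year:04d}")
                        .replace("MM", f"{ts.month:02d}")
                        .replace("dd", f"{ts.day:02d}"))
        # A field the event does not have is left as the literal reference.
        return str(event.get(body, match.group(0)))

    return _REF.sub(expand, template)


event = {"type": "messagelog",
         "@timestamp": datetime(2017, 11, 7, 4, 3, tzinfo=timezone.utc)}
print(render_path("/tmp/%{type}.%{+yyyy.MM.dd}", event))  # /tmp/messagelog.2017.11.07

# An event read at 07:00 China Standard Time (UTC+8) is still 6 Nov in UTC.
cst = timezone(timedelta(hours=8))
early = {"type": "messagelog", "@timestamp": datetime(2017, 11, 7, 7, 0, tzinfo=cst)}
print(render_path("/tmp/%{type}.%{+yyyy.MM.dd}", early))  # /tmp/messagelog.2017.11.06
```

The second call shows why, on a server running in UTC+8, events read before 08:00 local time land in the previous day's file.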

1.2 Check that the configuration syntax is correct (this takes a while)

    /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/system-log.conf -t

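Tip: type the configuration file path yourself (tab completion helps) rather than copying it. Otherwise Logstash may report that it cannot find the file.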

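    systemctl restart logstash.service

Restart the service after every configuration change. This is a test environment, so I use restart here; in production, a reload is preferable.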

1.3 Generate data and verify

    echo 123 >> /var/log/messages

Nothing seems to happen.

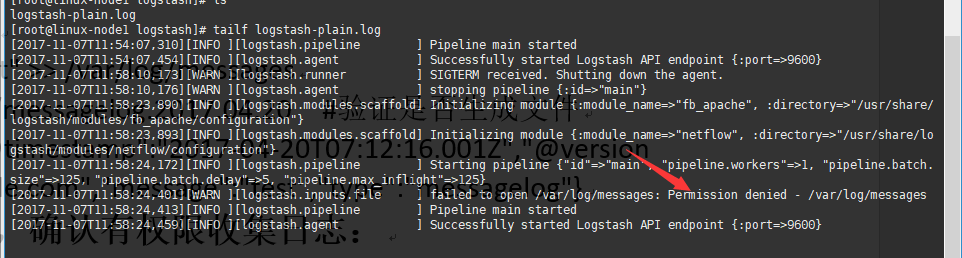

1.4 Checking the Logstash log is the most important step

    tail /var/log/logstash/logstash-plain.log

    [root@linux-node1 logstash]# tailf logstash-plain.log
    [2017-11-07T11:54:07,310][INFO ][logstash.pipeline ] Pipeline main started
    [2017-11-07T11:54:07,454][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    [2017-11-07T11:58:10,173][WARN ][logstash.runner ] SIGTERM received. Shutting down the agent.
    [2017-11-07T11:58:10,176][WARN ][logstash.agent ] stopping pipeline {:id=>"main"}
    [2017-11-07T11:58:23,890][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/usr/share/logstash/modules/fb_apache/configuration"}
    [2017-11-07T11:58:23,893][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/usr/share/logstash/modules/netflow/configuration"}
    [2017-11-07T11:58:24,172][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}
    [2017-11-07T11:58:24,401][WARN ][logstash.inputs.file ] failed to open /var/log/messages: Permission denied - /var/log/messages
    [2017-11-07T11:58:24,413][INFO ][logstash.pipeline ] Pipeline main started
    [2017-11-07T11:58:24,459][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
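When the log gets long, it helps to keep only the warnings and errors. Here is a minimal Python sketch that does this. It assumes the [timestamp][LEVEL ][logger ] message layout shown above. problems is a hypothetical helper, and the sample lines are copied from the log above, with one shortened.

```python
import re

# One logstash-plain.log entry: [timestamp][LEVEL ][logger ] message
_ENTRY = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[(?P<level>[A-Z]+)\s*\]\[(?P<logger>[^\]]+)\]\s*(?P<msg>.*)$"
)


def problems(lines, levels=("WARN", "ERROR")):
    """Return (timestamp, level, logger, message) for entries at the given levels."""
    found = []
    for line in lines:
        m = _ENTRY.match(line.strip())
        if m and m.group("level") in levels:
            found.append((m.group("ts"), m.group("level"),
                          m.group("logger").strip(), m.group("msg")))
    return found


sample = [
    '[2017-11-07T11:58:24,172][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main"}',
    "[2017-11-07T11:58:24,401][WARN ][logstash.inputs.file ] failed to open /var/log/messages: Permission denied - /var/log/messages",
    "[2017-11-07T11:58:24,413][INFO ][logstash.pipeline ] Pipeline main started",
]
for entry in problems(sample):
    print(entry)
```

On the server, you can pass an open file instead of a list. For example, call problems(f) inside a with open("/var/log/logstash/logstash-plain.log") as f: block.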

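The WARN entry shows the cause: Logstash could not open /var/log/messages (Permission denied). Grant read permission on the file:

    chmod 644 /var/log/messages

The output file is then created:

    [root@linux-node1 logstash]# ls -l /tmp/messagelog.2017.11.07
    -rw-r--r--. 1 logstash logstash 2899901 Nov 7 12:03 /tmp/messagelog.2017.11.07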
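Suppose the logstash user is neither the owner of the file nor a member of its group. Then only the "other" read bit decides whether it can open the file. This hypothetical Python check shows what chmod 644 changes. It looks only at that bit. Every directory on the path must also be searchable (x bit) by the logstash user.

```python
import os
import stat


def others_can_read_mode(mode):
    """True if the 'other' read bit (the trailing 4 in 644) is set in an st_mode value."""
    return bool(mode & stat.S_IROTH)


def others_can_read(path):
    """Check the 'other' read bit of an existing file."""
    return others_can_read_mode(os.stat(path).st_mode)


print(others_can_read_mode(0o100600))  # False: rw-------, logstash cannot read it
print(others_can_read_mode(0o100644))  # True:  rw-r--r--, after chmod 644
```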

2. Collecting multiple log files with Logstash

    [root@linux-node1 logstash]# cat /etc/logstash/conf.d/system-log.conf
    input {
      file {
        path => "/var/log/messages"      # log file path
        type => "systemlog"              # event type, used to tell the inputs apart
        start_position => "beginning"    # where to start reading the first time the file is seen
        stat_interval => 3               # how often (in seconds) to check the file for changes
      }
      file {
        path => "/var/log/secure"
        type => "securelog"
        start_position => "beginning"
        stat_interval => 3
      }
    }

    output {
      if [type] == "systemlog" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "system-log-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "securelog" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "secury-log-%{+YYYY.MM.dd}"
        }
      }
    }
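The conditionals in the output section route each event by its type field. Here is a small Python model of that routing. target_index and INDEX_BY_TYPE are hypothetical names, and dates are formatted in UTC, as Logstash does.

```python
from datetime import datetime, timezone

# Mirrors the two `if [type] == ...` branches of the output section above.
INDEX_BY_TYPE = {
    "systemlog": "system-log-{date}",
    "securelog": "secury-log-{date}",
}


def target_index(event):
    """Return the index an event would be written to, or None if no branch matches."""
    template = INDEX_BY_TYPE.get(event.get("type"))
    if template is None:
        return None  # no conditional matched, so no output receives the event
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    return template.format(date=day)


ts = datetime(2017, 11, 7, 4, 0, tzinfo=timezone.utc)
print(target_index({"type": "systemlog", "@timestamp": ts}))  # system-log-2017.11.07
print(target_index({"type": "securelog", "@timestamp": ts}))  # secury-log-2017.11.07
print(target_index({"type": "other", "@timestamp": ts}))      # None
```

An event whose type matches neither branch is silently dropped by this output section. A final else branch can catch such events if needed.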

    [root@linux-node1 logstash]# systemctl restart logstash.service   # a restart is required

Grant read permission on the secure log and write some test entries:

    chmod 644 /var/log/secure
    echo "test" >> /var/log/secure
    echo "test" >> /var/log/messages

Check the indices at http://192.168.56.11:9100 (the elasticsearch-head plugin). They have been created.

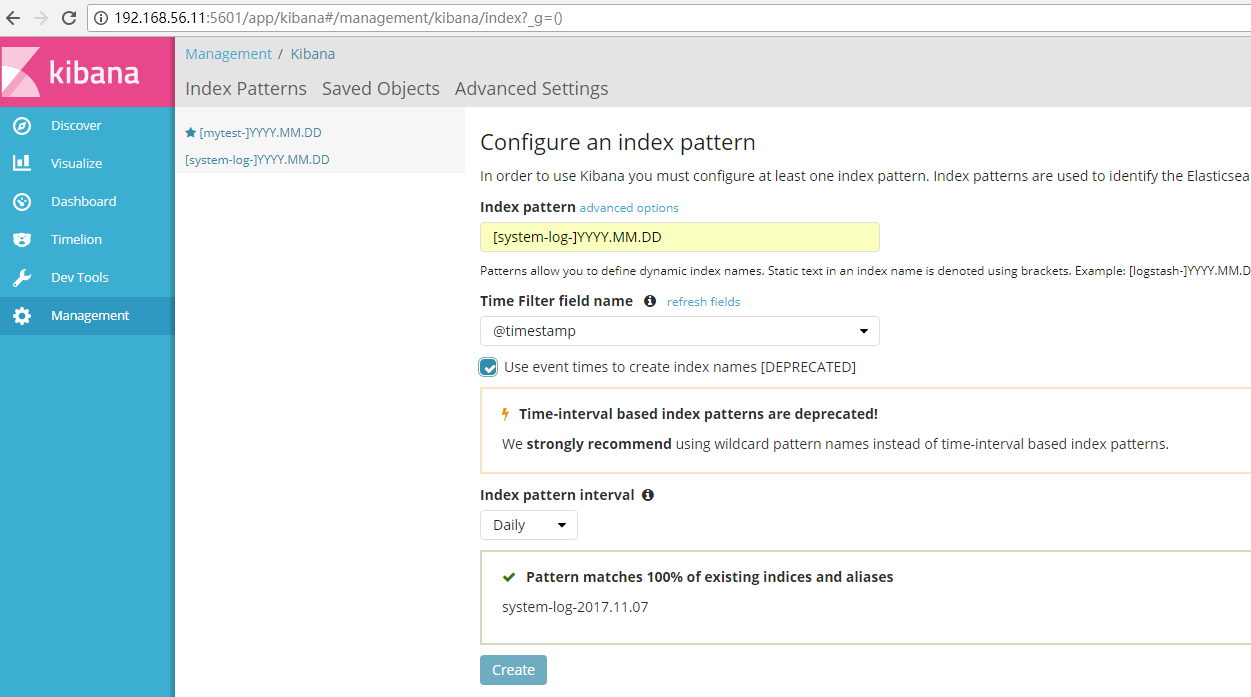

2.1 Add the system-log index in Kibana

3. Collecting Tomcat and Java logs with Logstash

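The goal is to collect the Tomcat access and error logs for real-time statistics, and to search and display them in Kibana. Every Tomcat server runs its own Logstash instance. It collects the logs and forwards them to Elasticsearch for analysis, and Kibana presents them. The setup is as follows.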

3.1 Deploy Tomcat on the server

Install a Java runtime, then create a custom web page for testing:

    yum install jdk-8u121-linux-x64.rpm
    cd /usr/local/src
    wget http://mirrors.shuosc.org/apache/tomcat/tomcat-8/v8.5.23/bin/apache-tomcat-8.5.23.tar.gz
    tar xf apache-tomcat-8.5.23.tar.gz
    mv apache-tomcat-8.5.23 /usr/local/
    ln -s /usr/local/apache-tomcat-8.5.23/ /usr/local/tomcat
    cd /usr/local/tomcat/webapps/
    mkdir /usr/local/tomcat/webapps/webdir
    echo "Tomcat Page" > /usr/local/tomcat/webapps/webdir/index.html
    ../bin/catalina.sh start
    # [root@linux-node1 webapps]# netstat -plunt | grep 8080
    # tcp6 0 0 :::8080 :::* LISTEN 19879/java

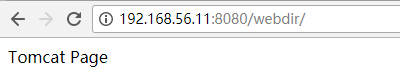

Check that the page is reachable:

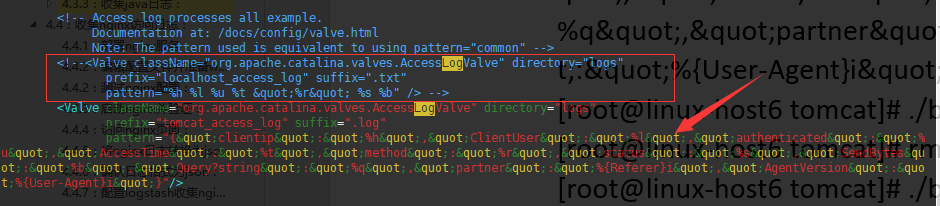

3.2 Switch the Tomcat access log to JSON

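    vim /usr/local/tomcat/conf/server.xml

Replace the AccessLogValve in the Host element with the following. The double quotes inside the pattern attribute must be written as &quot;. Otherwise server.xml is not valid XML and Tomcat fails to start.

    <Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
           prefix="tomcat_access_log" suffix=".log"
           pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>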

Restart Tomcat with an empty log directory, then watch the new access log:

    cd /usr/local/tomcat
    ./bin/catalina.sh stop
    rm -rf /usr/local/tomcat/logs/*
    ./bin/catalina.sh start
    # tailf /usr/local/tomcat/logs/tomcat_access_log.2017-11-07.log

Verify that each log line is valid JSON.

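How do you get the client IP out of a log line?

I won't go into that here; basic Python or JavaScript is enough to do it.

Once parsed, a line is a dict in Python or an object in JavaScript.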
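Still, here is a minimal Python sketch of both checks. A line is valid JSON if json.loads accepts it, and the client IP is then simply the clientip key of the resulting dict. parse_access_line is a hypothetical helper. The sample line is made up to match the server.xml pattern above.

```python
import json


def parse_access_line(line):
    """Parse one JSON access-log line into a dict; return None if it is not valid JSON."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None
    return record if isinstance(record, dict) else None


# Hypothetical sample line in the format configured in server.xml above.
sample = ('{"clientip":"192.168.56.1","ClientUser":"-","authenticated":"-",'
          '"AccessTime":"[07/Nov/2017:12:10:00 +0800]","method":"GET /webdir/ HTTP/1.1",'
          '"status":"200","SendBytes":"12","Query?string":"","partner":"-",'
          '"AgentVersion":"curl/7.29.0"}')

record = parse_access_line(sample)
print(record is not None)                     # True: the line is valid JSON
print(record["clientip"])                     # 192.168.56.1
print(parse_access_line("GET /webdir/ 200"))  # None: not JSON
```

The helper returns None instead of raising. If a header value (such as User-Agent) ever contains a double quote that the valve does not escape, that line may not be valid JSON.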

3.3 Install Logstash on the Tomcat server to collect the Tomcat and system logs:

Tomcat must already be deployed. Install and configure Logstash on the same host.

    # cat /etc/logstash/conf.d/tomcat.conf
    input {
      file {
        path => "/usr/local/tomcat/logs/tomcat_access_log.*.log"   # must match the prefix and suffix set in server.xml
        start_position => "end"
        type => "tomcat-access-log"
        codec => "json"                 # parse each JSON line into event fields
      }
      file {
        path => "/var/log/messages"
        start_position => "end"
        type => "system-log"
      }
    }

    output {
      if [type] == "tomcat-access-log" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "logstash-tomcat-5616-access-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "system-log" {
        elasticsearch {
          hosts => ["192.168.56.12:9200"]   # written to a different ES server
          index => "system-log-5616-%{+YYYY.MM.dd}"
        }
      }
    }

3.4 Restart Logstash and confirm

    systemctl restart logstash
    tail -f /var/log/logstash/logstash-plain.log   # check the log
    chmod 644 /var/log/messages                    # fix the permissions
    systemctl restart logstash                     # restart Logstash again

3.5 Access Tomcat and generate logs

    echo "2017-11-07" >> /var/log/messages

Tomcat is usually restarted by force-killing it with kill -9. Don't ask me why; I'm not very familiar with Tomcat.

From here on I simply copy and paste from my earlier notes without re-testing. The following steps were done on ELK 5.4.

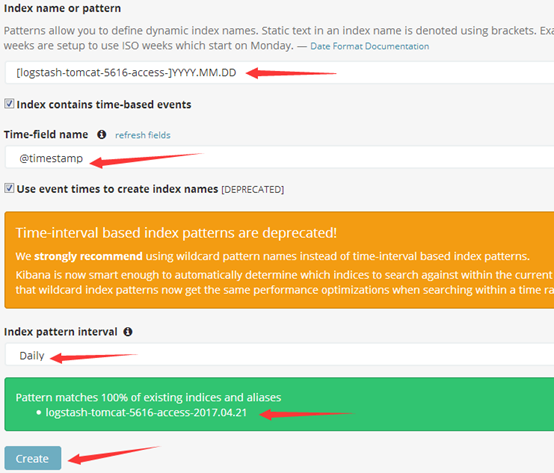

3.6 Add the logstash-tomcat-5616-access- index pattern in Kibana:

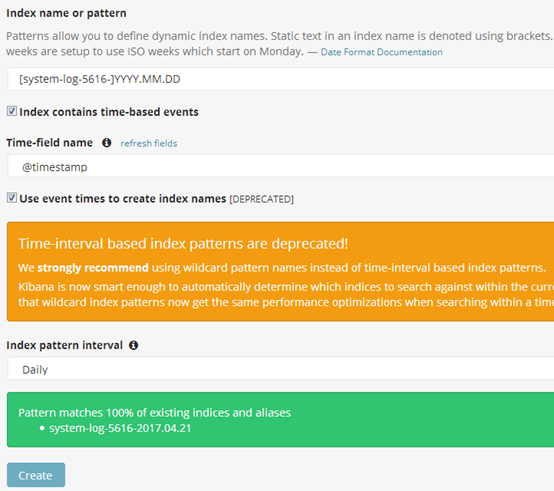

3.7 Add the system-log-5616- index pattern in Kibana:

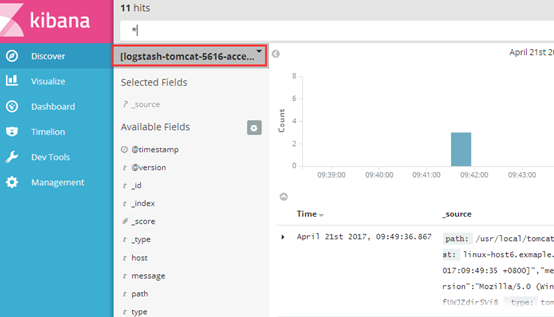

3.8 Verify the data:

3.9 Send bulk requests with ab from another server and verify the data:

    [root@linux-host3 ~]# yum install httpd-tools -y
    [root@linux-host3 ~]# ab -n1000 -c100 http://192.168.56.16:8080/webdir/

4. Collecting Java logs

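Use the multiline codec to handle multi-line entries. It merges several physical lines into one event, and the what option controls whether a matched line is merged with the previous line or with the next one. See https://www.elastic.co/guide/en/logstash/current/plugins-codecs-multiline.html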
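The grouping rule is easiest to see on a few lines. Below is a simplified Python model of it. It is not the codec's actual code: multiline is a hypothetical function, and the Java log lines are made up. With the settings used in this section (pattern ^\[, negate true, what previous), every line that does not start with [ is appended to the line before it.

```python
import re


def multiline(lines, pattern=r"^\[", negate=True, what="previous"):
    """Simplified model of how the multiline codec groups lines into events."""
    regex = re.compile(pattern)
    events, buf = [], []

    def flush():
        if buf:
            events.append("\n".join(buf))
            buf.clear()

    for line in lines:
        # With negate=True the rule applies to lines that do NOT match the pattern.
        applies = bool(regex.search(line)) != negate
        if what == "previous":
            if not applies:
                flush()        # this line starts a new event
            buf.append(line)   # continuation lines are glued to the previous one
        elif what == "next":
            buf.append(line)
            if not applies:
                flush()        # this line ends the current event
        else:
            raise ValueError("what must be 'previous' or 'next'")
    flush()  # the real codec waits for the next event (or auto_flush_interval) instead
    return events


java_log = [
    "[2018-04-21T14:33:00,123][WARN ][o.e.b.BootstrapChecks] example warning",
    "java.lang.IllegalStateException: example",
    "\tat org.example.Demo.run(Demo.java:42)",
    "[2018-04-21T14:33:01,456][INFO ][o.e.n.Node] started",
]
for event in multiline(java_log):
    print(repr(event))
```

The stack trace collapses into the first event, so a search in Kibana returns the whole exception instead of loose fragments.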

4.1 Deploy Logstash on the Elasticsearch server:

    [root@linux-host1 ~]# chown logstash.logstash /usr/share/logstash/data/queue -R
    [root@linux-host1 ~]# ll -d /usr/share/logstash/data/queue
    drwxr-xr-x 2 logstash logstash 6 Apr 19 20:03 /usr/share/logstash/data/queue

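    [root@linux-host1 ~]# cat /etc/logstash/conf.d/java.conf
    input {
      stdin {
        codec => multiline {
          pattern => "^\["       # a line starting with "[" begins a new log entry
          negate => true         # apply "what" to lines that do NOT match the pattern
          what => "previous"     # append them to the previous line; "next" joins them to the following line
        }
      }
    }

    filter {
      # Filters placed here apply to every event. To filter only one log,
      # wrap the filter in a conditional such as: if [type] == "javalog" { ... }
    }

    output {
      stdout {
        codec => rubydebug
      }
    }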

4.3.3.2:测试可以正常启动:

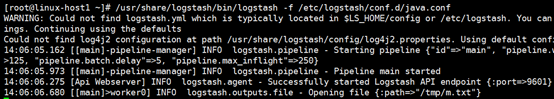

[root@linux-host1 ~]#/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/java.conf

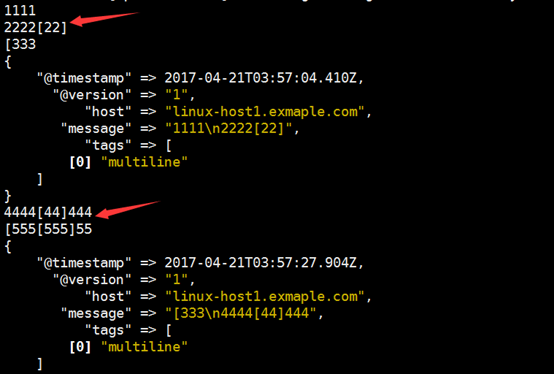

4.3 Test standard input and standard output:

4.4 Configure Logstash to read the log file and write to a file:

    [root@linux-host1 ~]# vim /etc/logstash/conf.d/java.conf

    input {
      file {
        path => "/elk/logs/ELK-Cluster.log"
        type => "javalog"
        start_position => "beginning"
        codec => multiline {
          pattern => "^\["
          negate => true
          what => "previous"
        }
      }
    }

    output {
      if [type] == "javalog" {
        stdout {
          codec => rubydebug
        }
        file {
          path => "/tmp/m.txt"
        }
      }
    }

4.5 Validate the syntax:

    [root@linux-host1 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/java.conf -t

4.6 Change the output to Elasticsearch:

The updated configuration:

    [root@linux-host1 ~]# cat /etc/logstash/conf.d/java.conf
    input {
      file {
        path => "/elk/logs/ELK-Cluster.log"
        type => "javalog"
        start_position => "beginning"
        codec => multiline {
          pattern => "^\["
          negate => true
          what => "previous"
        }
      }
    }

    output {
      if [type] == "javalog" {
        elasticsearch {
          hosts => ["192.168.56.11:9200"]
          index => "javalog-5611-%{+YYYY.MM.dd}"
        }
      }
    }

    [root@linux-host1 ~]# systemctl restart logstash

Then restart Elasticsearch. This is only done to make it write new log entries, so we can verify that Logstash automatically picks up newly written lines.

    [root@linux-host1 ~]# systemctl restart elasticsearch

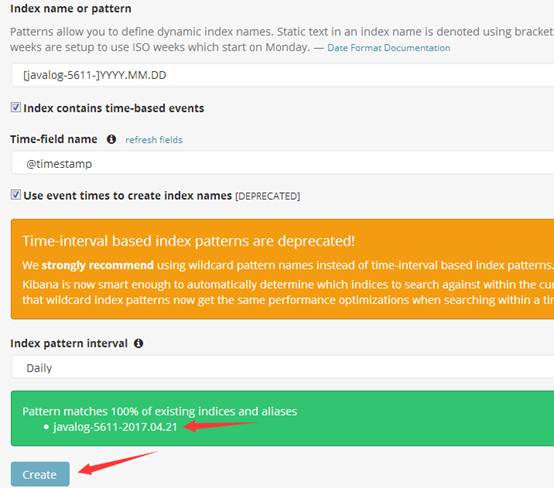

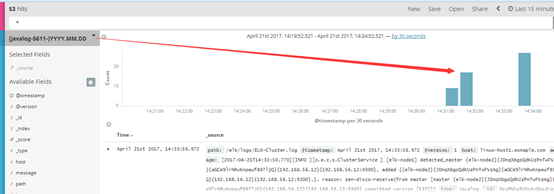

4.7 Add the javalog-5611 index in Kibana:

4.8 Generate data by appending a copy of the existing log to itself:

    [root@linux-host1 ~]# cat /elk/logs/ELK-Cluster.log >> /tmp/1
    [root@linux-host1 ~]# cat /tmp/1 >> /elk/logs/ELK-Cluster.log

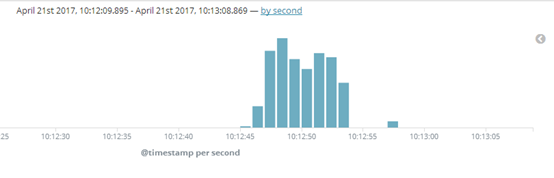

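4.9 View the data in Kibana:

4.10 About sincedb:

The file input records how far it has read each file in a sincedb file, keyed by the file's inode:

    [root@linux-host1 ~]# cat /var/lib/logstash/plugins/inputs/file/.sincedb_1ced15cfacdbb0380466be84d620085a
    134219868 0 2064 29465    # inode, major and minor device numbers, byte offset read so far
    [root@linux-host1 ~]# ll -li /elk/logs/ELK-Cluster.log
    134219868 -rw-r--r-- 1 elasticsearch elasticsearch 29465 Apr 21 14:33 /elk/logs/ELK-Cluster.log

The inode (134219868) matches the log file. The recorded offset (29465) equals the file size, so Logstash has read the whole file.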
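Here is a small Python sketch that reads a sincedb line and checks it against the file. It assumes the four-column layout shown above: inode, major device, minor device, byte offset. Newer versions of the file input may append extra columns, which this parser ignores. All helper names are hypothetical.

```python
import os


def parse_sincedb_line(line):
    """Split a sincedb line into inode, major/minor device numbers and byte offset.

    Only the first four columns are read; later columns, if any, are ignored.
    """
    inode, major, minor, offset = (int(x) for x in line.split()[:4])
    return {"inode": inode, "major": major, "minor": minor, "offset": offset}


def caught_up(entry, inode, size):
    """True if the entry refers to this inode and its offset reached the file size."""
    return entry["inode"] == inode and entry["offset"] >= size


def caught_up_with_file(entry, path):
    """Compare an entry with the current inode and size of a file on disk."""
    st = os.stat(path)
    return caught_up(entry, st.st_ino, st.st_size)


entry = parse_sincedb_line("134219868 0 2064 29465")
# Values from `ll -li` above: inode 134219868, size 29465 bytes.
print(caught_up(entry, 134219868, 29465))  # True: the whole file has been read
```

Because the offset is stored per inode, start_position => "beginning" only takes effect the first time Logstash sees a file. To re-read a file from the start while testing, stop Logstash and delete its sincedb entry.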