FAIR Detectron open-source code

Link first: https://github.com/facebookresearch/Detectron

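...Installing Caffe2 alone produced a pile of problems.

To begin with, after downloading the caffe2 project, the very first step, make, failed with “Protocol "https" not supported or disabled in libcurl”. I tried many fixes and none of them worked.

It is probably a curl problem. I stopped chasing it, because I have since reinstalled the system and everything now works, more smoothly than before.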
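libcurl prints this message when it was built without HTTPS support. Which libcurl the build picked up is not clear from the above, so the following is only a minimal sketch for checking the system curl, assuming the curl command-line tool is installed. has_https is a hypothetical helper name, not part of curl.

```shell
# Report whether a `curl --version` dump (read from stdin) lists https
# among the supported protocols. has_https is a hypothetical helper name.
has_https() {
    if grep -i '^Protocols:' | grep -qw 'https'; then
        echo yes
    else
        echo no
    fi
}

# Demonstration on canned text, so this runs even where curl is absent.
printf 'curl 7.35.0\nProtocols: dict file ftp http\n' | has_https        # prints no
printf 'curl 7.35.0\nProtocols: dict file ftp http https\n' | has_https  # prints yes

# On a real machine:
#   curl --version | has_https
```

If the answer is no, a second curl (for example one built from source under /usr/local) may be shadowing the distribution's copy; `which -a curl` lists every candidate on the PATH.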

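Installing this thing made me furious, so I simply reinstalled the operating system.

Fresh system: Ubuntu 14.04

GPU: GTX 1080

I expected that after a reinstall CUDA would again take ages to set up, and I was braced for a long fight. As it turned out, times have changed: if it had been this easy back then, it would not have taken me the better part of a month.

Enough chatter. Here is a summary of how I installed Caffe2 today.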

I. Install the dependencies first:

sudo apt-get update
sudo apt-get install -y --no-install-recommends \
    build-essential \
    cmake \
    git \
    libgoogle-glog-dev \
    libgtest-dev \
    libiomp-dev \
    libleveldb-dev \
    liblmdb-dev \
    libopencv-dev \
    libopenmpi-dev \
    libsnappy-dev \
    libprotobuf-dev \
    openmpi-bin \
    openmpi-doc \
    protobuf-compiler \
    python-dev \
    python-pip
sudo pip install \
    future \
    numpy \
    protobuf

One very important step here: upgrade pip! Skipping it can make the pip installs below fail, and opencv-python>=3.0 in particular will not install:

sudo pip install --upgrade pip

Ubuntu 14.04 and Ubuntu 16.04 each need a different package:

# for Ubuntu 14.04
sudo apt-get install -y --no-install-recommends libgflags2
# for Ubuntu 16.04
sudo apt-get install -y --no-install-recommends libgflags-dev
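If the same script has to run on both releases, the choice can be made automatically. A minimal sketch, assuming `lsb_release -rs` prints the release number (for example 14.04); gflags_pkg is a hypothetical helper name, and only the two releases covered above are handled.

```shell
# Map an Ubuntu release number to the gflags package named above.
# gflags_pkg is a hypothetical helper; other releases are rejected.
gflags_pkg() {
    case "$1" in
        14.04) echo libgflags2 ;;
        16.04) echo libgflags-dev ;;
        *) echo "unsupported release: $1" >&2; return 1 ;;
    esac
}

gflags_pkg 14.04   # prints libgflags2
gflags_pkg 16.04   # prints libgflags-dev

# On a real machine:
#   sudo apt-get install -y --no-install-recommends "$(gflags_pkg "$(lsb_release -rs)")"
```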

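======================== The following steps are for a GPU install; skip them if you are using the CPU only =================================

Now for the fun part. Installing CUDA has gone from a ranked match straight down to easy-mode bots!

I am on Ubuntu 14.04, so I followed these steps: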

sudo apt-get update && sudo apt-get install wget -y --no-install-recommends
wget "http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1404/x86_64/cuda-repo-ubuntu1404_8.0.61-1_amd64.deb"
sudo dpkg -i cuda-repo-ubuntu1404_8.0.61-1_amd64.deb
sudo apt-get update
sudo apt-get install cuda

On 16.04, run the following instead:

sudo apt-get update && sudo apt-get install wget -y --no-install-recommends
wget "http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-repo-ubuntu1604_8.0.61-1_amd64.deb"
sudo dpkg -i cuda-repo-ubuntu1604_8.0.61-1_amd64.deb
sudo apt-get update
sudo apt-get install cuda

And that is CUDA installed! I could hardly believe it.
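To confirm what actually got installed, the toolkit can be asked for its version. A minimal sketch, assuming the package created the usual /usr/local/cuda symlink and that nvcc prints a line of the form "Cuda compilation tools, release 8.0, V8.0.61"; cuda_release is a hypothetical helper name.

```shell
# Extract the release number from `nvcc --version` output read on stdin.
# cuda_release is a hypothetical helper name.
cuda_release() {
    sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

# Demonstration on a canned line, so this runs without CUDA present.
printf 'Cuda compilation tools, release 8.0, V8.0.61\n' | cuda_release   # prints 8.0

# On a real machine (a reboot may be needed before the driver is loaded):
#   /usr/local/cuda/bin/nvcc --version | cuda_release
#   nvidia-smi
```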

Next, install cuDNN; either 5.1 or 6.0 works.

CUDNN_URL="http://developer.download.nvidia.com/compute/redist/cudnn/v5.1/cudnn-8.0-linux-x64-v5.1.tgz"
wget ${CUDNN_URL}
sudo tar -xzf cudnn-8.0-linux-x64-v5.1.tgz -C /usr/local
rm cudnn-8.0-linux-x64-v5.1.tgz && sudo ldconfig

You don't need to understand anything that happens in between; it is simply installed, just like that?!
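For anyone who does want to see what landed on disk, the cuDNN header records its own version. A minimal sketch, assuming the archive unpacked to /usr/local/cuda/include/cudnn.h and that the header defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL as the 5.x and 6.x headers do; cudnn_version is a hypothetical helper name.

```shell
# Read a cudnn.h on stdin and print MAJOR.MINOR.PATCHLEVEL.
# cudnn_version is a hypothetical helper name.
cudnn_version() {
    awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { maj = $3 }
         $1 == "#define" && $2 == "CUDNN_MINOR"      { min = $3 }
         $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { pat = $3 }
         END { print maj "." min "." pat }'
}

# Demonstration on canned header lines.
printf '#define CUDNN_MAJOR 5\n#define CUDNN_MINOR 1\n#define CUDNN_PATCHLEVEL 10\n' | cudnn_version   # prints 5.1.10

# On a real machine:
#   cudnn_version < /usr/local/cuda/include/cudnn.h
```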

=======================================================================================================

II. Download and build Caffe2

git clone --recursive https://github.com/caffe2/caffe2.git && cd caffe2
make && cd build && sudo make install

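Pay attention to the --recursive flag (highlighted in red in the original post). It makes the clone recursive, so everything under third_party is downloaded in one go. Without it, the third_party folder is empty! (Downloading directly from the caffe2/caffe2.git web page also leaves third_party without its files.)

Once the first command finishes, you get a caffe2 folder in the directory where you ran it, and && cd caffe2 enters that folder. (If you download caffe2-master.zip in a browser instead, the extracted folder is named caffe2-master.)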
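If the clone was already made without --recursive, it does not have to be thrown away: `git submodule update --init --recursive` fetches the missing submodules in place. A minimal sketch for detecting that situation; has_empty_subdir is a hypothetical helper name, and treating "some empty subfolder under third_party" as the symptom is an assumption.

```shell
# Succeed (exit status 0) if any direct subdirectory of $1 is empty.
# has_empty_subdir is a hypothetical helper name.
has_empty_subdir() {
    for d in "$1"/*/; do
        [ -d "$d" ] || continue
        if [ -z "$(ls -A "$d")" ]; then
            return 0
        fi
    done
    return 1
}

# On a real checkout:
#   if has_empty_subdir caffe2/third_party; then
#       (cd caffe2 && git submodule update --init --recursive)
#   fi
```

This only helps for a git clone; a browser-downloaded zip has no repository metadata, so the submodules cannot be fetched that way.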

Next comes make (preferably make -j32; a parallel build is faster), which generates a build folder. Then cd build && sudo make install, and Caffe2 is installed!
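The 32 is just the number I used; on a machine with fewer cores a job count that high may exhaust memory rather than speed things up. A minimal sketch that derives the count from the machine, assuming either nproc (GNU coreutils) or getconf is available; build_jobs is a hypothetical helper name.

```shell
# Print the number of online CPU cores, for use as the make job count.
# build_jobs is a hypothetical helper name.
build_jobs() {
    if command -v nproc >/dev/null 2>&1; then
        nproc
    else
        getconf _NPROCESSORS_ONLN
    fi
}

# On a real checkout:
#   make -j"$(build_jobs)" && cd build && sudo make install
```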

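III. Test whether Caffe2 installed successfully:

One thing to note here, which the Install document also adds near the end: caffe2.python refers to the python folder inside the caffe2 folder under caffe2/build/.

The command below can be run in either of two ways:

1. cd into caffe2/build (the directory that contains caffe2/python), then run the command from that terminal.

2. Run export PYTHONPATH=/home/username/caffe2/build:$PYTHONPATH on the command line. Python then searches this path automatically, so the command below works from any directory.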

python -c 'from caffe2.python import core' 2>/dev/null && echo "Success" || echo "Failure"

If the installation succeeded, this prints Success; otherwise it prints Failure.

GPU users should run one more test:

python -m caffe2.python.operator_test.relu_op_test

You may see ImportError: No module named hypothesis. Don't panic: run sudo pip install hypothesis, then retry the command above and it should work.

Finally, I recommend adding the paths above to the end of ~/.bashrc, so that you don't have to run export PYTHONPATH=... every time you use Caffe2:

gedit ~/.bashrc  # if gedit is not installed, first run: sudo apt-get install gedit

Add the following lines at the end of the file:

export PYTHONPATH=/usr/local:$PYTHONPATH
export PYTHONPATH=/home/username/caffe2/build/caffe2/python:$PYTHONPATH
export PYTHONPATH=/home/username/caffe2/build:$PYTHONPATH
export LD_LIBRARY_PATH=/usr/local/lib:$LD_LIBRARY_PATH

After saving, run source ~/.bashrc to apply the changes.
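One side effect of plain export lines is that every extra source ~/.bashrc prepends the same directories again. A minimal sketch of an idempotent alternative that could go in ~/.bashrc instead; prepend_pythonpath is a hypothetical helper name, and /opt/example is only a placeholder for whatever PYTHONPATH already holds.

```shell
# Prepend $1 to PYTHONPATH unless it is already listed.
# prepend_pythonpath is a hypothetical helper name.
prepend_pythonpath() {
    case ":${PYTHONPATH:-}:" in
        *":$1:"*) ;;   # already present, nothing to do
        *) PYTHONPATH="$1${PYTHONPATH:+:$PYTHONPATH}" ;;
    esac
    export PYTHONPATH
}

# Demonstration: the second call changes nothing.
PYTHONPATH=/opt/example
prepend_pythonpath /home/username/caffe2/build
prepend_pythonpath /home/username/caffe2/build
echo "$PYTHONPATH"   # prints /home/username/caffe2/build:/opt/example
```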

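At this point Caffe2 is done.

An aside: since CUDA was already installed as part of the Caffe2 setup, does that mean I can install Caffe directly as well? Yes!

The first step is again installing dependencies. Some may overlap with Caffe2's; never mind, just run the whole thing: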

sudo apt-get install freeglut3-dev build-essential libx11-dev libxmu-dev libxi-dev libgl1-mesa-glx libglu1-mesa libglu1-mesa-dev

Next, add the following line to the end of ~/.bashrc:

export PATH=/usr/local/cuda-8.0/bin:$PATH

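Then register the shared library path. This step was not needed for Caffe2, but it is required to get Caffe through the build:

# Create a file named cuda.conf under /etc/ld.so.conf.d/ with the following content:
/usr/local/cuda-8.0/lib64

After saving, apply the change: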

sudo ldconfig
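The file can also be created from the command line instead of an editor. A minimal sketch, assuming tee is available; write_ld_conf is a hypothetical helper name, and the SUDO variable is a made-up convention for running the write as root.

```shell
# Write a one-line ld.so configuration file.
#   $1: library directory to register
#   $2: conf file to create
# Set SUDO=sudo to write somewhere only root may write.
# write_ld_conf and SUDO are hypothetical names.
write_ld_conf() {
    printf '%s\n' "$1" | ${SUDO:-} tee "$2" > /dev/null
}

# On a real machine:
#   SUDO=sudo write_ld_conf /usr/local/cuda-8.0/lib64 /etc/ld.so.conf.d/cuda.conf
#   sudo ldconfig
#   ldconfig -p | grep libcudart   # the CUDA runtime should now be listed
```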

Right, finally on to building Caffe. Here is my Makefile.config; the places I modified were marked in red in the original post:

## Refer to http://caffe.berkeleyvision.org/installation.html
# Contributions simplifying and improving our build system are welcome!

# cuDNN acceleration switch (uncomment to build with cuDNN).
USE_CUDNN := 1

# CPU-only switch (uncomment to build without GPU support).
# CPU_ONLY := 1

# uncomment to disable IO dependencies and corresponding data layers
# USE_OPENCV := 0
# USE_LEVELDB := 0
# USE_LMDB := 0

# uncomment to allow MDB_NOLOCK when reading LMDB files (only if necessary)
# You should not set this flag if you will be reading LMDBs with any
# possibility of simultaneous read and write
# ALLOW_LMDB_NOLOCK := 1

# Uncomment if you're using OpenCV 3
# OPENCV_VERSION := 3

# To customize your choice of compiler, uncomment and set the following.
# N.B. the default for Linux is g++ and the default for OSX is clang++
# CUSTOM_CXX := g++

# CUDA directory contains bin/ and lib/ directories that we need.
CUDA_DIR := /usr/local/cuda
# On Ubuntu 14.04, if cuda tools are installed via
# "sudo apt-get install nvidia-cuda-toolkit" then use this instead:
# CUDA_DIR := /usr

# CUDA architecture setting: going with all of them.
# For CUDA < 6.0, comment the *_50 through *_61 lines for compatibility.
# For CUDA < 8.0, comment the *_60 and *_61 lines for compatibility.
# For CUDA >= 9.0, comment the *_20 and *_21 lines for compatibility.
CUDA_ARCH := -gencode arch=compute_30,code=sm_30 \
    -gencode arch=compute_35,code=sm_35 \
    -gencode arch=compute_50,code=sm_50 \
    -gencode arch=compute_52,code=sm_52 \
    -gencode arch=compute_60,code=sm_60 \
    -gencode arch=compute_61,code=sm_61 \
    -gencode arch=compute_61,code=compute_61

# BLAS choice:
# atlas for ATLAS (default)
# mkl for MKL
# open for OpenBlas
BLAS := atlas
# Custom (MKL/ATLAS/OpenBLAS) include and lib directories.
# Leave commented to accept the defaults for your choice of BLAS
# (which should work)!
# BLAS_INCLUDE := /path/to/your/blas
# BLAS_LIB := /path/to/your/blas

# Homebrew puts openblas in a directory that is not on the standard search path
# BLAS_INCLUDE := $(shell brew --prefix openblas)/include
# BLAS_LIB := $(shell brew --prefix openblas)/lib

# This is required only if you will compile the matlab interface.
# MATLAB directory should contain the mex binary in /bin.
# MATLAB_DIR := /usr/local
# MATLAB_DIR := /Applications/MATLAB_R2012b.app

# NOTE: this is required only if you will compile the python interface.
# We need to be able to find Python.h and numpy/arrayobject.h.
PYTHON_INCLUDE := /usr/include/python2.7 \
    /usr/lib/python2.7/dist-packages/numpy/core/include
# Anaconda Python distribution is quite popular. Include path:
# Verify anaconda location, sometimes it's in root.
# ANACONDA_HOME := $(HOME)/anaconda
# PYTHON_INCLUDE := $(ANACONDA_HOME)/include \
    # $(ANACONDA_HOME)/include/python2.7 \
    # $(ANACONDA_HOME)/lib/python2.7/site-packages/numpy/core/include

# Uncomment to use Python 3 (default is Python 2)
# PYTHON_LIBRARIES := boost_python3 python3.5m
# PYTHON_INCLUDE := /usr/include/python3.5m \
#     /usr/lib/python3.5/dist-packages/numpy/core/include

# We need to be able to find libpythonX.X.so or .dylib.
PYTHON_LIB := /usr/lib
# PYTHON_LIB := $(ANACONDA_HOME)/lib

# Homebrew installs numpy in a non standard path (keg only)
# PYTHON_INCLUDE += $(dir $(shell python -c 'import numpy.core; print(numpy.core.__file__)'))/include
# PYTHON_LIB += $(shell brew --prefix numpy)/lib

# Uncomment to support layers written in Python (will link against Python libs)
WITH_PYTHON_LAYER := 1

# Whatever else you find you need goes here.
INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include
LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib

# If Homebrew is installed at a non standard location (for example your home directory) and you use it for general dependencies
# INCLUDE_DIRS += $(shell brew --prefix)/include
# LIBRARY_DIRS += $(shell brew --prefix)/lib

# NCCL acceleration switch (uncomment to build with NCCL)
# https://github.com/NVIDIA/nccl (last tested version: v1.2.3-1+cuda8.0)
# USE_NCCL := 1

# Uncomment to use `pkg-config` to specify OpenCV library paths.
# (Usually not necessary -- OpenCV libraries are normally installed in one of the above $LIBRARY_DIRS.)
# USE_PKG_CONFIG := 1

# N.B. both build and distribute dirs are cleared on `make clean`
BUILD_DIR := build
DISTRIBUTE_DIR := distribute

# Uncomment for debugging. Does not work on OSX due to https://github.com/BVLC/caffe/issues/171
# DEBUG := 1

# The ID of the GPU that 'make runtest' will use to run unit tests.
TEST_GPUID := 0

# enable pretty build (comment to see full commands)
Q ?= @

Only two places were changed. Then:

make all -j32
make test -j32
make runtest -j32

Finally, test it by training on the MNIST dataset:

# download the dataset
./data/mnist/get_mnist.sh
# generate the LMDB files
./examples/mnist/create_mnist.sh
# train
./examples/mnist/train_lenet.sh

IV. Installing the other dependencies

① Python dependencies (upgrade pip before this step, otherwise the install fails; the quotes keep the shell from treating >=3.0 as an output redirect):

pip install numpy pyyaml matplotlib 'opencv-python>=3.0' setuptools Cython mock

② COCO API:

# COCOAPI=/path/to/clone/cocoapi
git clone https://github.com/cocodataset/cocoapi.git $COCOAPI
cd $COCOAPI/PythonAPI
# Install into global site-packages
make install

V. Installing Detectron

# DETECTRON=/path/to/clone/detectron
git clone https://github.com/facebookresearch/detectron $DETECTRON
cd $DETECTRON/lib && make
cd ~
python2 $DETECTRON/tests/test_spatial_narrow_as_op.py

If everything has worked up to this point, you can go on to Inference with Pretrained Models:

cd Detectron-master
python2 tools/infer_simple.py \
    --cfg configs/12_2017_baselines/e2e_mask_rcnn_R-101-FPN_2x.yaml \
    --output-dir /tmp/detectron-visualizations \
    --image-ext jpg \
    --wts https://s3-us-west-2.amazonaws.com/detectron/35861858/12_2017_baselines/e2e_mask_rcnn_R-101-FPN_2x.yaml.02_32_51.SgT4y1cO/output/train/coco_2014_train:coco_2014_valminusminival/generalized_rcnn/model_final.pkl \
    demo

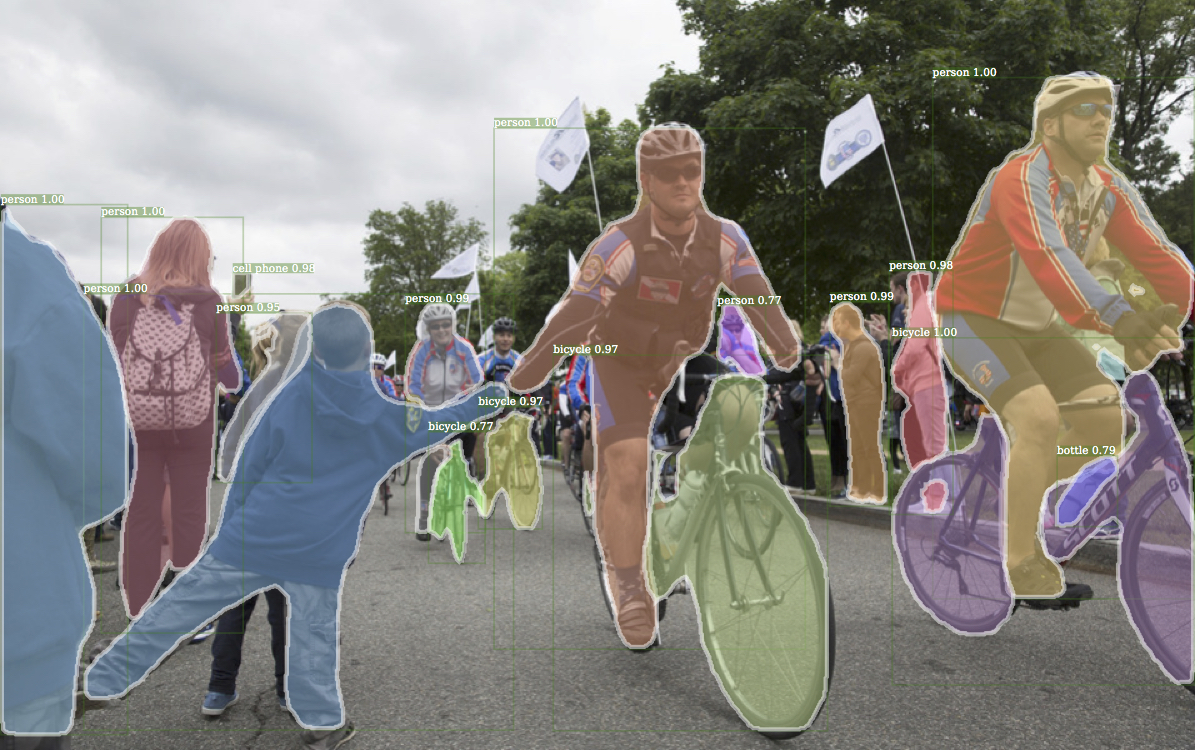

When the program finishes, the predictions are written as PDF files to the /tmp/detectron-visualizations folder. Here is an example:

INPUT:

OUTPUT:

VI. Downloading the COCO dataset

mkdir data && cd data
wget http://images.cocodataset.org/zips/test2014.zip
wget http://images.cocodataset.org/zips/train2014.zip
wget http://images.cocodataset.org/zips/val2014.zip

After unzipping, arrange the files as follows:

coco
|_ coco_train2014
|  |_ <im-1-name>.jpg
|  |_ ...
|  |_ <im-N-name>.jpg
|_ coco_val2014
|_ ...
|_ annotations
   |_ instances_train2014.json
   |_ ...

(Note: after downloading I was still missing annotations/instances_minival2014.json. It has to be downloaded separately and placed in the annotations folder: https://s3-us-west-2.amazonaws.com/detectron/coco/coco_annotations_minival.tgz)
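Since a single missing file is easy to overlook, the layout can be checked before going further. A minimal sketch that tests only the entries named above (the two image folders, instances_train2014.json and instances_minival2014.json); check_coco_layout is a hypothetical helper name, and a full setup may need more annotation files than these.

```shell
# Print every expected entry that is missing under the dataset root $1.
# Returns 0 when all are present, 1 otherwise.
# check_coco_layout is a hypothetical helper name.
check_coco_layout() {
    root=$1
    missing=0
    for entry in coco_train2014 coco_val2014 \
                 annotations/instances_train2014.json \
                 annotations/instances_minival2014.json; do
        if [ ! -e "$root/$entry" ]; then
            echo "missing: $entry"
            missing=1
        fi
    done
    return "$missing"
}

# On a real machine:
#   check_coco_layout /home/cc/data/coco && echo "layout looks complete"
```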

Then create a symlink into the Detectron project. Note that the path here must be absolute! Mine, for example:

ln -s /home/cc/data/coco /home/cc/Detectron-master/lib/datasets/data/coco
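The reason for the absolute path is that a relative symlink target is resolved from the directory holding the link, not from the directory where ln was run, so a relative target typed from somewhere else ends up dangling. A minimal sketch that sidesteps this by resolving the source first, assuming GNU `readlink -f` as shipped with Ubuntu; link_abs is a hypothetical helper name.

```shell
# Create symlink $2 pointing at the absolute, resolved path of $1.
# link_abs is a hypothetical helper name; relies on GNU `readlink -f`.
link_abs() {
    ln -s "$(readlink -f "$1")" "$2"
}

# On a real machine, from any working directory:
#   link_abs ~/data/coco ~/Detectron-master/lib/datasets/data/coco
#   ls -l ~/Detectron-master/lib/datasets/data/coco   # shows the absolute target
```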

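With that, the whole Detectron project is configured! Time to take off.

P.S. If you want to train a model on your own dataset with Detectron, it is best to convert the annotations to the COCO JSON format, then add the new dataset's paths to lib/datasets/dataset_catalog.py.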