Spark函数详解系列之RDD基本转换

摘要:

RDD:弹性分布式数据集,是一种特殊集合 ‚ 支持多种来源 ‚ 有容错机制 ‚ 可以被缓存 ‚ 支持并行操作,一个RDD代表一个分区里的数据集

RDD有两种操作算子:

Transformation(转换):Transformation属于延迟计算,当一个RDD转换成另一个RDD时并没有立即进行转换,仅仅是记住了数据集的逻辑操作

Ation(执行):触发Spark作业的运行,真正触发转换算子的计算

本系列主要讲解Spark中常用的函数操作:

1.RDD基本转换

本节所讲函数

基础转换操作:

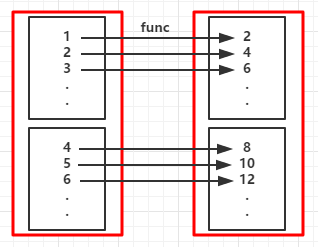

(例1)

1 2 3 4 5 6 7 8 9 10 | object Map { def main(args: Array[String]) { val conf = new SparkConf().setMaster("local").setAppName("map") val sc = new SparkContext(conf) val rdd = sc.parallelize(1 to 10) //创建RDD val map = rdd.map(_*2) //对RDD中的每个元素都乘于2 map.foreach(x => print(x+" ")) sc.stop() }} |

输出:

2 4 6 8 10 12 14 16 18 20

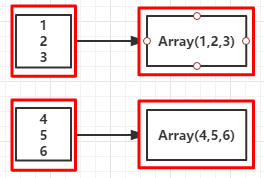

(RDD依赖图:红色块表示一个RDD区,黑色块表示该分区集合,下同)

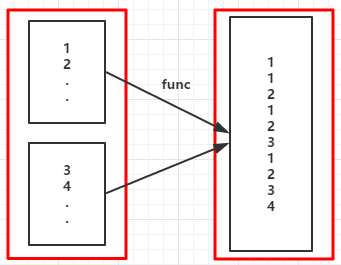

(例2)

1 2 3 4 | //...省略sc val rdd = sc.parallelize(1 to 5) val fm = rdd.flatMap(x => (1 to x)).collect() fm.foreach( x => print(x + " ")) |

输出:

1 1 2 1 2 3 1 2 3 4 1 2 3 4 5

如果是map函数其输出如下:

Range(1) Range(1, 2) Range(1, 2, 3) Range(1, 2, 3, 4) Range(1, 2, 3, 4, 5)

(RDD依赖图)

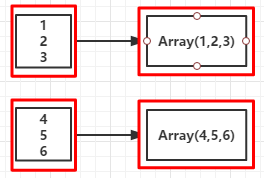

func的类型:Iterator[T] => Iterator[U]

假设有N个元素,有M个分区,那么map的函数的将被调用N次,而mapPartitions被调用M次,当在映射的过程中不断的创建对象时就可以使用mapPartitions比map的效率要高很多,比如当向数据库写入数据时,如果使用map就需要为每个元素创建connection对象,但使用mapPartitions的话就需要为每个分区创建connetcion对象

(例3):输出有女性的名字:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | object MapPartitions {//定义函数 def partitionsFun(/*index : Int,*/iter : Iterator[(String,String)]) : Iterator[String] = { var woman = List[String]() while (iter.hasNext){ val next = iter.next() next match { case (_,"female") => woman = /*"["+index+"]"+*/next._1 :: woman case _ => } } return woman.iterator } def main(args: Array[String]) { val conf = new SparkConf().setMaster("local").setAppName("mappartitions") val sc = new SparkContext(conf) val l = List(("kpop","female"),("zorro","male"),("mobin","male"),("lucy","female")) val rdd = sc.parallelize(l,2) val mp = rdd.mapPartitions(partitionsFun) /*val mp = rdd.mapPartitionsWithIndex(partitionsFun)*/ mp.collect.foreach(x => (print(x +" "))) //将分区中的元素转换成Aarray再输出 }} |

输出:

kpop lucy

其实这个效果可以用一条语句完成

1 | val mp = rdd.mapPartitions(x => x.filter(_._2 == "female")).map(x => x._1) |

之所以不那么做是为了演示函数的定义

(RDD依赖图)

func类型:(Int, Iterator[T]) => Iterator[U]

(例4):将例3橙色的注释部分去掉即是

输出:(带了分区索引)

[0]kpop [1]lucy

5.sample(withReplacement,fraction,seed):以指定的随机种子随机抽样出数量为fraction的数据,withReplacement表示是抽出的数据是否放回,true为有放回的抽样,false为无放回的抽样

(例5):从RDD中随机且有放回的抽出50%的数据,随机种子值为3(即可能以1 2 3的其中一个起始值)

1 2 3 4 5 | //省略 val rdd = sc.parallelize(1 to 10) val sample1 = rdd.sample(true,0.5,3) sample1.collect.foreach(x => print(x + " ")) sc.stop |

1 2 3 4 5 6 | //省略sc val rdd1 = sc.parallelize(1 to 3) val rdd2 = sc.parallelize(3 to 5) val unionRDD = rdd1.union(rdd2) unionRDD.collect.foreach(x => print(x + " ")) sc.stop |

输出:

1 2 3 3 4 5

1 2 3 4 5 6 | //省略scval rdd1 = sc.parallelize(1 to 3)val rdd2 = sc.parallelize(3 to 5)val unionRDD = rdd1.intersection(rdd2)unionRDD.collect.foreach(x => print(x + " "))sc.stop |

输出:

3 4

1 2 3 4 5 | //省略scval list = List(1,1,2,5,2,9,6,1)val distinctRDD = sc.parallelize(list)val unionRDD = distinctRDD.distinct()unionRDD.collect.foreach(x => print(x + " ")) |

输出:

1 6 9 5 2

1 2 3 4 5 | //省略val rdd1 = sc.parallelize(1 to 3)val rdd2 = sc.parallelize(2 to 5)val cartesianRDD = rdd1.cartesian(rdd2)cartesianRDD.foreach(x => println(x + " ")) |

输出:

(1,2) (1,3) (1,4) (1,5) (2,2) (2,3) (2,4) (2,5) (3,2) (3,3) (3,4) (3,5)

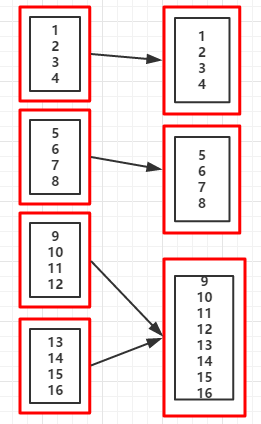

(RDD依赖图)

目,但不会报错,只是分区个数还是原来的

(例9:)shuffle=false

1 2 3 4 | //省略 val rdd = sc.parallelize(1 to 16,4)val coalesceRDD = rdd.coalesce(3) //当suffle的值为false时,不能增加分区数(即分区数不能从5->7)println("重新分区后的分区个数:"+coalesceRDD.partitions.size) |

输出:

重新分区后的分区个数:3 //分区后的数据集 List(1, 2, 3, 4) List(5, 6, 7, 8) List(9, 10, 11, 12, 13, 14, 15, 16)

(例9.1:)shuffle=true

1 2 3 4 5 | //...省略val rdd = sc.parallelize(1 to 16,4)val coalesceRDD = rdd.coalesce(7,true)println("重新分区后的分区个数:"+coalesceRDD.partitions.size)println("RDD依赖关系:"+coalesceRDD.toDebugString) |

输出:

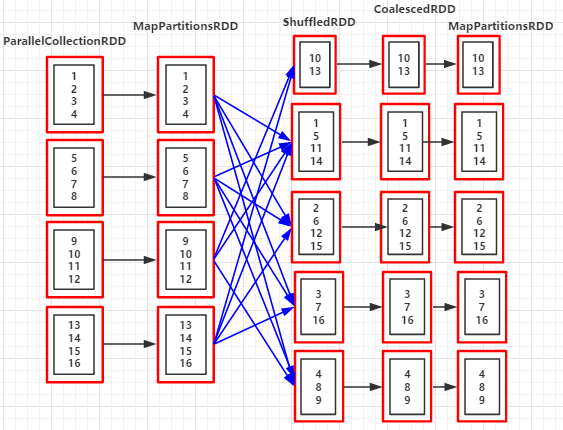

重新分区后的分区个数:5 RDD依赖关系:(5) MapPartitionsRDD[4] at coalesce at Coalesce.scala:14 [] | CoalescedRDD[3] at coalesce at Coalesce.scala:14 [] | ShuffledRDD[2] at coalesce at Coalesce.scala:14 [] +-(4) MapPartitionsRDD[1] at coalesce at Coalesce.scala:14 [] | ParallelCollectionRDD[0] at parallelize at Coalesce.scala:13 [] //分区后的数据集 List(10, 13) List(1, 5, 11, 14) List(2, 6, 12, 15) List(3, 7, 16) List(4, 8, 9)

(RDD依赖图:coalesce(3,flase))

(RDD依赖图:coalesce(3,true))

11.repartition(numPartition):是函数coalesce(numPartition,true)的实现,效果和例9.1的coalesce(numPartition,true)的一样

1 2 3 4 5 | //省略val rdd = sc.parallelize(1 to 16,4)val glomRDD = rdd.glom() //RDD[Array[T]]glomRDD.foreach(rdd => println(rdd.getClass.getSimpleName))sc.stop |

输出:

int[] //说明RDD中的元素被转换成数组Array[Int]

1 2 3 4 5 6 7 | //省略scval rdd = sc.parallelize(1 to 10)val randomSplitRDD = rdd.randomSplit(Array(1.0,2.0,7.0))randomSplitRDD(0).foreach(x => print(x +" "))randomSplitRDD(1).foreach(x => print(x +" "))randomSplitRDD(2).foreach(x => print(x +" "))sc.stop |

输出:

2 4 3 8 9 1 5 6 7 10

以上例子源码地址:https://github.com/Mobin-F/SparkExample/tree/master/src/main/scala/com/mobin/SparkRDDFun/TransFormation/KVRDD

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· 阿里巴巴 QwQ-32B真的超越了 DeepSeek R-1吗?

· 【译】Visual Studio 中新的强大生产力特性

· 10年+ .NET Coder 心语 ── 封装的思维:从隐藏、稳定开始理解其本质意义

· 【设计模式】告别冗长if-else语句:使用策略模式优化代码结构