HBase 2.0.5 WordCount

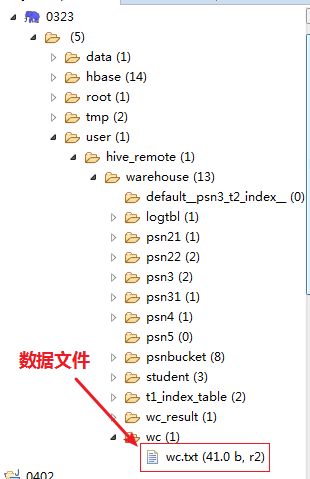

The input file for the WordCount job is stored on HDFS.

wc.txt:

    hive hadoop hello
    hello world
    hbase
    hive

Goal: run the WordCount computation and write the results to an HBase table.
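As a sanity check, the expected result can be worked out locally before running the job. The following is a minimal plain-Java sketch and not part of the original project: the class name `ExpectedWordCount` is hypothetical, and the four input lines are simply copied from wc.txt above.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper, not part of the original project:
// computes the word counts the MapReduce job is expected to produce.
class ExpectedWordCount {

    // Counts space-separated words across all lines, sorted by word.
    static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // The four lines of wc.txt.
        List<String> lines = Arrays.asList(
                "hive hadoop hello", "hello world", "hbase", "hive");
        // Each entry corresponds to one expected row in the HBase table.
        count(lines).forEach((word, n) -> System.out.println(word + " -> " + n));
    }
}
```

For the sample input this yields five entries: hadoop=1, hbase=1, hello=2, hive=2, world=1.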

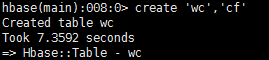

1. First, create the table wc in HBase

create 'wc','cf'

2. WCRunner.class

    package com.bjsxt.wc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    // Be careful to import TableMapReduceUtil from the mapreduce package.
    // I imported the one under mapred by mistake at first, and it cost me a lot of time.
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    public class WCRunner {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://node01:8020");
            conf.set("hbase.zookeeper.quorum", "node02,node03,node04");

            Job job = Job.getInstance(conf);
            job.setJarByClass(WCRunner.class);

            // Specify the mapper and its output key/value types
            job.setMapperClass(WCMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // Input directory on HDFS that contains wc.txt
            FileInputFormat.addInputPath(job, new Path("/user/hive_remote/warehouse/wc/"));

            // Specify the reducer and send its output to the HBase table "wc"
            // (the last argument, addDependencyJars, is false)
            TableMapReduceUtil.initTableReducerJob("wc", WCReducer.class, job, null, null, null, null, false);

            job.waitForCompletion(true);
        }
    }

3. Mapper

    package com.bjsxt.wc;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WCMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split the line on single spaces and emit (word, 1) for every token
            String[] strs = value.toString().split(" ");
            for (String str : strs) {
                context.write(new Text(str), new IntWritable(1));
            }
        }
    }
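To see what the mapper emits, the tokenization step can be tried outside Hadoop. This is a plain-Java sketch that assumes each line is split exactly as in `WCMapper`; the class `MapperSketch` and both of its methods are hypothetical names used only for illustration. It also shows one caveat: `split(" ")` produces empty tokens when words are separated by more than one space, whereas trimming and splitting on `\\s+` (an alternative, not the author's code) does not.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-alone illustration of the map step; not part of the original project.
class MapperSketch {

    // Mirrors WCMapper: one (word, "1") pair per token produced by split(" ").
    static List<String[]> map(String line) {
        List<String[]> pairs = new ArrayList<>();
        for (String token : line.split(" ")) {
            pairs.add(new String[] {token, "1"});
        }
        return pairs;
    }

    // Alternative tokenizer (an assumption, not the author's code):
    // tolerates repeated whitespace and returns nothing for blank lines.
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        String trimmed = line.trim();
        if (!trimmed.isEmpty()) {
            for (String token : trimmed.split("\\s+")) {
                out.add(token);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int emitted = 0;
        for (String line : new String[] {"hive hadoop hello", "hello world", "hbase", "hive"}) {
            emitted += map(line).size();
        }
        System.out.println("pairs emitted for wc.txt: " + emitted);
        System.out.println("tokens from split(\" \") on 'a  b': " + map("a  b").size());
        System.out.println("tokens from \\s+ split on 'a  b': " + tokens("a  b").size());
    }
}
```

For the sample file the loop emits seven pairs, which agrees with `Map output records=7` in the console output. Since each token later becomes a row key, an empty token is worth guarding against if the input may contain double spaces or blank lines.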

4. Reducer

    package com.bjsxt.wc;

    import java.io.IOException;

    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;

    public class WCReducer extends TableReducer<Text, IntWritable, ImmutableBytesWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum all the 1s emitted for this word
            int sum = 0;
            for (IntWritable it : values) {
                sum += it.get();
            }

            // Row key = the word; cf:ct = the count, stored as a decimal string.
            // Bytes.toBytes encodes strings as UTF-8 regardless of the platform default charset.
            Put put = new Put(Bytes.toBytes(key.toString()));
            put.addColumn(Bytes.toBytes("cf"), Bytes.toBytes("ct"), Bytes.toBytes(String.valueOf(sum)));

            // The output key is not needed when writing a Put to the table
            context.write(null, put);
        }
    }
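The reducer stores each count as the bytes of its decimal string rather than as a binary integer, so whoever reads the cell has to parse the text back into a number. Below is a small plain-Java sketch of that difference, assuming UTF-8 encoding. `CountEncoding` and its methods are hypothetical names, and the 4-byte big-endian form is shown only for comparison.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of how a count can be encoded as a cell value;
// not part of the original project.
class CountEncoding {

    // What the reducer writes: the decimal text of the count.
    static byte[] asText(int sum) {
        return String.valueOf(sum).getBytes(StandardCharsets.UTF_8);
    }

    // How a reader would recover the count from a text-encoded value.
    static int fromText(byte[] value) {
        return Integer.parseInt(new String(value, StandardCharsets.UTF_8));
    }

    // For comparison only: a fixed-width 4-byte big-endian encoding.
    static byte[] asBinary(int sum) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(sum).array();
    }

    public static void main(String[] args) {
        System.out.println("text bytes for 12:   " + asText(12).length);
        System.out.println("binary bytes for 12: " + asBinary(12).length);
        System.out.println("parsed back:         " + fromText(asText(12)));
    }
}
```

The text form keeps values human-readable when the table is scanned, at the cost of a parse step and variable width. A binary form is fixed-width but not readable without decoding.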

5. Eclipse console output

SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/F:/usr/hadoop-lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/F:/usr/hbase-lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 2019-04-07 00:04:00,627 INFO Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1243)) - session.id is deprecated. Instead, use dfs.metrics.session-id 2019-04-07 00:04:00,632 INFO jvm.JvmMetrics (JvmMetrics.java:init(76)) - Initializing JVM Metrics with processName=JobTracker, sessionId= 2019-04-07 00:04:01,392 INFO zookeeper.ReadOnlyZKClient (ReadOnlyZKClient.java:<init>(135)) - Connect 0x1698fc68 to node02:2181,node03:2181,node04:2181 with session timeout=90000ms, retries 30, retry interval 1000ms, keepAlive=60000ms 2019-04-07 00:04:01,662 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT 2019-04-07 00:04:01,662 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:host.name=FF2R0M0IZ0X5J55 2019-04-07 00:04:01,663 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.version=1.8.0_161 2019-04-07 00:04:01,663 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.vendor=Oracle Corporation 2019-04-07 00:04:01,663 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.home=C:\Program Files\Java\jre1.8.0_161 2019-04-07 00:04:01,663 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.class.path=C:\Program Files\Java\jre1.8.0_161\lib\resources.jar;C:\Program Files\Java\jre1.8.0_161\lib\rt.jar;C:\Program Files\Java\jre1.8.0_161\lib\jsse.jar;C:\Program Files\Java\jre1.8.0_161\lib\jce.jar;C:\Program 
Files\Java\jre1.8.0_161\lib\charsets.jar;C:\Program Files\Java\jre1.8.0_161\lib\jfr.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\cldrdata.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\dnsns.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\jaccess.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\jfxrt.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\localedata.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\nashorn.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\sunec.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\sunmscapi.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jre1.8.0_161\lib\ext\zipfs.jar;F:\usr\hadoop-lib\activation-1.1.jar;F:\usr\hadoop-lib\annotations-api.jar;F:\usr\hadoop-lib\antlr-2.7.7.jar;F:\usr\hadoop-lib\aopalliance-1.0.jar;F:\usr\hadoop-lib\apacheds-i18n-2.0.0-M15.jar;F:\usr\hadoop-lib\apacheds-kerberos-codec-2.0.0-M15.jar;F:\usr\hadoop-lib\api-asn1-api-1.0.0-M20.jar;F:\usr\hadoop-lib\api-asn1-ber-1.0.0-M20.jar;F:\usr\hadoop-lib\api-i18n-1.0.0-M20.jar;F:\usr\hadoop-lib\api-ldap-model-1.0.0-M20.jar;F:\usr\hadoop-lib\api-util-1.0.0-M20.jar;F:\usr\hadoop-lib\asm-3.2.jar;F:\usr\hadoop-lib\avro-1.7.4.jar;F:\usr\hadoop-lib\aws-java-sdk-1.7.4.jar;F:\usr\hadoop-lib\azure-storage-2.0.0.jar;F:\usr\hadoop-lib\bootstrap.jar;F:\usr\hadoop-lib\catalina.jar;F:\usr\hadoop-lib\catalina-ant.jar;F:\usr\hadoop-lib\catalina-ha.jar;F:\usr\hadoop-lib\catalina-tribes.jar;F:\usr\hadoop-lib\commons-beanutils-1.7.0.jar;F:\usr\hadoop-lib\commons-beanutils-core-1.8.0.jar;F:\usr\hadoop-lib\commons-cli-1.2.jar;F:\usr\hadoop-lib\commons-codec-1.4.jar;F:\usr\hadoop-lib\commons-collections-3.2.2.jar;F:\usr\hadoop-lib\commons-compress-1.4.1.jar;F:\usr\hadoop-lib\commons-configuration-1.6.jar;F:\usr\hadoop-lib\commons-daemon.jar;F:\usr\hadoop-lib\commons-daemon-1.0.13.jar;F:\usr\hadoop-lib\commons-digester-1.8.jar;F:\usr\hado
op-lib\commons-httpclient-3.1.jar;F:\usr\hadoop-lib\commons-io-2.4.jar;F:\usr\hadoop-lib\commons-lang-2.6.jar;F:\usr\hadoop-lib\commons-lang3-3.3.2.jar;F:\usr\hadoop-lib\commons-logging-1.1.3.jar;F:\usr\hadoop-lib\commons-math3-3.1.1.jar;F:\usr\hadoop-lib\commons-net-3.1.jar;F:\usr\hadoop-lib\curator-client-2.7.1.jar;F:\usr\hadoop-lib\curator-framework-2.7.1.jar;F:\usr\hadoop-lib\curator-recipes-2.7.1.jar;F:\usr\hadoop-lib\ecj-4.3.1.jar;F:\usr\hadoop-lib\ehcache-core-2.4.4.jar;F:\usr\hadoop-lib\el-api.jar;F:\usr\hadoop-lib\gson-2.2.4.jar;F:\usr\hadoop-lib\guava-11.0.2.jar;F:\usr\hadoop-lib\guice-3.0.jar;F:\usr\hadoop-lib\guice-servlet-3.0.jar;F:\usr\hadoop-lib\hadoop-annotations-2.7.7.jar;F:\usr\hadoop-lib\hadoop-ant-2.7.7.jar;F:\usr\hadoop-lib\hadoop-archives-2.7.7.jar;F:\usr\hadoop-lib\hadoop-archives-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-archives-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-auth-2.7.7.jar;F:\usr\hadoop-lib\hadoop-aws-2.7.7.jar;F:\usr\hadoop-lib\hadoop-azure-2.7.7.jar;F:\usr\hadoop-lib\hadoop-common-2.7.7.jar;F:\usr\hadoop-lib\hadoop-common-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-common-2.7.7-tests.jar;F:\usr\hadoop-lib\hadoop-common-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-datajoin-2.7.7.jar;F:\usr\hadoop-lib\hadoop-datajoin-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-datajoin-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-distcp-2.7.7.jar;F:\usr\hadoop-lib\hadoop-distcp-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-distcp-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-extras-2.7.7.jar;F:\usr\hadoop-lib\hadoop-extras-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-extras-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-gridmix-2.7.7.jar;F:\usr\hadoop-lib\hadoop-gridmix-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-gridmix-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-hdfs-2.7.7.jar;F:\usr\hadoop-lib\hadoop-hdfs-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-hdfs-2.7.7-tests.jar;F:\usr\hadoop-lib\hadoop-hdfs-2.7.7-test-sources.jar;F:\usr\hado
op-lib\hadoop-hdfs-nfs-2.7.7.jar;F:\usr\hadoop-lib\hadoop-kms-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-app-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-app-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-app-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-common-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-common-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-common-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-core-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-core-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-core-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-plugins-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-plugins-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-hs-plugins-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-jobclient-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-jobclient-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-jobclient-2.7.7-tests.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-jobclient-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-shuffle-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-shuffle-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-client-shuffle-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-examples-2.7.7.jar;F:\usr\hadoop-lib\hadoop-mapreduce-examples-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-mapreduce-examples-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-nfs-2.7.7.jar;F:\usr\hadoop-lib\hadoop-openstack-2.7.7.jar;F:\usr\hadoop-lib\hadoop-rumen-2.7.7.jar;F:\usr\hadoop-lib\hadoop-rumen-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-rumen-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-sls-2.7.7.jar;F:\usr\hadoop-lib\hadoop-sl
s-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-sls-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-streaming-2.7.7.jar;F:\usr\hadoop-lib\hadoop-streaming-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-streaming-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-api-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-api-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-api-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-distributedshell-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-distributedshell-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-distributedshell-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-unmanaged-am-launcher-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-unmanaged-am-launcher-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-applications-unmanaged-am-launcher-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-client-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-client-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-client-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-common-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-common-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-common-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-registry-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-applicationhistoryservice-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-applicationhistoryservice-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-applicationhistoryservice-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-common-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-common-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-common-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-nodemanager-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-nodemanager-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-nodemanager-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-resourcemanager-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-resourcemanager-2.7.7-sources.jar;F:\usr\hadoop-
lib\hadoop-yarn-server-resourcemanager-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-sharedcachemanager-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-tests-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-tests-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-tests-2.7.7-tests.jar;F:\usr\hadoop-lib\hadoop-yarn-server-tests-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-web-proxy-2.7.7.jar;F:\usr\hadoop-lib\hadoop-yarn-server-web-proxy-2.7.7-sources.jar;F:\usr\hadoop-lib\hadoop-yarn-server-web-proxy-2.7.7-test-sources.jar;F:\usr\hadoop-lib\hamcrest-core-1.3.jar;F:\usr\hadoop-lib\hsqldb-2.0.0.jar;F:\usr\hadoop-lib\htrace-core-3.1.0-incubating.jar;F:\usr\hadoop-lib\httpclient-4.2.5.jar;F:\usr\hadoop-lib\httpcore-4.2.5.jar;F:\usr\hadoop-lib\jackson-annotations-2.2.3.jar;F:\usr\hadoop-lib\jackson-core-2.2.3.jar;F:\usr\hadoop-lib\jackson-core-asl-1.9.13.jar;F:\usr\hadoop-lib\jackson-databind-2.2.3.jar;F:\usr\hadoop-lib\jackson-jaxrs-1.9.13.jar;F:\usr\hadoop-lib\jackson-mapper-asl-1.9.13.jar;F:\usr\hadoop-lib\jackson-xc-1.9.13.jar;F:\usr\hadoop-lib\jasper.jar;F:\usr\hadoop-lib\jasper-el.jar;F:\usr\hadoop-lib\javax.inject-1.jar;F:\usr\hadoop-lib\java-xmlbuilder-0.4.jar;F:\usr\hadoop-lib\jaxb-api-2.2.2.jar;F:\usr\hadoop-lib\jaxb-impl-2.2.3-1.jar;F:\usr\hadoop-lib\jersey-client-1.9.jar;F:\usr\hadoop-lib\jersey-core-1.9.jar;F:\usr\hadoop-lib\jersey-guice-1.9.jar;F:\usr\hadoop-lib\jersey-json-1.9.jar;F:\usr\hadoop-lib\jersey-server-1.9.jar;F:\usr\hadoop-lib\jets3t-0.9.0.jar;F:\usr\hadoop-lib\jettison-1.1.jar;F:\usr\hadoop-lib\jetty-6.1.26.jar;F:\usr\hadoop-lib\jetty-sslengine-6.1.26.jar;F:\usr\hadoop-lib\jetty-util-6.1.26.jar;F:\usr\hadoop-lib\jline-0.9.94.jar;F:\usr\hadoop-lib\joda-time-2.10.jar;F:\usr\hadoop-lib\jsch-0.1.54.jar;F:\usr\hadoop-lib\json-simple-1.1.jar;F:\usr\hadoop-lib\jsp-api.jar;F:\usr\hadoop-lib\jsp-api-2.1.jar;F:\usr\hadoop-lib\jsr305-3.0.0.jar;F:\usr\hadoop-lib\jul-to-slf4j-1.7.10.jar;F:\usr\hadoop-lib\junit-4.11.jar;F:\usr\h
adoop-lib\leveldbjni-all-1.8.jar;F:\usr\hadoop-lib\log4j-1.2.17.jar;F:\usr\hadoop-lib\metrics-core-3.0.1.jar;F:\usr\hadoop-lib\mina-core-2.0.0-M5.jar;F:\usr\hadoop-lib\mockito-all-1.8.5.jar;F:\usr\hadoop-lib\netty-3.6.2.Final.jar;F:\usr\hadoop-lib\netty-all-4.0.23.Final.jar;F:\usr\hadoop-lib\paranamer-2.3.jar;F:\usr\hadoop-lib\protobuf-java-2.5.0.jar;F:\usr\hadoop-lib\servlet-api.jar;F:\usr\hadoop-lib\servlet-api-2.5.jar;F:\usr\hadoop-lib\slf4j-api-1.7.10.jar;F:\usr\hadoop-lib\slf4j-log4j12-1.7.10.jar;F:\usr\hadoop-lib\snappy-java-1.0.4.1.jar;F:\usr\hadoop-lib\stax-api-1.0-2.jar;F:\usr\hadoop-lib\tomcat-coyote.jar;F:\usr\hadoop-lib\tomcat-dbcp.jar;F:\usr\hadoop-lib\tomcat-i18n-es.jar;F:\usr\hadoop-lib\tomcat-i18n-fr.jar;F:\usr\hadoop-lib\tomcat-i18n-ja.jar;F:\usr\hadoop-lib\tomcat-juli.jar;F:\usr\hadoop-lib\xercesImpl-2.9.1.jar;F:\usr\hadoop-lib\xml-apis-1.3.04.jar;F:\usr\hadoop-lib\xmlenc-0.52.jar;F:\usr\hadoop-lib\xz-1.0.jar;F:\usr\hadoop-lib\zookeeper-3.4.6.jar;F:\usr\hadoop-lib\zookeeper-3.4.6-tests.jar;F:\usr\hadoop-lib\IKAnalyzer2012_u6.jar;F:\usr\hbase-lib\aopalliance-1.0.jar;F:\usr\hbase-lib\aopalliance-repackaged-2.5.0-b32.jar;F:\usr\hbase-lib\apacheds-i18n-2.0.0-M15.jar;F:\usr\hbase-lib\apacheds-kerberos-codec-2.0.0-M15.jar;F:\usr\hbase-lib\api-asn1-api-1.0.0-M20.jar;F:\usr\hbase-lib\api-util-1.0.0-M20.jar;F:\usr\hbase-lib\asm-3.1.jar;F:\usr\hbase-lib\audience-annotations-0.5.0.jar;F:\usr\hbase-lib\avro-1.7.7.jar;F:\usr\hbase-lib\commons-beanutils-core-1.8.0.jar;F:\usr\hbase-lib\commons-cli-1.2.jar;F:\usr\hbase-lib\commons-codec-1.10.jar;F:\usr\hbase-lib\commons-collections-3.2.2.jar;F:\usr\hbase-lib\commons-compress-1.4.1.jar;F:\usr\hbase-lib\commons-configuration-1.6.jar;F:\usr\hbase-lib\commons-crypto-1.0.0.jar;F:\usr\hbase-lib\commons-daemon-1.0.13.jar;F:\usr\hbase-lib\commons-digester-1.8.jar;F:\usr\hbase-lib\commons-httpclient-3.1.jar;F:\usr\hbase-lib\commons-io-2.5.jar;F:\usr\hbase-lib\commons-lang-2.6.jar;F:\usr\hbase-lib\commons-lang3-3.6.jar;F:\u
sr\hbase-lib\commons-logging-1.2.jar;F:\usr\hbase-lib\commons-math3-3.6.1.jar;F:\usr\hbase-lib\commons-net-3.1.jar;F:\usr\hbase-lib\curator-client-4.0.0.jar;F:\usr\hbase-lib\curator-framework-4.0.0.jar;F:\usr\hbase-lib\curator-recipes-4.0.0.jar;F:\usr\hbase-lib\disruptor-3.3.6.jar;F:\usr\hbase-lib\findbugs-annotations-1.3.9-1.jar;F:\usr\hbase-lib\gson-2.2.4.jar;F:\usr\hbase-lib\guava-11.0.2.jar;F:\usr\hbase-lib\guice-3.0.jar;F:\usr\hbase-lib\guice-servlet-3.0.jar;F:\usr\hbase-lib\hadoop-annotations-2.7.7.jar;F:\usr\hbase-lib\hadoop-auth-2.7.7.jar;F:\usr\hbase-lib\hadoop-client-2.7.7.jar;F:\usr\hbase-lib\hadoop-common-2.7.7.jar;F:\usr\hbase-lib\hadoop-common-2.7.7-tests.jar;F:\usr\hbase-lib\hadoop-distcp-2.7.7.jar;F:\usr\hbase-lib\hadoop-hdfs-2.7.7.jar;F:\usr\hbase-lib\hadoop-hdfs-2.7.7-tests.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-app-2.7.7.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-common-2.7.7.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-core-2.7.7.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-hs-2.7.7.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-jobclient-2.7.7.jar;F:\usr\hbase-lib\hadoop-mapreduce-client-shuffle-2.7.7.jar;F:\usr\hbase-lib\hadoop-minicluster-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-api-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-client-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-common-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-server-applicationhistoryservice-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-server-common-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-server-nodemanager-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-server-resourcemanager-2.7.7.jar;F:\usr\hbase-lib\hadoop-yarn-server-tests-2.7.7-tests.jar;F:\usr\hbase-lib\hadoop-yarn-server-web-proxy-2.7.7.jar;F:\usr\hbase-lib\hamcrest-core-1.3.jar;F:\usr\hbase-lib\hbase-annotations-2.0.5.jar;F:\usr\hbase-lib\hbase-annotations-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-client-2.0.5.jar;F:\usr\hbase-lib\hbase-common-2.0.5.jar;F:\usr\hbase-lib\hbase-common-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-endpoint-2.0.5.jar;F:\usr\
hbase-lib\hbase-examples-2.0.5.jar;F:\usr\hbase-lib\hbase-external-blockcache-2.0.5.jar;F:\usr\hbase-lib\hbase-hadoop2-compat-2.0.5.jar;F:\usr\hbase-lib\hbase-hadoop2-compat-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-hadoop-compat-2.0.5.jar;F:\usr\hbase-lib\hbase-hadoop-compat-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-http-2.0.5.jar;F:\usr\hbase-lib\hbase-it-2.0.5.jar;F:\usr\hbase-lib\hbase-it-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-mapreduce-2.0.5.jar;F:\usr\hbase-lib\hbase-mapreduce-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-metrics-2.0.5.jar;F:\usr\hbase-lib\hbase-metrics-api-2.0.5.jar;F:\usr\hbase-lib\hbase-procedure-2.0.5.jar;F:\usr\hbase-lib\hbase-protocol-2.0.5.jar;F:\usr\hbase-lib\hbase-protocol-shaded-2.0.5.jar;F:\usr\hbase-lib\hbase-replication-2.0.5.jar;F:\usr\hbase-lib\hbase-resource-bundle-2.0.5.jar;F:\usr\hbase-lib\hbase-rest-2.0.5.jar;F:\usr\hbase-lib\hbase-rsgroup-2.0.5.jar;F:\usr\hbase-lib\hbase-rsgroup-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-server-2.0.5.jar;F:\usr\hbase-lib\hbase-server-2.0.5-tests.jar;F:\usr\hbase-lib\hbase-shaded-miscellaneous-2.1.0.jar;F:\usr\hbase-lib\hbase-shaded-netty-2.1.0.jar;F:\usr\hbase-lib\hbase-shaded-protobuf-2.1.0.jar;F:\usr\hbase-lib\hbase-shell-2.0.5.jar;F:\usr\hbase-lib\hbase-testing-util-2.0.5.jar;F:\usr\hbase-lib\hbase-thrift-2.0.5.jar;F:\usr\hbase-lib\hbase-zookeeper-2.0.5.jar;F:\usr\hbase-lib\hbase-zookeeper-2.0.5-tests.jar;F:\usr\hbase-lib\hk2-api-2.5.0-b32.jar;F:\usr\hbase-lib\hk2-locator-2.5.0-b32.jar;F:\usr\hbase-lib\hk2-utils-2.5.0-b32.jar;F:\usr\hbase-lib\htrace-core-3.2.0-incubating.jar;F:\usr\hbase-lib\htrace-core4-4.2.0-incubating.jar;F:\usr\hbase-lib\httpclient-4.5.3.jar;F:\usr\hbase-lib\httpcore-4.4.6.jar;F:\usr\hbase-lib\jackson-annotations-2.9.0.jar;F:\usr\hbase-lib\jackson-core-2.9.2.jar;F:\usr\hbase-lib\jackson-core-asl-1.9.13.jar;F:\usr\hbase-lib\jackson-databind-2.9.2.jar;F:\usr\hbase-lib\jackson-jaxrs-1.8.3.jar;F:\usr\hbase-lib\jackson-jaxrs-base-2.9.2.jar;F:\usr\hbase-lib\jackson-jaxrs-json-provider-2.9.
2.jar;F:\usr\hbase-lib\jackson-mapper-asl-1.9.13.jar;F:\usr\hbase-lib\jackson-module-jaxb-annotations-2.9.2.jar;F:\usr\hbase-lib\jackson-xc-1.8.3.jar;F:\usr\hbase-lib\jamon-runtime-2.4.1.jar;F:\usr\hbase-lib\javassist-3.20.0-GA.jar;F:\usr\hbase-lib\javax.annotation-api-1.2.jar;F:\usr\hbase-lib\javax.el-3.0.1-b08.jar;F:\usr\hbase-lib\javax.inject-2.5.0-b32.jar;F:\usr\hbase-lib\javax.servlet.jsp.jstl-1.2.0.v201105211821.jar;F:\usr\hbase-lib\javax.servlet.jsp.jstl-1.2.2.jar;F:\usr\hbase-lib\javax.servlet.jsp-2.3.2.jar;F:\usr\hbase-lib\javax.servlet.jsp-api-2.3.1.jar;F:\usr\hbase-lib\javax.servlet-api-3.1.0.jar;F:\usr\hbase-lib\javax.ws.rs-api-2.0.1.jar;F:\usr\hbase-lib\java-xmlbuilder-0.4.jar;F:\usr\hbase-lib\jaxb-api-2.2.12.jar;F:\usr\hbase-lib\jaxb-impl-2.2.3-1.jar;F:\usr\hbase-lib\jcodings-1.0.18.jar;F:\usr\hbase-lib\jersey-client-2.25.1.jar;F:\usr\hbase-lib\jersey-common-2.25.1.jar;F:\usr\hbase-lib\jersey-container-servlet-core-2.25.1.jar;F:\usr\hbase-lib\jersey-guava-2.25.1.jar;F:\usr\hbase-lib\jersey-media-jaxb-2.25.1.jar;F:\usr\hbase-lib\jersey-server-2.25.1.jar;F:\usr\hbase-lib\jets3t-0.9.0.jar;F:\usr\hbase-lib\jettison-1.3.8.jar;F:\usr\hbase-lib\jetty-6.1.26.jar;F:\usr\hbase-lib\jetty-http-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-io-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-jmx-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-jsp-9.2.19.v20160908.jar;F:\usr\hbase-lib\jetty-schemas-3.1.M0.jar;F:\usr\hbase-lib\jetty-security-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-server-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-servlet-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-sslengine-6.1.26.jar;F:\usr\hbase-lib\jetty-util-6.1.26.jar;F:\usr\hbase-lib\jetty-util-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-util-ajax-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-webapp-9.3.19.v20170502.jar;F:\usr\hbase-lib\jetty-xml-9.3.19.v20170502.jar;F:\usr\hbase-lib\joni-2.1.11.jar;F:\usr\hbase-lib\jsch-0.1.54.jar;F:\usr\hbase-lib\junit-4.12.jar;F:\usr\hbase-lib\leveldbjni-all-1.8.jar;
F:\usr\hbase-lib\libthrift-0.12.0.jar;F:\usr\hbase-lib\log4j-1.2.17.jar;F:\usr\hbase-lib\metrics-core-3.2.1.jar;F:\usr\hbase-lib\netty-all-4.0.23.Final.jar;F:\usr\hbase-lib\org.eclipse.jdt.core-3.8.2.v20130121.jar;F:\usr\hbase-lib\osgi-resource-locator-1.0.1.jar;F:\usr\hbase-lib\paranamer-2.3.jar;F:\usr\hbase-lib\protobuf-java-2.5.0.jar;F:\usr\hbase-lib\slf4j-api-1.7.25.jar;F:\usr\hbase-lib\slf4j-log4j12-1.7.25.jar;F:\usr\hbase-lib\snappy-java-1.0.5.jar;F:\usr\hbase-lib\spymemcached-2.12.2.jar;F:\usr\hbase-lib\validation-api-1.1.0.Final.jar;F:\usr\hbase-lib\xmlenc-0.52.jar;F:\usr\hbase-lib\xz-1.0.jar;F:\usr\hbase-lib\zookeeper-3.4.10.jar;D:\eclipse\eclipse\plugins\org.junit.jupiter.api_5.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.jupiter.engine_5.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.jupiter.migrationsupport_5.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.jupiter.params_5.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.platform.commons_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.platform.engine_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.platform.launcher_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.platform.runner_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.platform.suite.api_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit.vintage.engine_4.12.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.opentest4j_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.apiguardian_1.0.0.v20170910-2246.jar;D:\eclipse\eclipse\plugins\org.junit_4.12.0.v201504281640\junit.jar;D:\eclipse\eclipse\plugins\org.hamcrest.core_1.3.0.v201303031735.jar;G:\AllAPPsetplace\eclipseworkplace\HBaseDemo\bin 2019-04-07 00:04:01,665 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.library.path=C:\Program Files\Java\jre1.8.0_161\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;%HADOOP_HOME\bin;%C:\Program 
Files (x86)\NetSarang\Xftp 6\;C:\Program Files\ImageMagick-7.0.8-Q16;C:\Users\Administrator\AppData\Local\Programs\Python\Python36\;C:\Python27\Scripts\;E:\Allapplicatiansplace\mysql\bin;D:\数据库\redis-windows-master\downloads\redis-64.3.0.503;D:;浏览器\phantomjs-2.1.1-windows\bin;D:;python;C:\Users\Administrator\AppData\Local\Google\Chrome\Application;C:\ProgramData\Oracle\Java\javapath;C:\Users\Administrator\AppData\Local\Programs\Python\Python36\Scripts;C:\Program Files (x86)\ClockworkMod\Universal Adb Driver;C:\Python27;%CommonProgramFiles%\Microsoft Shared\Windows Live;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;C:\Program Files\Microsoft SQL Server\130\Tools\Binn\;%USERPROFILE%\.dnx\bin;C:\Program Files\Microsoft DNX\Dnvm\;E:\Allapplicatiansplace\Git\Git\cmd;E:\Allapplicatiansplace\SVN\bin;E:\Allapplicatiansplace\nodejs\;E:\Allapplicatiansplace\visualSVN\bin;C:\Program Files (x86)\Windows Kits\8.1\Windows Performance Toolkit\;C:\Program Files (x86)\GitExtensions\;C:\Program Files\dotnet\;C:\Program Files\Microsoft SQL Server\Client SDK\ODBC\110\Tools\Binn\;C:\Program Files (x86)\Microsoft SQL Server\120\Tools\Binn\;C:\Program Files\Microsoft SQL Server\120\Tools\Binn\;C:\Program Files\Microsoft SQL Server\120\DTS\Binn\;D:\PHP\php-7.2.3-Win32-VC15-x64\ext;D:\PHP\php-7.2.3-Win32-VC15-x64;C:\Users\Administrator\AppData\Local\Programs\Python\Python36\Scripts\;C:\Program Files\Common Files\Microsoft Shared\Windows Live;C:\Program Files\MySQL\MySQL Server 6.0\bin;C:\Users\Administrator\AppData\Roaming\npm;E:\Allapplicatiansplace\Microsoft VS Code\bin;C:\Users\Administrator\AppData\Local\GitHubDesktop\bin;. 

    2019-04-07 00:04:01,665 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\
    2019-04-07 00:04:01,665 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.compiler=<NA>
    2019-04-07 00:04:01,666 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.name=Windows 7
    2019-04-07 00:04:01,666 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.arch=amd64
    2019-04-07 00:04:01,666 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.version=6.1
    2019-04-07 00:04:01,666 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.name=Administrator
    2019-04-07 00:04:01,670 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.home=C:\Users\Administrator
    2019-04-07 00:04:01,671 INFO zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.dir=G:\AllAPPsetplace\eclipseworkplace\HBaseDemo
    2019-04-07 00:04:01,675 INFO zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=node02:2181,node03:2181,node04:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$6/1990143034@750a46c9
    2019-04-07 00:04:01,806 INFO zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(975)) - Opening socket connection to server node04/192.168.30.139:2181. Will not attempt to authenticate using SASL (unknown error)
    2019-04-07 00:04:01,810 INFO zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(852)) - Socket connection established to node04/192.168.30.139:2181, initiating session
    2019-04-07 00:04:01,874 INFO zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1235)) - Session establishment complete on server node04/192.168.30.139:2181, sessionid = 0x30002fd41930000, negotiated timeout = 40000
    2019-04-07 00:04:06,131 WARN mapreduce.JobResourceUploader (JobResourceUploader.java:uploadFiles(64)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    2019-04-07 00:04:06,196 WARN mapreduce.JobResourceUploader (JobResourceUploader.java:uploadFiles(171)) - No job jar file set. User classes may not be found. See Job or Job#setJar(String).
    2019-04-07 00:04:11,222 INFO input.FileInputFormat (FileInputFormat.java:listStatus(283)) - Total input paths to process : 1
    2019-04-07 00:04:11,414 INFO mapreduce.JobSubmitter (JobSubmitter.java:submitJobInternal(198)) - number of splits:1
    2019-04-07 00:04:11,545 INFO mapreduce.JobSubmitter (JobSubmitter.java:printTokens(287)) - Submitting tokens for job: job_local1129811918_0001
    2019-04-07 00:04:11,828 INFO mapreduce.Job (Job.java:submit(1294)) - The url to track the job: http://localhost:8080/
    2019-04-07 00:04:11,829 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1339)) - Running job: job_local1129811918_0001
    2019-04-07 00:04:11,830 INFO mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(471)) - OutputCommitter set in config null
    2019-04-07 00:04:11,881 INFO mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(489)) - OutputCommitter is org.apache.hadoop.hbase.mapreduce.TableOutputCommitter
    2019-04-07 00:04:11,982 INFO mapred.LocalJobRunner (LocalJobRunner.java:runTasks(448)) - Waiting for map tasks
    2019-04-07 00:04:11,984 INFO mapred.LocalJobRunner (LocalJobRunner.java:run(224)) - Starting task: attempt_local1129811918_0001_m_000000_0
    2019-04-07 00:04:12,146 INFO util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(192)) - ProcfsBasedProcessTree currently is supported only on Linux.
    2019-04-07 00:04:12,273 INFO mapred.Task (Task.java:initialize(614)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@9982e96
    2019-04-07 00:04:12,279 INFO mapred.MapTask (MapTask.java:runNewMapper(756)) - Processing split: hdfs://node01:8020/user/hive_remote/warehouse/wc/wc.txt:0+41
    2019-04-07 00:04:12,391 INFO mapred.MapTask (MapTask.java:setEquator(1205)) - (EQUATOR) 0 kvi 26214396(104857584)
    2019-04-07 00:04:12,392 INFO mapred.MapTask (MapTask.java:init(998)) - mapreduce.task.io.sort.mb: 100
    2019-04-07 00:04:12,392 INFO mapred.MapTask (MapTask.java:init(999)) - soft limit at 83886080
    2019-04-07 00:04:12,392 INFO mapred.MapTask (MapTask.java:init(1000)) - bufstart = 0; bufvoid = 104857600
    2019-04-07 00:04:12,392 INFO mapred.MapTask (MapTask.java:init(1001)) - kvstart = 26214396; length = 6553600
    2019-04-07 00:04:12,425 INFO mapred.MapTask (MapTask.java:createSortingCollector(403)) - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    2019-04-07 00:04:12,740 INFO zookeeper.ReadOnlyZKClient (ReadOnlyZKClient.java:close(340)) - Close zookeeper connection 0x1698fc68 to node02:2181,node03:2181,node04:2181
    2019-04-07 00:04:12,764 INFO zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x30002fd41930000 closed
    2019-04-07 00:04:12,764 INFO zookeeper.ClientCnxn (ClientCnxn.java:run(512)) - EventThread shut down
    2019-04-07 00:04:12,831 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1360)) - Job job_local1129811918_0001 running in uber mode : false
    2019-04-07 00:04:12,834 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1367)) - map 0% reduce 0%
    2019-04-07 00:04:13,187 INFO mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) -
    2019-04-07 00:04:13,204 INFO mapred.MapTask (MapTask.java:flush(1460)) - Starting flush of map output
    2019-04-07 00:04:13,204 INFO mapred.MapTask (MapTask.java:flush(1482)) - Spilling map output
    2019-04-07 00:04:13,205 INFO mapred.MapTask (MapTask.java:flush(1483)) - bufstart = 0; bufend = 69; bufvoid = 104857600
    2019-04-07 00:04:13,205 INFO mapred.MapTask (MapTask.java:flush(1485)) - kvstart = 26214396(104857584); kvend = 26214372(104857488); length = 25/6553600
    2019-04-07 00:04:13,245 INFO mapred.MapTask (MapTask.java:sortAndSpill(1667)) - Finished spill 0
    2019-04-07 00:04:13,267 INFO mapred.Task (Task.java:done(1046)) - Task:attempt_local1129811918_0001_m_000000_0 is done. And is in the process of committing
    2019-04-07 00:04:13,301 INFO mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - map
    2019-04-07 00:04:13,301 INFO mapred.Task (Task.java:sendDone(1184)) - Task 'attempt_local1129811918_0001_m_000000_0' done.
    2019-04-07 00:04:13,311 INFO mapred.Task (Task.java:done(1080)) - Final Counters for attempt_local1129811918_0001_m_000000_0: Counters: 22
        File System Counters
            FILE: Number of bytes read=178
            FILE: Number of bytes written=399623
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=41
            HDFS: Number of bytes written=0
            HDFS: Number of read operations=3
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=0
        Map-Reduce Framework
            Map input records=4
            Map output records=7
            Map output bytes=69
            Map output materialized bytes=89
            Input split bytes=120
            Combine input records=0
            Spilled Records=7
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=112
            Total committed heap usage (bytes)=373293056
        File Input Format Counters
            Bytes Read=41
    2019-04-07 00:04:13,312 INFO mapred.LocalJobRunner (LocalJobRunner.java:run(249)) - Finishing task: attempt_local1129811918_0001_m_000000_0
    2019-04-07 00:04:13,316 INFO mapred.LocalJobRunner (LocalJobRunner.java:runTasks(456)) - map task executor complete.
    2019-04-07 00:04:13,321 INFO mapred.LocalJobRunner (LocalJobRunner.java:runTasks(448)) - Waiting for reduce tasks
    2019-04-07 00:04:13,321 INFO mapred.LocalJobRunner (LocalJobRunner.java:run(302)) - Starting task: attempt_local1129811918_0001_r_000000_0
    2019-04-07 00:04:13,382 INFO util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(192)) - ProcfsBasedProcessTree currently is supported only on Linux.
    2019-04-07 00:04:13,501 INFO mapred.Task (Task.java:initialize(614)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@681277ee
    2019-04-07 00:04:13,518 INFO mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@5f1b3a7c
    2019-04-07 00:04:13,541 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:<init>(205)) - MergerManager: memoryLimit=334338464, maxSingleShuffleLimit=83584616, mergeThreshold=220663392, ioSortFactor=10, memToMemMergeOutputsThreshold=10
    2019-04-07 00:04:13,546 INFO reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1129811918_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events
    2019-04-07 00:04:13,605 INFO reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(144)) - localfetcher#1 about to shuffle output of map attempt_local1129811918_0001_m_000000_0 decomp: 85 len: 89 to MEMORY
    2019-04-07 00:04:13,613 INFO reduce.InMemoryMapOutput (InMemoryMapOutput.java:shuffle(100)) - Read 85 bytes from map-output for attempt_local1129811918_0001_m_000000_0
    2019-04-07 00:04:13,625 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(319)) - closeInMemoryFile -> map-output of size: 85, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->85
    2019-04-07 00:04:13,627 INFO reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning
    2019-04-07 00:04:13,628 INFO mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - 1 / 1 copied.
    2019-04-07 00:04:13,628 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(691)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs
    2019-04-07 00:04:13,660 INFO mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments
    2019-04-07 00:04:13,660 INFO mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 76 bytes
    2019-04-07 00:04:13,662 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(758)) - Merged 1 segments, 85 bytes to disk to satisfy reduce memory limit
    2019-04-07 00:04:13,663 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(788)) - Merging 1 files, 89 bytes from disk
    2019-04-07 00:04:13,664 INFO reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(803)) - Merging 0 segments, 0 bytes from memory into reduce
    2019-04-07 00:04:13,664 INFO mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments
    2019-04-07 00:04:13,667 INFO mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 76 bytes
    2019-04-07 00:04:13,668 INFO mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - 1 / 1 copied.
    2019-04-07 00:04:13,670 INFO zookeeper.ReadOnlyZKClient (ReadOnlyZKClient.java:<init>(135)) - Connect 0x16b2d4ee to node02:2181,node03:2181,node04:2181 with session timeout=90000ms, retries 30, retry interval 1000ms, keepAlive=60000ms
    2019-04-07 00:04:13,671 INFO zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=node02:2181,node03:2181,node04:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$6/1990143034@750a46c9
    2019-04-07 00:04:13,679 INFO zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(975)) - Opening socket connection to server node03/192.168.30.138:2181.
Will not attempt to authenticate using SASL (unknown error) 2019-04-07 00:04:13,680 INFO zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(852)) - Socket connection established to node03/192.168.30.138:2181, initiating session 2019-04-07 00:04:13,699 INFO zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1235)) - Session establishment complete on server node03/192.168.30.138:2181, sessionid = 0x200035524030000, negotiated timeout = 40000 2019-04-07 00:04:13,739 INFO mapreduce.TableOutputFormat (TableOutputFormat.java:<init>(107)) - Created table instance for wc 2019-04-07 00:04:13,739 INFO Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1243)) - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords 2019-04-07 00:04:13,837 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1367)) - map 100% reduce 0% 2019-04-07 00:04:14,625 INFO zookeeper.ReadOnlyZKClient (ReadOnlyZKClient.java:close(340)) - Close zookeeper connection 0x16b2d4ee to node02:2181,node03:2181,node04:2181 2019-04-07 00:04:14,628 INFO mapred.Task (Task.java:done(1046)) - Task:attempt_local1129811918_0001_r_000000_0 is done. And is in the process of committing 2019-04-07 00:04:14,632 INFO mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - reduce > reduce 2019-04-07 00:04:14,632 INFO mapred.Task (Task.java:sendDone(1184)) - Task 'attempt_local1129811918_0001_r_000000_0' done. 
2019-04-07 00:04:14,633 INFO mapred.Task (Task.java:done(1080)) - Final Counters for attempt_local1129811918_0001_r_000000_0: Counters: 29 File System Counters FILE: Number of bytes read=388 FILE: Number of bytes written=399712 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=41 HDFS: Number of bytes written=0 HDFS: Number of read operations=3 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Map-Reduce Framework Combine input records=0 Combine output records=0 Reduce input groups=5 Reduce shuffle bytes=89 Reduce input records=7 Reduce output records=5 Spilled Records=7 Shuffled Maps =1 Failed Shuffles=0 Merged Map outputs=1 GC time elapsed (ms)=0 Total committed heap usage (bytes)=373293056 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Output Format Counters Bytes Written=0 2019-04-07 00:04:14,633 INFO mapred.LocalJobRunner (LocalJobRunner.java:run(325)) - Finishing task: attempt_local1129811918_0001_r_000000_0 2019-04-07 00:04:14,635 INFO mapred.LocalJobRunner (LocalJobRunner.java:runTasks(456)) - reduce task executor complete. 
2019-04-07 00:04:14,657 INFO zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x200035524030000 closed 2019-04-07 00:04:14,658 INFO zookeeper.ClientCnxn (ClientCnxn.java:run(512)) - EventThread shut down 2019-04-07 00:04:14,837 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1367)) - map 100% reduce 100% 2019-04-07 00:04:14,838 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1378)) - Job job_local1129811918_0001 completed successfully 2019-04-07 00:04:14,851 INFO mapreduce.Job (Job.java:monitorAndPrintJob(1385)) - Counters: 35 File System Counters FILE: Number of bytes read=566 FILE: Number of bytes written=799335 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=82 HDFS: Number of bytes written=0 HDFS: Number of read operations=6 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Map-Reduce Framework Map input records=4 Map output records=7 Map output bytes=69 Map output materialized bytes=89 Input split bytes=120 Combine input records=0 Combine output records=0 Reduce input groups=5 Reduce shuffle bytes=89 Reduce input records=7 Reduce output records=5 Spilled Records=14 Shuffled Maps =1 Failed Shuffles=0 Merged Map outputs=1 GC time elapsed (ms)=112 Total committed heap usage (bytes)=746586112 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Input Format Counters Bytes Read=41 File Output Format Counters Bytes Written=0
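The counters in the log can be cross-checked against wc.txt by hand. The sketch below is not part of the job; it only recomputes, in plain Java, the numbers the log reports as `Map input records=4`, `Map output records=7`, `Reduce input groups=5` and `Map output bytes=69`. The class name `CounterCheck` and its helper methods are made up for this illustration. The byte calculation assumes each short `Text` key is serialized as a 1-byte length prefix plus its bytes and each `IntWritable` as 4 bytes, which is an assumption about the serialization rather than something the post states.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class CounterCheck {
    // The four lines of wc.txt as listed at the top of this post.
    static final List<String> LINES = Arrays.asList(
            "hive hadoop hello", "hello world", "hbase", "hive");

    // Mirrors WCMapper + WCReducer: split on a single space, sum 1 per token.
    static Map<String, Integer> countWords(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    // Number of (word, 1) pairs the mapper would emit.
    static int mapOutputRecords(List<String> lines) {
        int records = 0;
        for (String line : lines) {
            records += line.split(" ").length;
        }
        return records;
    }

    // Assumed serialized size per record:
    // 1 length byte + UTF-8 bytes of the word (Text) + 4 bytes (IntWritable).
    static int mapOutputBytes(List<String> lines) {
        int bytes = 0;
        for (String line : lines) {
            for (String word : line.split(" ")) {
                bytes += 1 + word.getBytes(StandardCharsets.UTF_8).length + 4;
            }
        }
        return bytes;
    }

    public static void main(String[] args) {
        System.out.println("Map input records  = " + LINES.size());
        System.out.println("Map output records = " + mapOutputRecords(LINES));
        System.out.println("Reduce input groups = " + countWords(LINES).size());
        System.out.println("Map output bytes   = " + mapOutputBytes(LINES));
        System.out.println("Expected rows in wc: " + countWords(LINES));
    }
}
```

The last line prints the word counts that the `wc` table should end up holding: `{hadoop=1, hbase=1, hello=2, hive=2, world=1}`, five rows, matching `Reduce output records=5`.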

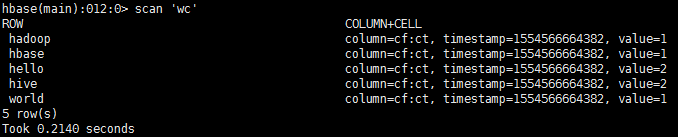

6. View the results in HBase
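In the HBase shell, `scan 'wc'` lists the rows of the table. Because WCReducer stores the count with `(sum + "").getBytes()`, each `cf:ct` cell holds the decimal digits as text, so the value should show up as a readable number rather than as escaped bytes. The sketch below uses only the JDK to show the difference between the two encodings; `CellValueDemo` and its methods are hypothetical names, and the 4-byte form is included purely for contrast with what a binary integer encoding would look like.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class CellValueDemo {
    // Same idea as WCReducer: store the count as its decimal string.
    // UTF-8 is spelled out here; the reducer uses the platform default charset,
    // which gives the same bytes for ASCII digits on common charsets.
    static byte[] encodeAsText(int count) {
        return Integer.toString(count).getBytes(StandardCharsets.UTF_8);
    }

    // Reads a text-encoded cell value back into an int.
    static int decodeFromText(byte[] cellValue) {
        return Integer.parseInt(new String(cellValue, StandardCharsets.UTF_8));
    }

    // For contrast only: a 4-byte big-endian integer, not what this job writes.
    static byte[] encodeAsBinary(int count) {
        return ByteBuffer.allocate(4).putInt(count).array();
    }

    public static void main(String[] args) {
        byte[] text = encodeAsText(2);
        System.out.println("text form:   " + text.length + " byte(s), decodes to "
                + decodeFromText(text));
        System.out.println("binary form: " + encodeAsBinary(2).length + " byte(s)");
    }
}
```

Any client that reads the table back has to parse the cell as a string first, as `decodeFromText` does, before treating it as a number.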

-----------------------------

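One more thing: for reasons I don't understand, I have to disconnect my machine from the network while running the MR program, otherwise the virtual machines' IP addresses change, even though I have already configured them as static addresses. It is really strange. If anyone knows the cause, please leave a comment. Thanks in advance.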